In today’s digital world, where thousands of users access the same application or website at the same time, managing traffic is a big challenge. Imagine what would happen if every user tried to send hundreds of requests per second — your server might slow down or even crash!

That’s where Rate Limiting comes in. It’s a simple but powerful concept used to control how many requests a user, system, or service can make within a certain period of time. This ensures fair usage, protects your backend systems, and improves overall reliability.

Let’s understand what it means and explore the main algorithms and types of rate limiting — with easy examples you can relate to.

What is Rate Limiting?

Rate limiting is like setting a “speed limit” for your APIs or services.

For example, imagine a toll booth that allows only 10 cars per minute to pass through. If the 11th car comes, it has to wait until the next minute begins.

Similarly, in software systems, rate limiting helps control how frequently someone can access an API, website, or online resource.

Common reasons for using rate limiting:

- To prevent abuse or spamming

- To avoid server overload

- To ensure fair access for all users

- To manage costs and resources efficiently

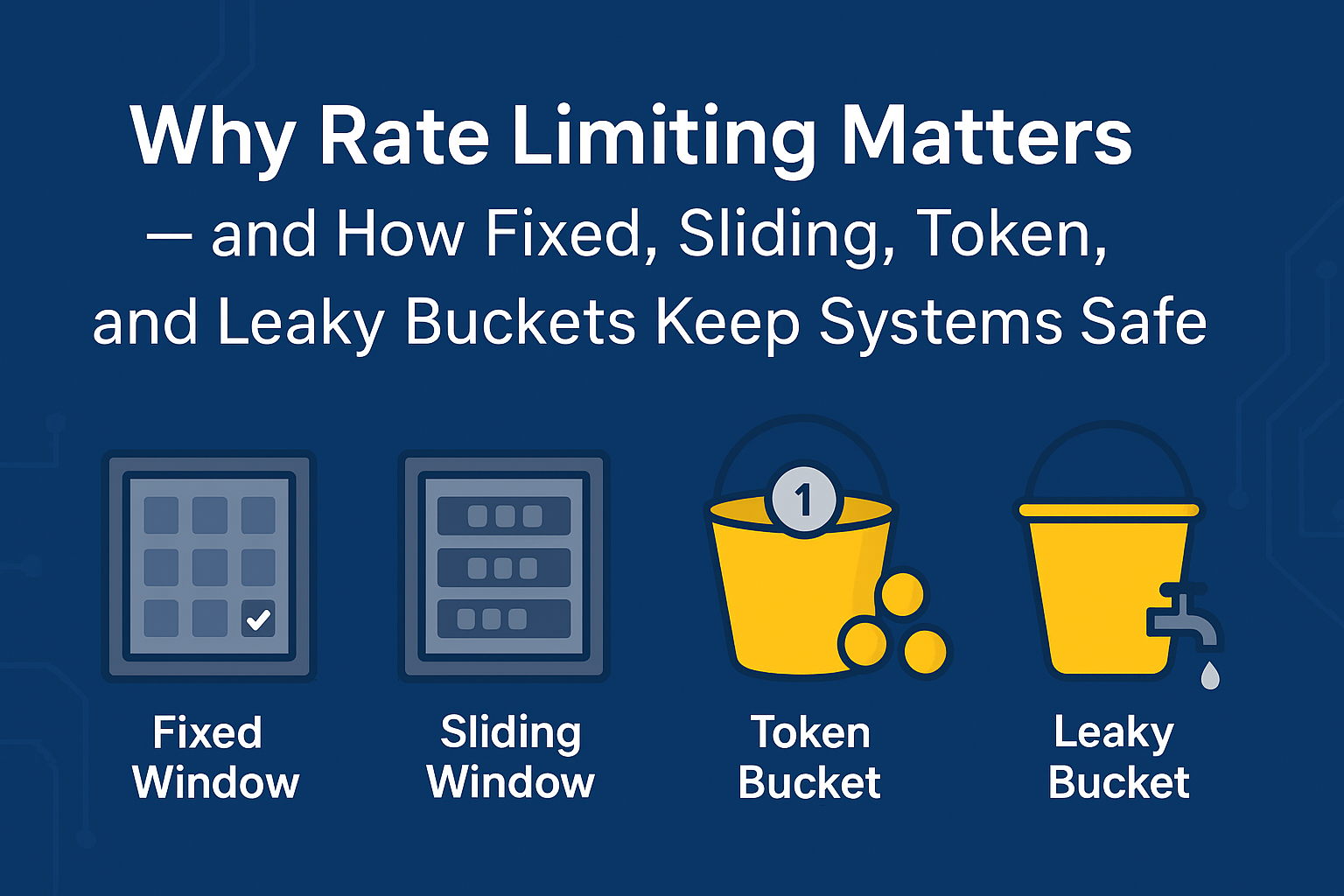

Types of Rate Limiting Algorithms:

There are several techniques used to apply rate limiting. Each one works a bit differently, depending on the use case. Let’s go through them one by one.

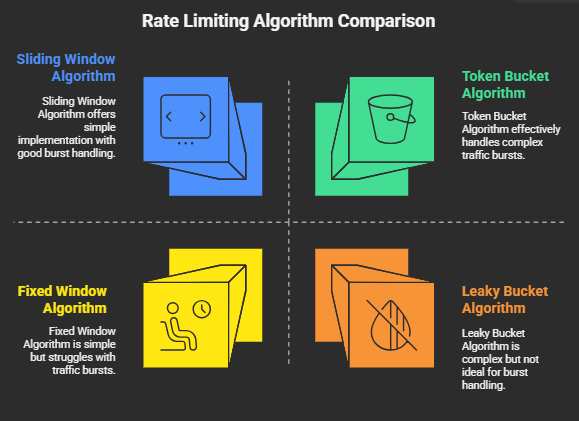

Fixed Window Algorithm

In the Fixed Window method, time is divided into equal intervals, for example, every minute or every second. The system counts how many requests come within that time window. If the number exceeds the allowed limit, all extra requests are blocked until the next window starts.

Example:

Suppose your login API allows 10 requests per minute. If a user sends 10 requests between 12:00:00 and 12:00:59, any additional requests will be blocked until 12:01:00.

Pros: Simple to implement.

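Cons: Allows bursts at the edge of two windows. A user could send 10 requests at 12:00:59 and 10 more at 12:01:00, getting 20 requests through in about one second, which is double the intended limit.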
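Here is a minimal sketch of a fixed-window counter in Python. The class name `FixedWindowLimiter` and its `allow` method are made up for illustration, and the current time is passed in as plain seconds so the behaviour is easy to follow; a real service would read a clock instead.

```python
class FixedWindowLimiter:
    """Allow at most `limit` requests per fixed window (illustrative sketch)."""

    def __init__(self, limit: int, window_seconds: int):
        self.limit = limit
        self.window_seconds = window_seconds
        self.current_window = None  # index of the window being counted
        self.count = 0

    def allow(self, now: float) -> bool:
        window = int(now // self.window_seconds)
        if window != self.current_window:
            # A new window has started, so the counter resets.
            self.current_window = window
            self.count = 0
        if self.count < self.limit:
            self.count += 1
            return True
        return False


limiter = FixedWindowLimiter(limit=10, window_seconds=60)

# 12 requests at second 59: the first 10 pass, the last 2 are blocked.
results = [limiter.allow(59.0) for _ in range(12)]

# One second later a new window begins and 10 more pass straight away.
burst = [limiter.allow(60.0) for _ in range(10)]
```

The second list shows the window-edge problem: 20 requests get through within about one second even though the limit is 10 per minute.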

Sliding Window Algorithm

The Sliding Window method improves on the Fixed Window approach. Instead of resetting the counter at fixed intervals, the window “slides” along with the clock. For every incoming request, the system counts only the requests made during the last X seconds or minutes.

Example:

If you are allowed 10 requests per minute, and you send 5 at 12:00:30 and 5 more at 12:00:45, then by 12:01:30 the first 5 requests expire — allowing you to send 5 new ones.

This makes limiting fairer and smoother, because the limit holds for any 60-second span and not only for spans that line up with the clock.
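One common way to build this is a "sliding window log" that remembers the timestamp of each accepted request. The sketch below assumes that variant; `SlidingWindowLimiter` is a hypothetical name, and time is again passed in as seconds (12:00:30 becomes 30, 12:01:30 becomes 90).

```python
from collections import deque


class SlidingWindowLimiter:
    """Sliding window log: keep timestamps of accepted requests (sketch)."""

    def __init__(self, limit: int, window_seconds: float):
        self.limit = limit
        self.window_seconds = window_seconds
        self.timestamps = deque()  # oldest accepted request on the left

    def allow(self, now: float) -> bool:
        # Forget requests that have slid out of the window.
        while self.timestamps and now - self.timestamps[0] >= self.window_seconds:
            self.timestamps.popleft()
        if len(self.timestamps) < self.limit:
            self.timestamps.append(now)
            return True
        return False


limiter = SlidingWindowLimiter(limit=10, window_seconds=60)

early = [limiter.allow(30.0) for _ in range(5)]     # 5 requests at 12:00:30
later = [limiter.allow(45.0) for _ in range(5)]     # 5 requests at 12:00:45
blocked = limiter.allow(89.0)                       # 12:01:29, window still full
reopened = [limiter.allow(90.0) for _ in range(6)]  # 12:01:30, first 5 expired
```

At second 90 the five requests from second 30 have expired, so five new ones pass and the sixth is blocked. The trade-off is memory: this variant stores one timestamp per accepted request.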

Token Bucket Algorithm

Think of a Token Bucket as a bucket that holds a limited number of tokens. Each request takes one token. Tokens are added to the bucket at a fixed rate over time, but never beyond the bucket's capacity.

When the bucket has tokens, requests are processed instantly. If the bucket is empty, requests must wait until new tokens arrive (some implementations reject them instead).

Example:

A bucket can hold 10 tokens, and 1 token is added every second.

You can make 10 requests immediately (using all tokens) and then wait for tokens to refill gradually.

This algorithm is great when your application needs to absorb occasional bursts of traffic: a burst can be as large as the bucket's capacity, while the long-run average stays at the refill rate.
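The sketch below shows the idea in Python. The `TokenBucket` class and its parameter names are assumptions for illustration. It refills lazily (tokens are topped up whenever a request arrives) and rejects a request when the bucket is empty; a waiting variant would sleep until a token is available.

```python
class TokenBucket:
    """Token bucket with lazy refill (illustrative sketch)."""

    def __init__(self, capacity: int, refill_rate: float, now: float = 0.0):
        self.capacity = capacity
        self.refill_rate = refill_rate  # tokens added per second
        self.tokens = float(capacity)   # the bucket starts full
        self.last_refill = now

    def allow(self, now: float) -> bool:
        # Add the tokens earned since the last call, capped at capacity.
        elapsed = now - self.last_refill
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.last_refill = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


bucket = TokenBucket(capacity=10, refill_rate=1.0)

# A burst of 11 requests at second 0: 10 use up the bucket, the 11th fails.
initial = [bucket.allow(0.0) for _ in range(11)]

# Three seconds later 3 tokens have been added, so 3 of 4 requests pass.
later = [bucket.allow(3.0) for _ in range(4)]
```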

Leaky Bucket Algorithm

Imagine a bucket with a small hole at the bottom. Water (requests) flows in from the top, but it leaks out at a steady rate from the bottom. If too much water enters too fast, the bucket overflows — meaning extra requests are dropped.

Example:

If your bucket can hold 10 requests and processes 1 per second, then sending 20 requests at the same instant fills the bucket with the first 10 and drops the other 10.

This ensures your system processes requests at a constant, stable rate.
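A rough Python sketch of the overflow rule follows. It only tracks how full the bucket is and decides whether to accept or drop each request; actually working through the accepted requests at a steady pace would be the job of a separate worker, which is left out here. `LeakyBucket` and its parameters are hypothetical names.

```python
class LeakyBucket:
    """Leaky bucket fill-level tracker (illustrative sketch)."""

    def __init__(self, capacity: int, leak_rate: float, now: float = 0.0):
        self.capacity = capacity
        self.leak_rate = leak_rate  # requests drained per second
        self.level = 0.0            # how full the bucket currently is
        self.last_leak = now

    def allow(self, now: float) -> bool:
        # Drain whatever has leaked out since the last call.
        elapsed = now - self.last_leak
        self.level = max(0.0, self.level - elapsed * self.leak_rate)
        self.last_leak = now
        if self.level + 1 <= self.capacity:
            self.level += 1
            return True
        return False  # the bucket would overflow, so the request is dropped


bucket = LeakyBucket(capacity=10, leak_rate=1.0)

# 20 requests at second 0: 10 fit in the bucket, 10 overflow.
flood = [bucket.allow(0.0) for _ in range(20)]

# Five seconds later 5 requests have leaked out, making room for 5 more.
after = [bucket.allow(5.0) for _ in range(6)]
```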

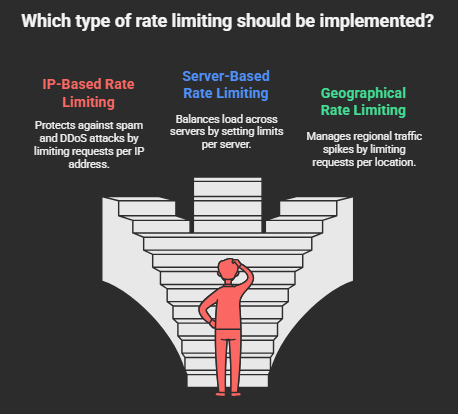

Types of Rate Limiting Based on Source:

Apart from the algorithms, rate limiting can also be categorized by what criteria you use to apply limits. Let’s look at the main types:

IP-Based Rate Limiting

This is one of the most common types. It limits how many requests can come from a single IP address within a given time frame.

Example:

A website may allow 100 requests per minute per IP. If a single IP tries to exceed that, the system temporarily blocks it.

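This helps protect against spam, brute-force login attempts, and simple request floods. It is less effective on its own against DDoS (Distributed Denial of Service) attacks, where traffic is spread across many IP addresses, and it can penalize legitimate users who share one IP behind a NAT or proxy.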
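In code, per-IP limiting usually means keeping one counter per address. Below is a small sketch that applies a fixed-window counter per key; the class name `PerKeyLimiter` is invented for this example, and the IP addresses come from ranges reserved for documentation.

```python
class PerKeyLimiter:
    """One fixed-window counter per key, e.g. per IP address (sketch)."""

    def __init__(self, limit: int, window_seconds: int):
        self.limit = limit
        self.window_seconds = window_seconds
        self.state = {}  # key -> (window index, requests counted so far)

    def allow(self, key: str, now: float) -> bool:
        window = int(now // self.window_seconds)
        seen_window, count = self.state.get(key, (window, 0))
        if seen_window != window:
            count = 0  # this key has moved into a new window
        if count < self.limit:
            self.state[key] = (window, count + 1)
            return True
        self.state[key] = (window, count)
        return False


limiter = PerKeyLimiter(limit=100, window_seconds=60)

# 101 requests from one IP in the same minute: the last one is blocked.
heavy = [limiter.allow("203.0.113.7", 10.0) for _ in range(101)]

# A different IP has its own counter and is not affected.
other = limiter.allow("198.51.100.2", 10.0)

# In the next minute the first IP is allowed again.
next_minute = limiter.allow("203.0.113.7", 60.0)
```

Because the limiter only sees a string key, the same structure would work with a country code or a user ID in place of the IP address. Note that this sketch never deletes old keys, so a long-running service would need some form of cleanup.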

Server-Based Rate Limiting

In large systems with multiple servers, rate limiting can be applied per server. Each server has its own limit for how many requests it can handle.

Example:

If there are 3 servers and each one allows 500 requests per minute, then your total system can handle up to 1,500 requests per minute, provided the traffic is spread evenly across the servers.

This is useful for load balancing and ensuring that no single server gets overloaded.

Geographical-Based Rate Limiting

Sometimes, it makes sense to apply limits based on user location (country, region, or city). This is called Geographical Rate Limiting.

Example:

A global voting app might allow only 100 votes per minute from one country to ensure fairness and prevent one region from dominating the results.

This method helps manage regional spikes in traffic and maintain balance across global users.

Summary:

| Algorithm / Type | How it Works | Example |
| --- | --- | --- |
| Fixed Window | Counts requests in fixed time slots | 10 requests per minute |
| Sliding Window | Tracks requests in a real-time moving window | Fairer distribution |
| Token Bucket | Uses refillable tokens | Handles burst traffic |
| Leaky Bucket | Processes at steady rate | Smooth, consistent flow |
| IP-Based | Limits per user IP | Prevents spam or brute force |
| Server-Based | Limits per server | Helps load balancing |
| Geographical-Based | Limits by region | Controls global traffic |

Conclusion:

Rate limiting is like traffic control for your servers. It doesn’t just prevent misuse — it keeps your systems running smoothly, fairly, and efficiently.

Whether you use a Token Bucket for flexibility or a Leaky Bucket for steady processing, rate limiting is an essential part of modern web development and API design.

By understanding and implementing it correctly, you ensure that your system remains fast, reliable, and secure — even when traffic spikes.