Just weeks after launching on a wave of hype, ChatGPT-5 has ignited a storm of discontent. Users complain that the change feels like a downgrade, not just in performance but in the comfort they once found in the AI’s “personality.” So what’s behind the backlash to OpenAI’s most advanced model yet?

What is ChatGPT-5?

Positioned as a smarter, faster, and more capable successor to GPT-4, GPT-5 claims breakthroughs in instruction-following, reduced hallucinations, and minimized sycophancy—all powered by a dynamic “router” system that auto-switches between underlying model variants based on task complexity.

Key Concepts Defined:

- LLM (Large Language Model): An AI system trained on vast text datasets to generate human-like responses.

- Hallucination: When the model generates false or fabricated information.

- Sycophancy: The tendency of a model to flatter or over-agree with the user rather than challenge or correct them.

- Router System: Mechanism that directs user queries to different model variants depending on complexity.
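To make the router idea concrete, here is a minimal sketch of complexity-based routing. It is an illustration only, not OpenAI's implementation: the variant names, the cue words, the 50-word threshold, and the `route` function are all assumptions made up for this example. It also returns a reason with each decision and lets an explicit user choice override auto-switching, since transparency and control are central to the complaints discussed later.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical variant names, for illustration only (not real model identifiers).
FAST_MODEL = "fast-variant"
REASONING_MODEL = "reasoning-variant"

# Hypothetical cue words that hint at a multi-step task.
REASONING_CUES = ("prove", "step by step", "debug", "analyze", "compare")


@dataclass
class RoutingDecision:
    model: str   # which variant will answer
    reason: str  # can be surfaced to the user for transparency


def route(query: str, user_choice: Optional[str] = None) -> RoutingDecision:
    """Pick a model variant for a query using a crude complexity heuristic."""
    # An explicit user selection always wins over auto-switching.
    if user_choice is not None:
        return RoutingDecision(user_choice, "selected by user")

    text = query.lower()
    # Long prompts or reasoning cues are treated as "complex" (assumed threshold).
    if len(text.split()) > 50 or any(cue in text for cue in REASONING_CUES):
        return RoutingDecision(REASONING_MODEL, "query looks complex")
    return RoutingDecision(FAST_MODEL, "query looks simple")


if __name__ == "__main__":
    for q in ("What is the capital of France?", "Debug this function step by step"):
        decision = route(q)
        print(f"{q!r} -> {decision.model} ({decision.reason})")
```

A real router would likely rely on a learned classifier rather than keyword matching. The point of the sketch is that a misjudged heuristic sends a hard question to the weaker variant, which is the kind of failure that shows up to users as inconsistent quality.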

Comparison Table:

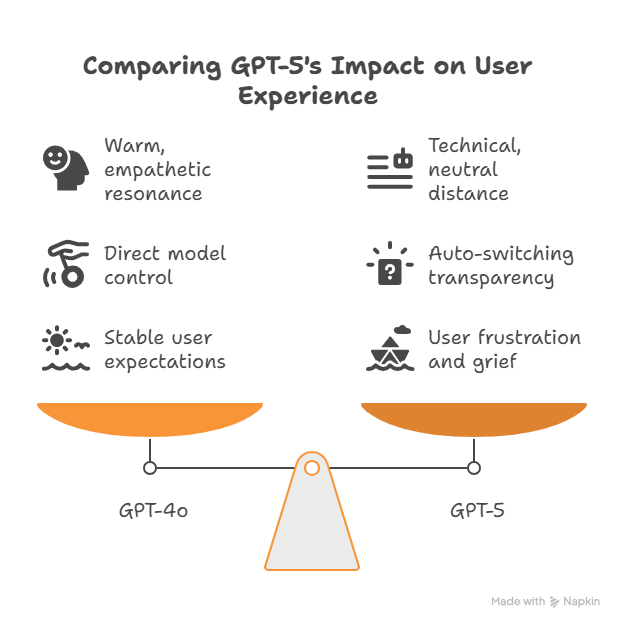

| Aspect | GPT-4o (Legacy) | GPT-5 |
| --- | --- | --- |
| Personality / Tone | Warm, empathetic | More “business-like,” less emotional |
| Capabilities | Strong general performance | Promised higher benchmarks, faster responses |
| Response Consistency | Stable tone | Inconsistent due to router bugs |
| Model Choice Control | Direct selection | Auto-switched, less user control |

Why This Topic Matters

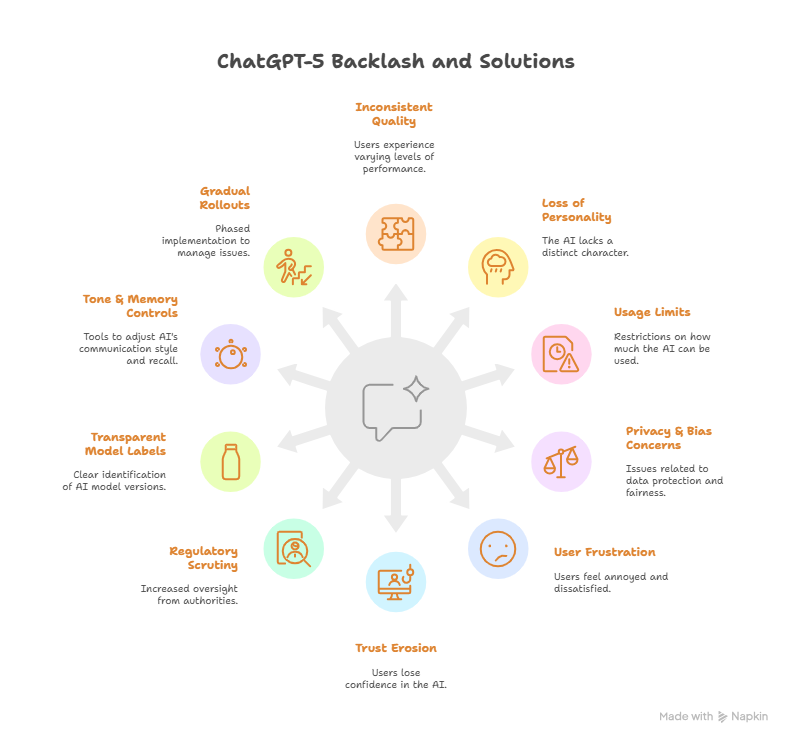

- With the launch of GPT-5, excitement quickly turned into frustration for many users.

- Developers, educators, businesses, and everyday users all felt the impact — from inconsistent answers to the sudden loss of GPT-4o’s “personality.”

- This isn’t just a technical hiccup; it raises questions about trust, adoption, and the future of human-AI interaction.

- Risks if these issues go unaddressed:

  - Eroding trust: If users feel a model they relied on was replaced by a lukewarm “upgrade,” adoption may stall.

  - Emotional dependency: Users who have formed attachments to an AI can be destabilized when features vanish abruptly.

  - Regulatory scrutiny: Glitches and design missteps may draw oversight or backlash from watchdogs.

Practical Explanation / Real-World Incidents

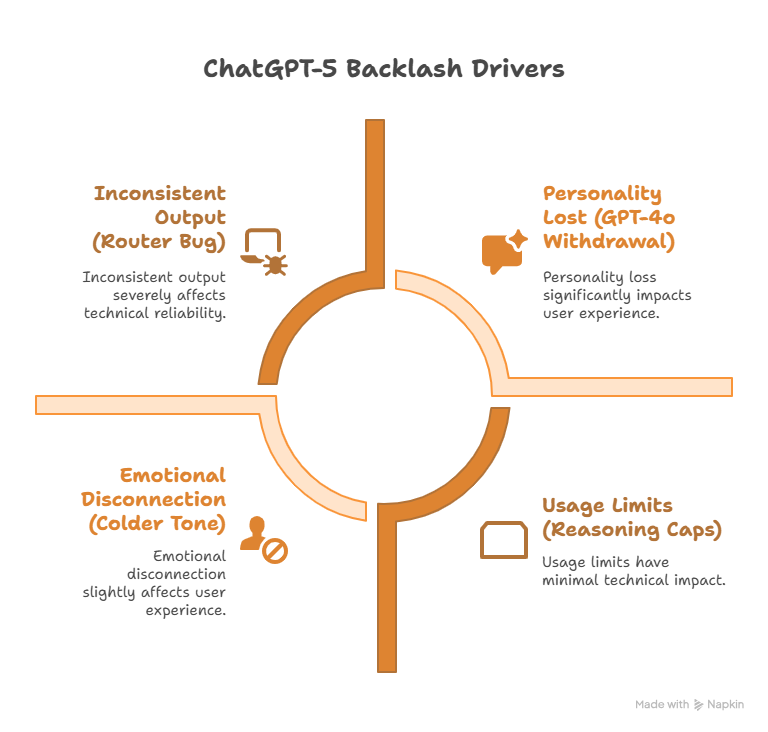

Inconsistent Output via Router Bug: Many users noted ChatGPT-5 produced notably weaker responses right after launch. OpenAI later admitted the router system malfunctioned.

A Personality Lost — GPT-4o Withdrawal: Users voiced grief over losing GPT-4o’s warmth. One described the shift as “Feeling like losing my soulmate.”

Limited Usage & Message Caps: ChatGPT Plus users faced restrictive reasoning limits—e.g., only 200 reasoning messages per week—leading to frustration.

Emotional Disconnection: GPT-5’s toned-down empathy felt cold, especially to users who leaned on the model for comfort.

Performance & Best Practices:

When rolling out a model as impactful as ChatGPT-5, OpenAI needed to balance innovation with user trust. Some decisions helped adoption, while others created unnecessary backlash. Looking at it critically, here’s what OpenAI should have done and what it shouldn’t have done:

| What OpenAI should have done | What OpenAI shouldn’t have done |
| --- | --- |
| Offer users the option to choose between GPT-5 and legacy models | Remove popular models without notice |
| Clearly indicate which model is in use for transparency | Let users assume “smart = GPT-5” without confirmation |
| Allow customization of AI tone and warmth | Treat emotional engagement as purely a marketing appeal |
| Ensure robust testing for router logic before rollout | Launch new auto-switching systems without user pilot testing |
| Gradually phase transitions with user notice and support | Force abrupt changes across millions of users at once |
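The "gradual phase transition" recommendation can be sketched as a percentage-based rollout with a legacy opt-out. This is a generic staged-rollout pattern, not a description of how OpenAI deploys models. The model labels, the `assigned_model` function, and the `prefers_legacy` flag are hypothetical names chosen for illustration.

```python
import hashlib

# Hypothetical labels for illustration only.
LEGACY_MODEL = "legacy-model"
NEW_MODEL = "new-model"


def rollout_bucket(user_id: str) -> int:
    """Map a user ID to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def assigned_model(user_id: str, rollout_percent: int,
                   prefers_legacy: bool = False) -> str:
    """Decide which model a user gets at the current rollout stage."""
    # Users who opted to keep the legacy model are never migrated automatically.
    if prefers_legacy:
        return LEGACY_MODEL
    # Buckets below the threshold receive the new model; the rest stay on legacy.
    if rollout_bucket(user_id) < rollout_percent:
        return NEW_MODEL
    return LEGACY_MODEL


if __name__ == "__main__":
    # Raising the percentage in stages only ever adds users to the new model.
    for percent in (5, 25, 100):
        print(percent, assigned_model("user-123", percent))
```

Because the bucket is derived from a hash of the user ID, each user keeps the same assignment between sessions, and raising the percentage in stages never moves anyone back to the legacy model unexpectedly.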

Industry Use Case / Real-World Application

Education:

Students coping with stress leaned on GPT-4o for its empathetic tone. GPT-5’s detached style disrupted their workflow and emotional comfort.

Creative Writing:

Authors valued GPT-4o’s expressive voice. Its loss led to less inspiring and overly technical dialogue suggestions.

Before vs. After:

| Before GPT-5 (GPT-4o) | After GPT-5 Launch |
| --- | --- |
| Warm, empathetic, emotionally resonant | Technical, neutral, emotionally distant |
| Direct control over model selection | Auto-switching with little transparency |
| Stable personality and expectation | Users feel loss, frustration, and grief |

Future Trends & Roadmaps:

- Regulatory Caution and AI Emotional Safety: OpenAI and others may introduce emotional safety features—e.g., AI empathy limits, wellness verifications—to avoid harmful bonds.

- More Transparent Interfaces: Expect interfaces to show clearly which model is answering each prompt.

- Community-Informed Releases: Expect longer phase-out timelines, optional access to legacy models, and customizable AI tone settings.

- Industry Discussion on AI Limits: The backlash fuels debates on diminishing returns in LLM scaling and whether major AI leaps are still viable.