A Secure & Scalable Oracle Connection Strategy in Databricks Using OJDBC and Azure Key Vault

Difference between Data Science and Machine Learning [2025]

Knowing the difference between data science and machine learning is important for businesses and professionals. This knowledge helps them stay ahead in the AI-driven world. Data science focuses on extracting meaningful insights from structured and unstructured data. Machine learning enables systems to learn from data and make predictions using algorithms without explicit programming. Data science […]

Delta Lake Speed-Up: Z-Order on Single vs. Multiple Columns

Introduction: As organizations ingest massive volumes of data into Delta Lake, query performance becomes critical, especially for dashboards, ad-hoc analysis, and downstream ETL jobs. One powerful technique to reduce query latency and improve data skipping is Z-Order optimization. This article covers: what Z-Ordering is; how to apply it to single vs. multiple columns […]

Data Visualization 2025: What It Is & Why It’s Important

Data visualization turns complex information into graphical representations such as infographics, maps, graphs, and charts, which matters more as the volume of data grows exponentially worldwide. It lets business people and researchers present data more effectively, and it improves communication, decision-making, and understanding. Data visualization tools enable companies and data analysts to identify patterns and correlations hidden […]

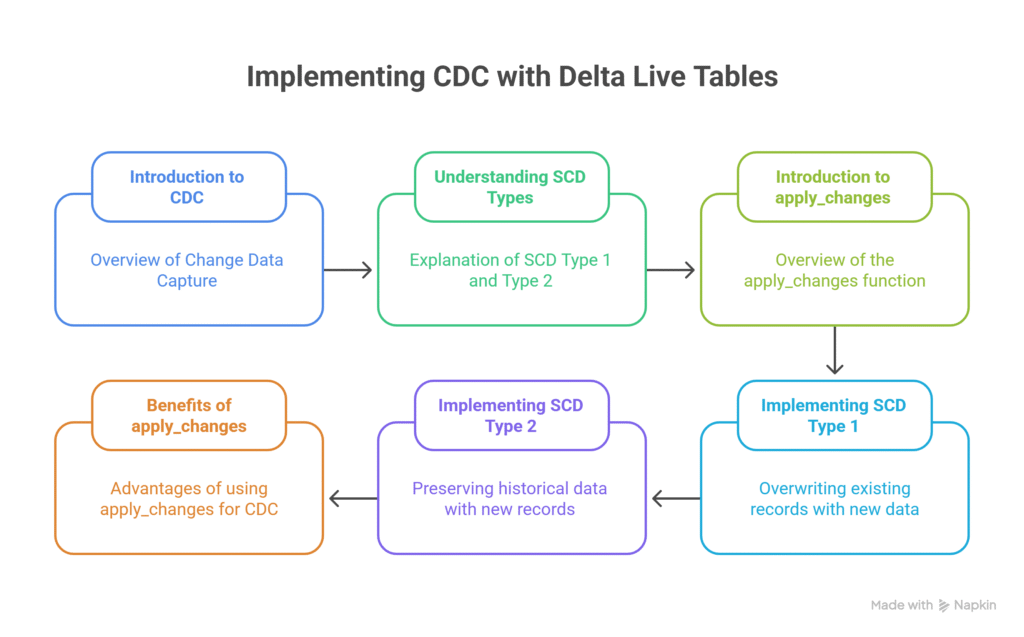

Accelerating Change Data Capture with apply changes in Delta Live Tables (DLT): Simplifying SCD Type 1 & 2 Implementation

Introduction: Change Data Capture (CDC) is a crucial component of modern data engineering, enabling efficient tracking and processing of data changes from source systems. Traditionally, implementing CDC required complex and error-prone merge logic. With Delta Live Tables (DLT) in Databricks, CDC can now be implemented in a declarative, scalable, and reliable manner using the apply_changes […]

Data Lakehouse 2025: What It Is & How Does It Work?

In today's world of fast-moving data, the data lakehouse is the most promising approach to modern data management. Organizations have to deal with vast amounts of structured and unstructured data. In 2025, the data lakehouse will be the only data platform. It will provide both flexibility and structure […]

A Unifying Tool For Deployment Of Databricks

Overview: Databricks Asset Bundles are a way to develop, package, version, and deploy Databricks workspace artifacts (such as notebooks, workflows, and libraries) using YAML-based configuration files. This allows for CI/CD integration and reproducible deployments across environments (dev/test/prod). What are Databricks Asset Bundles? Databricks Asset Bundles are an infrastructure-as-code (IaC) approach to managing your Databricks projects. […]

Accelerating Incremental Data Ingestion with Databricks Auto Loader and Delta Live Tables

Introduction: In today’s data-driven world, enterprises handle massive amounts of continuously arriving data from various sources. Traditional batch ETL jobs, while effective, often lead to inefficiencies, delays, and operational overhead. Databricks Auto Loader and Delta Live Tables (DLT) provide a powerful solution for incremental data ingestion and pipeline automation. Auto Loader simplifies real-time and batch […]

From Queries to Insights: Discovering Databricks AI/BI Genie

As data science advances, the need for intuitive, AI-powered analytics is more urgent than ever. Databricks has introduced a revolutionary business intelligence tool known as AI/BI Genie. In this research, a simple, user-experience-friendly interface to make complex data questions easier, deliver real-time insights, and integrate into the Databricks Lakehouse Platform […]