Introduction

In today’s fast-paced data-driven world, real-time data processing and change data capture (CDC) are crucial for businesses to make timely and informed decisions. Databricks, a powerful cloud-based analytics platform, combined with Debezium, an open-source CDC tool, enables seamless real-time data replication and transformation. This blog will explore Databricks and Debezium, detailing their integration and providing a hands-on guide to get started.

Understanding Databricks and Debezium

What is Databricks?

Databricks is a unified data analytics platform built on Apache Spark that facilitates big data processing, machine learning, and collaborative analytics. It enables users to work with structured and unstructured data, offering powerful ETL, SQL, and machine learning capabilities.

What is Debezium?

Debezium is an open-source distributed platform that captures and streams changes from databases in real-time. It is based on Apache Kafka and provides CDC capabilities for various relational databases, including MySQL, PostgreSQL, SQL Server, and more.

Why Use Databricks with Debezium?

The combination of Databricks and Debezium offers several advantages:

- Real-time Data Streaming: Continuous data replication from databases to Databricks.

- Scalability: Handles large volumes of data efficiently.

- Event-Driven Architectures: Enables event-driven applications and microservices.

- Data Lake Integration: Captures changes directly into Delta Lake for analytics.

- Cost Optimization: Reduces costs by eliminating batch jobs for incremental updates.

Setting Up Databricks with Debezium: Hands-On Guide

Step 1: Install and Configure Debezium

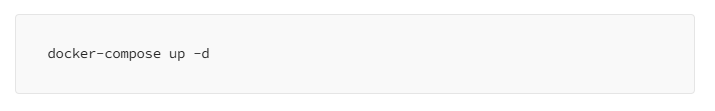

1. Set Up Apache Kafka and Zookeeper:

Ensure Kafka and Zookeeper are running properly.

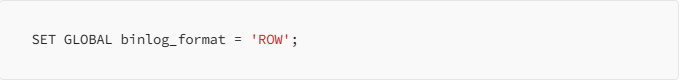

2. Configure Debezium Connector for MySQL:

Create a MySQL database and enable binary logging.

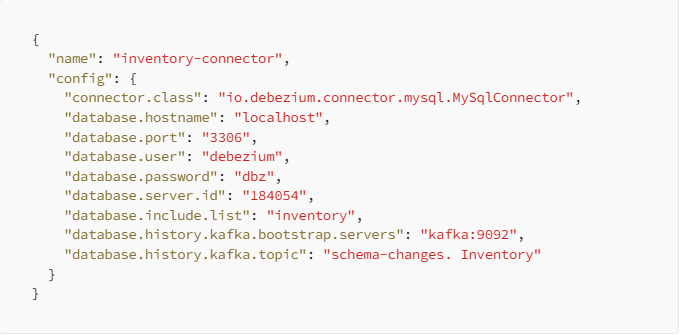

3. Deploy Debezium Connector:

Send this configuration to the Kafka Connect API.

Step 2: Configure Databricks

- Create a Databricks Cluster:

a. Log in to Databricks.

b. Navigate to Clusters and create a new cluster. - Install Required Libraries:

a. Installorg.apache.spark:spark-sql-kafka-0-10_2.12b. Install

debezium-connector-mysql - Consume Kafka Topics in Databricks Notebook:

Step 3: Simulating Sample Data Changes

To better understand how CDC works, insert sample data into the MySQL database and observe changes in Databricks.

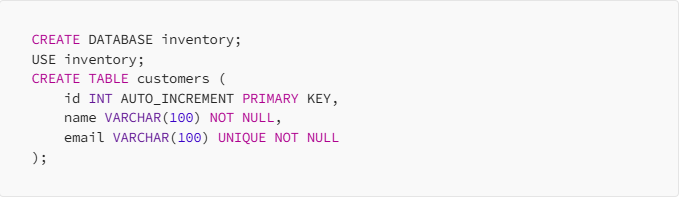

- Create a Sample Table in MySQL:

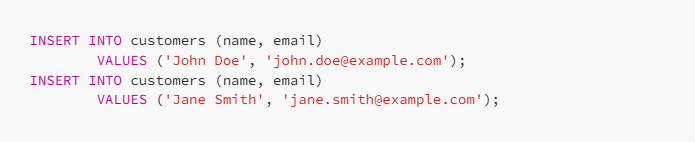

2. Insert Sample Records:

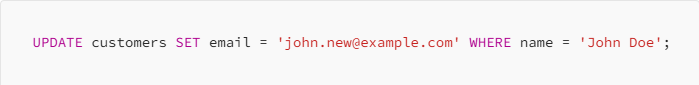

Update a Record:

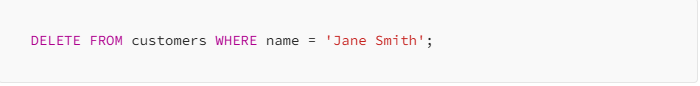

Delete a Record:

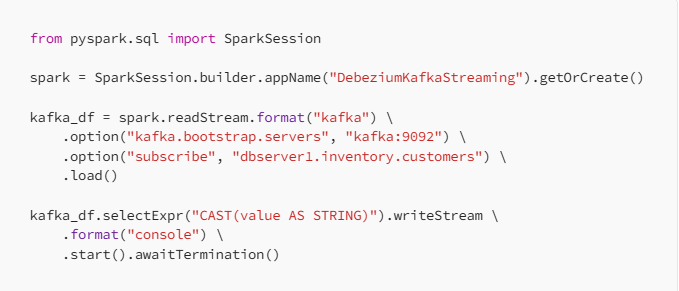

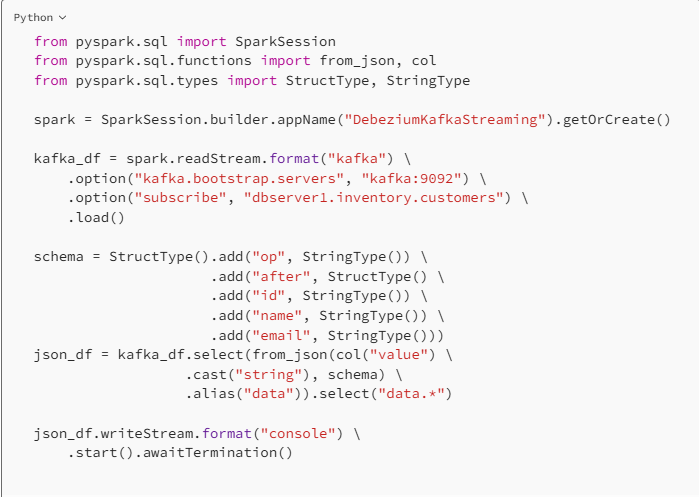

Step 4: Consume Kafka Topics in Databricks Notebook

- Read the CDC Stream in Databricks:

2. Store Processed Data in Delta Lake:

Conclusion

Integrating Debezium with Databricks enables real-time data ingestion, transformation, and analytics. This hands-on guide demonstrates how to capture CDC events, process them in Databricks, and store structured data in Delta Lake. By leveraging this setup, organizations can make real-time, data-driven decisions, enhancing their data analytics strategies.

Would you like to explore more advanced use cases such as integrating with machine learning models or building real-time dashboards? Let us know in the comments!