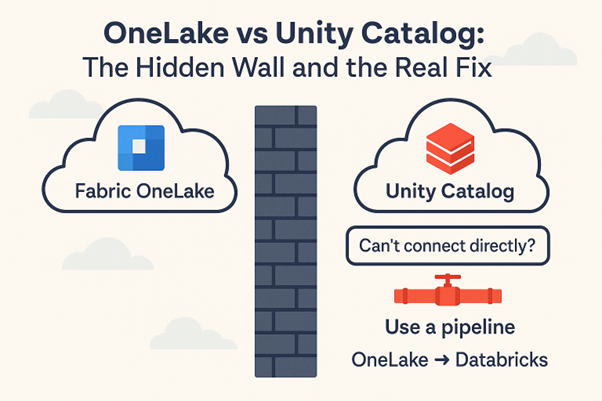

These days, many teams use Microsoft Fabric OneLake for unified storage and Databricks Unity Catalog (UC) for data governance and analytics. But here’s the catch: when you try to connect them directly, you hit a wall. You can’t simply register a Fabric Lakehouse as an external location in Databricks Unity Catalog like you would with a normal ADLS Gen2 folder.

The Issue — Different Control Planes:

OneLake is a managed data lake built on ADLS Gen2 but tightly controlled by the Fabric service layer. It handles shortcuts, governance, and workspace security inside Fabric — not directly in Azure Storage accounts you manage.

In contrast, Databricks Unity Catalog requires direct access to raw storage endpoints (dfs.core.windows.net) to control schemas, transactions, and permissions at the file level. These are two different control planes with no shared connector for governance.

Because Fabric and Databricks each have their own control plane and management layer, there is no direct way to connect OneLake to Unity Catalog.

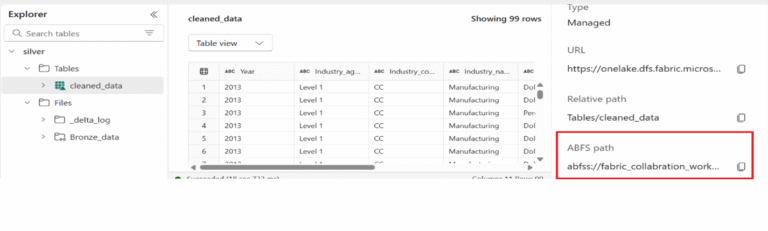

Fabric abfss paths can't be read directly in Databricks:

You can find a table's Fabric abfss path by right-clicking the table, then Properties -> Path.

But Databricks Spark does not recognize dfs.fabric.microsoft.com — it only knows how to read standard ADLS Gen2 (dfs.core.windows.net). Fabric hides the real storage account for security, so you can’t just mount or read it directly.
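To make the difference between the two endpoints concrete, here is a minimal sketch that checks which kind of abfss path you are holding before you hand it to Databricks. The function name `classify_abfss` and the workspace, lakehouse, and storage account names in the usage note are hypothetical; only the two host suffixes come from the text above.

```python
from urllib.parse import urlparse

# Host suffixes mentioned in this article.
ONELAKE_SUFFIX = "dfs.fabric.microsoft.com"
ADLS_SUFFIX = "dfs.core.windows.net"


def classify_abfss(uri: str) -> str:
    """Return 'onelake', 'adls_gen2', or 'unknown' for a storage URI."""
    parsed = urlparse(uri)
    if parsed.scheme not in ("abfs", "abfss"):
        return "unknown"
    # hostname drops the 'container@' part and lowercases the host.
    host = parsed.hostname or ""
    if host.endswith(ONELAKE_SUFFIX):
        return "onelake"
    if host.endswith(ADLS_SUFFIX):
        return "adls_gen2"
    return "unknown"
```

For example, `classify_abfss("abfss://myworkspace@onelake.dfs.fabric.microsoft.com/mylakehouse.Lakehouse/Tables/sales")` is classified as a OneLake path, while a path on `mystorageacct.dfs.core.windows.net` is classified as ADLS Gen2. A check like this is handy in a notebook to fail early with a clear message instead of a storage error.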

Any external tools to connect OneLake with Databricks?

Yes, several external tools can connect OneLake with Databricks. One of the best is CData Sync, which can set up automated, continuous replication of data from OneLake to Databricks. The drawback is that these tools are paid.

Learn more about CData: https://www.cdata.com/kb/tech/odata-sync-onelake.rst

Right now, there’s no free tool or connector to directly sync or register OneLake folders inside Unity Catalog. Some options like Azure Data Factory or Fabric Pipelines can help you copy the data out — but that’s still a physical move, not a live mount.

Mirroring:

Fabric does have a good option called Mirroring, but this only works the other way around — you can mirror data into Fabric from other external sources. But you can’t mirror OneLake out to Databricks Unity Catalog.

Practical Solution — Use a Pipeline to Migrate:

Since direct mounting from Fabric to Databricks isn't possible, the only way to migrate the data is with a pipeline. This is mostly my own assessment. It is not a recommended method either, but currently there is no other way to migrate.

Here, the idea is to use a Fabric Pipeline to move the OneLake data to ADLS Gen2 storage, and then read it from there into Databricks.

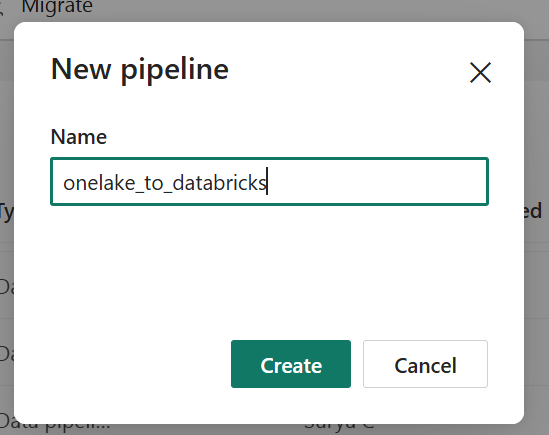

Step 1: Create a new Pipeline in Fabric

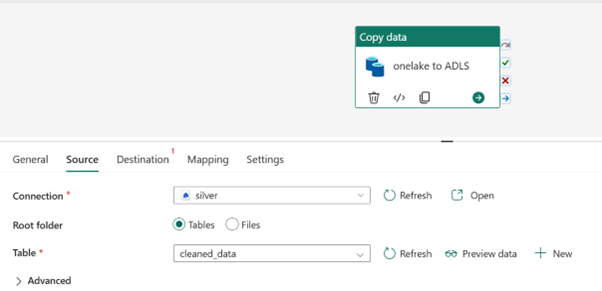

Step 2: Configure the table as the source

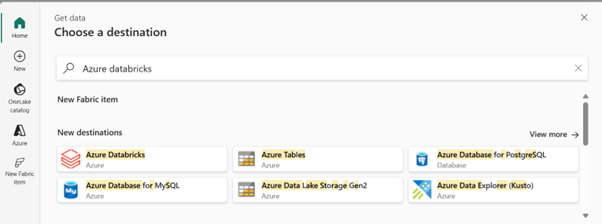

Step 3: In the destination, search for ADLS or Azure Databricks. In this demo, we are going to choose Databricks.

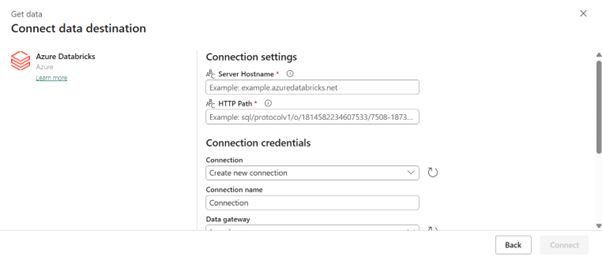

Step 4: To connect the Databricks catalog as the destination, enter the following details:

- Server Hostname

- HTTP Path

- Personal Access Token

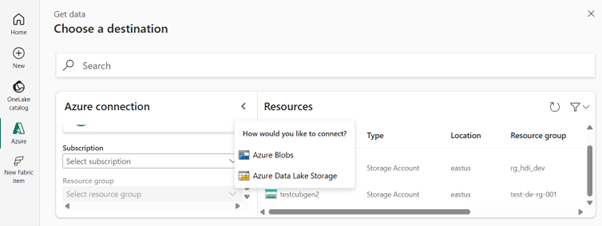
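Before pasting these three values into the pipeline, it can save time to sanity-check them from your own machine. The sketch below is only an illustration: the `DatabricksConnection` class and the `validate` and `open_connection` helpers are hypothetical names, the checks are simple heuristics, and `open_connection` assumes the `databricks-sql-connector` package is installed.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class DatabricksConnection:
    server_hostname: str  # e.g. the workspace host, without https://
    http_path: str        # copied from the SQL warehouse or cluster details
    access_token: str     # personal access token


def validate(conn: DatabricksConnection) -> List[str]:
    """Return a list of likely mistakes (heuristics only, not exhaustive)."""
    problems = []
    if conn.server_hostname.startswith(("http://", "https://")):
        problems.append("server_hostname should be a bare host, without a scheme")
    if "sql/" not in conn.http_path:
        problems.append("http_path does not look like a Databricks HTTP path")
    if not conn.access_token:
        problems.append("access_token is empty")
    return problems


def open_connection(conn: DatabricksConnection):
    """Open a SQL connection; needs `pip install databricks-sql-connector`."""
    from databricks import sql  # imported lazily so validate() works without it

    return sql.connect(
        server_hostname=conn.server_hostname,
        http_path=conn.http_path,
        access_token=conn.access_token,
    )
```

If `validate(...)` returns an empty list, you can call `open_connection(...)` and run a trivial query such as `SELECT 1` through a cursor to confirm that the token and HTTP path work before configuring the Fabric destination.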

Alternative Method: If a direct Databricks connection is not suitable for your project, you can set the destination to ADLS Gen2 instead, and then read the data from there in Databricks and register it in Unity Catalog.
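For the ADLS Gen2 route, the Databricks side could look roughly like the sketch below. It only builds the SQL statements, so you can review them before running anything. The helper names, the container, account, credential, and table names are all hypothetical, and it assumes the pipeline wrote Parquet files and that a Unity Catalog storage credential for the storage account already exists.

```python
ALLOWED_FORMATS = {"DELTA", "PARQUET", "CSV", "JSON"}


def adls_uri(container: str, account: str, path: str = "") -> str:
    """Build an abfss:// URI for a folder in an ADLS Gen2 account."""
    base = f"abfss://{container}@{account}.dfs.core.windows.net"
    clean = path.strip("/")
    return f"{base}/{clean}" if clean else base


def external_location_sql(name: str, url: str, credential: str) -> str:
    """SQL that registers the folder as a Unity Catalog external location."""
    return (
        f"CREATE EXTERNAL LOCATION IF NOT EXISTS `{name}` "
        f"URL '{url}' WITH (STORAGE CREDENTIAL `{credential}`)"
    )


def external_table_sql(full_name: str, url: str, fmt: str = "PARQUET") -> str:
    """SQL that creates an external table over the copied files."""
    fmt = fmt.upper()
    if fmt not in ALLOWED_FORMATS:
        raise ValueError(f"unsupported format: {fmt}")
    return f"CREATE TABLE IF NOT EXISTS {full_name} USING {fmt} LOCATION '{url}'"
```

In a Databricks notebook you would pass each generated statement to `spark.sql(...)`, for example first the external location for `adls_uri("landing", "mystorageacct", "fabric")` and then the table for the `sales` subfolder. Running them requires the matching Unity Catalog privileges on the metastore, catalog, and schema.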

Final Words: Build the Bridge, Don’t Fight the Wall:

When I tried this, I thought we could connect Fabric OneLake directly to Databricks Unity Catalog. But in real life, it doesn’t work like that. OneLake has its own security, and Databricks can’t see it directly.

So, the only way that works now is to copy the data from OneLake to an ADLS Gen2 storage account that Databricks can use. You can do this with a Fabric Data Pipeline. After that, Databricks Unity Catalog can read it like any other external data.

It’s not the perfect solution, but for now, it’s the only simple way to move the data from Fabric to Databricks.