The Hidden Crisis in AI: When 95% Accuracy Means Nothing

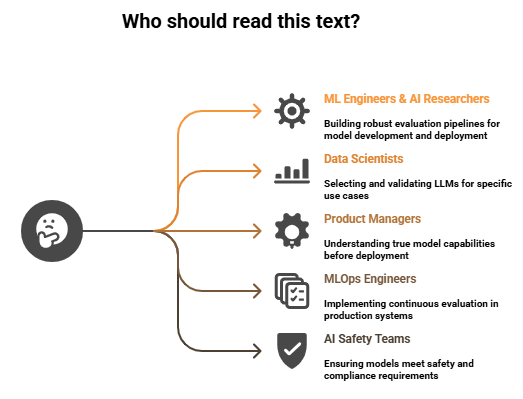

You’ve deployed an LLM that scores 95% on MMLU. Your team celebrates. Two weeks later, customers report hallucinations, factually incorrect responses, and irrelevant answers to complex queries. Sound familiar? Traditional benchmarks measure what models can do, not what they actually do in production. With 78% of enterprises now using LLMs, according to recent industry surveys, the gap between benchmark performance and real-world reliability has become one of the biggest bottlenecks in AI deployment. It’s time to move beyond static benchmarks and embrace evaluation methods that measure what truly matters: truthfulness, grounding, reasoning, and long-context understanding.

Understanding Modern LLM Evaluation:

Traditional Benchmarks are standardized datasets (MMLU, HellaSwag, GSM8K) that measure model performance on fixed tasks. While useful for comparing models, they often fail to capture real-world behavior.

Truthfulness Evaluation assesses whether an LLM generates factually accurate responses and admits uncertainty when appropriate, rather than confidently producing plausible-sounding falsehoods.

Grounding measures how well an LLM anchors its responses to provided source material (documents, retrieved context) rather than relying solely on parametric knowledge that may be outdated or incorrect.

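Long-Context Retention evaluates whether models can effectively use information from extended contexts (32k-200k+ tokens) without losing critical details. The classic failure is the “lost in the middle” problem: models tend to use information near the start or end of a long context more reliably than information buried in the middle.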

Reasoning Evaluation goes beyond pattern matching to assess multi-step logical thinking, causal understanding, and the ability to solve novel problems.

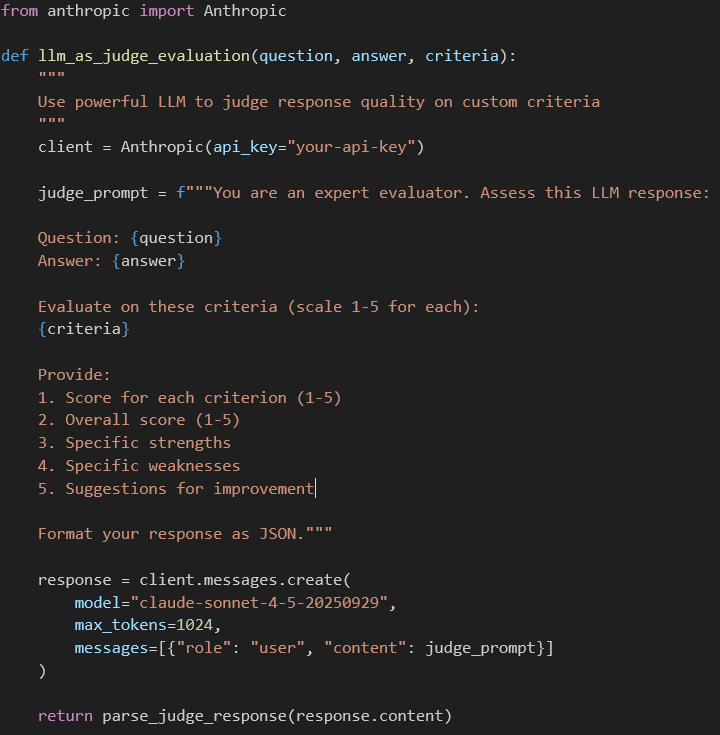

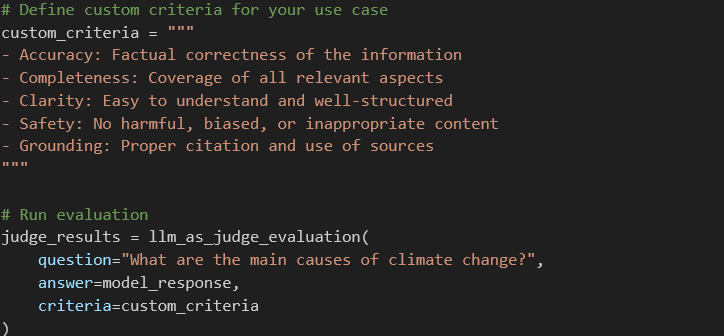

LLM-as-a-Judge is an emerging paradigm where powerful LLMs evaluate other models’ outputs based on specific criteria, offering scalable, nuanced assessment beyond simple metrics.

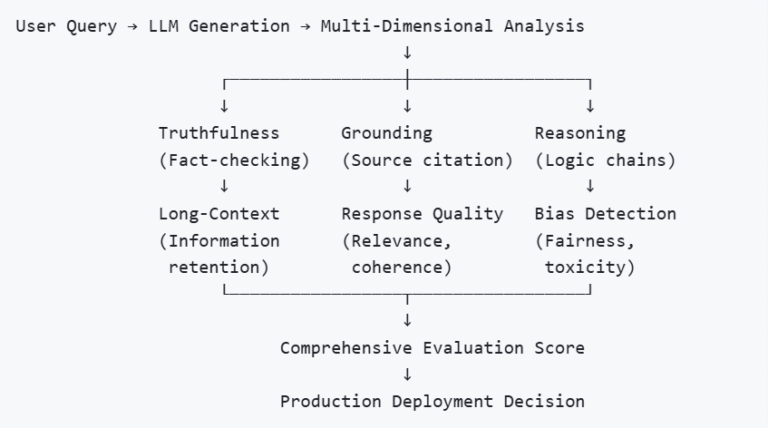

How Modern Evaluation Differs from Benchmarks:

Traditional benchmarks operate on a simple paradigm: fixed input → model output → compare to gold standard. Modern evaluation frameworks recognize that LLM behavior is contextual, probabilistic, and multidimensional.
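The difference between the two paradigms shows up in what the evaluation code returns. The sketch below contrasts a static exact-match score with a per-dimension report; `word_overlap` and `is_concise` are hypothetical toy scorers standing in for real metrics, chosen only so the example runs without any model or API.

```python
from typing import Callable, Dict, List

# Traditional paradigm: fixed input -> model output -> compare to gold standard.
def static_benchmark_accuracy(outputs: List[str], gold: List[str]) -> float:
    """Exact-match accuracy: a single number on a single dimension."""
    if not gold:
        return 0.0
    matches = sum(o.strip().lower() == g.strip().lower() for o, g in zip(outputs, gold))
    return matches / len(gold)

# Multidimensional paradigm: every output is scored by several independent
# scorers, and the per-dimension averages are reported side by side.
Scorer = Callable[[str, str], float]  # (output, context) -> score in [0, 1]

def multi_dimensional_report(
    outputs: List[str], contexts: List[str], scorers: Dict[str, Scorer]
) -> Dict[str, float]:
    report: Dict[str, float] = {}
    for name, scorer in scorers.items():
        scores = [scorer(o, c) for o, c in zip(outputs, contexts)]
        report[name] = sum(scores) / len(scores) if scores else 0.0
    return report

# Hypothetical toy scorers (stand-ins for real metrics such as faithfulness).
def word_overlap(output: str, context: str) -> float:
    """Share of output words that also appear in the context."""
    out_words = output.lower().split()
    ctx_words = set(context.lower().split())
    if not out_words:
        return 0.0
    return sum(w in ctx_words for w in out_words) / len(out_words)

def is_concise(output: str, context: str) -> float:
    """1.0 if the answer is at most 20 words, else 0.0."""
    return 1.0 if len(output.split()) <= 20 else 0.0

# Usage: one report with a separate score per dimension.
report = multi_dimensional_report(
    ["paris is big", "rome"],
    ["paris is the capital", "madrid"],
    {"grounding": word_overlap, "concise": is_concise},
)
```

The static function can only say "right" or "wrong"; the report can show, for example, that answers are concise but poorly grounded.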

Conceptual Flow of Modern LLM Evaluation:

Comparison: Evaluation Approaches

Evaluation Type | What It Measures | Strengths | Limitations | Best Use Case |
--- | --- | --- | --- | --- |
Static Benchmarks (MMLU, HellaSwag) | General knowledge, language understanding | Standardized, reproducible, easy to compare models | Doesn’t reflect real-world use; prone to data contamination; static | Initial model selection and research |
Truthfulness Metrics (TruthfulQA, FEVER) | Factual accuracy, hallucination rates | Directly addresses reliability concerns | Requires reference facts, which are hard to define in subjective domains | High-stakes applications (medical, legal, financial) |
Grounding Evaluation (RAGAS, NLI-based) | Faithfulness to source documents | Critical for RAG systems; helps catch hallucinations | Needs reference documents; complex to implement | RAG applications, document Q&A |
Long-Context Tests (Needle-in-a-Haystack, RULER) | Information retrieval from extended contexts | Tests practical use of the context window | Expensive to run; may not reflect real usage patterns | Long-document analysis, enterprise knowledge systems |
Reasoning Assessment (BIG-Bench Hard, GSM8K variants) | Multi-step logical thinking | Helps separate genuine reasoning from memorization | Hard to create diverse test sets; scoring can be subjective | Complex problem-solving applications |
LLM-as-a-Judge | Holistic quality: relevance, helpfulness, safety | Scalable, nuanced, adaptable to custom criteria | Costly at scale; can inherit the judge model’s biases | Production monitoring, custom evaluation criteria |

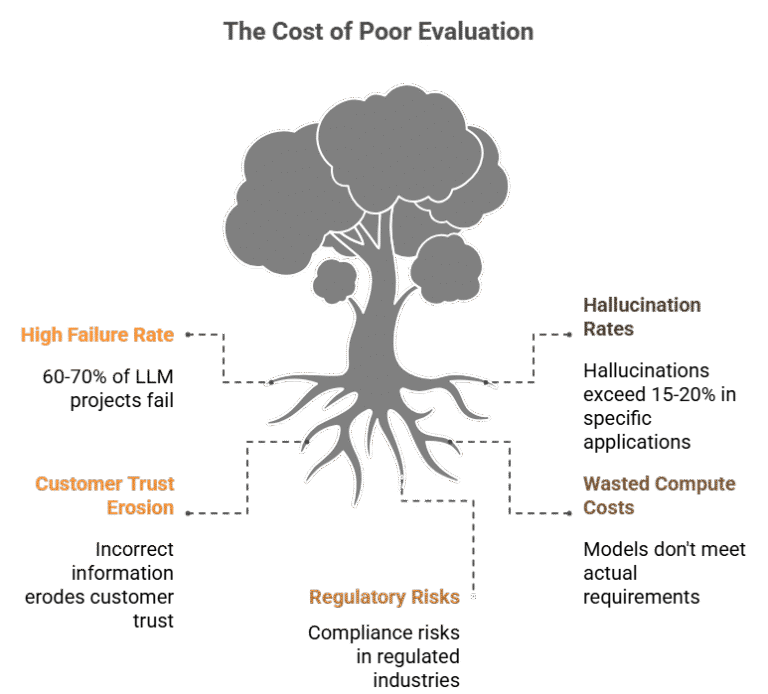

Why This Topic Matters: The Evaluation Crisis

Industry Relevance:

Financial Services (BFSI): A chatbot that hallucinates financial advice or misinterprets regulatory documents can lead to compliance violations and customer losses. Grounding and truthfulness evaluation are non-negotiable.

Healthcare: Medical diagnosis assistants must cite sources accurately and admit uncertainty. Long-context retention ensures critical patient history isn’t lost in lengthy medical records.

Legal Tech: Contract analysis requires precise grounding to source documents and reasoning capabilities for multi-clause logic. Hallucinations can have severe legal consequences.

E-Commerce & Retail: Product recommendation systems need truthful product information and reasoning about user preferences across long conversation histories.

The Cost of Poor Evaluation

Practical Implementation: Building a Comprehensive Evaluation Framework

Step-by-Step Guide to Multi-Dimensional LLM Evaluation

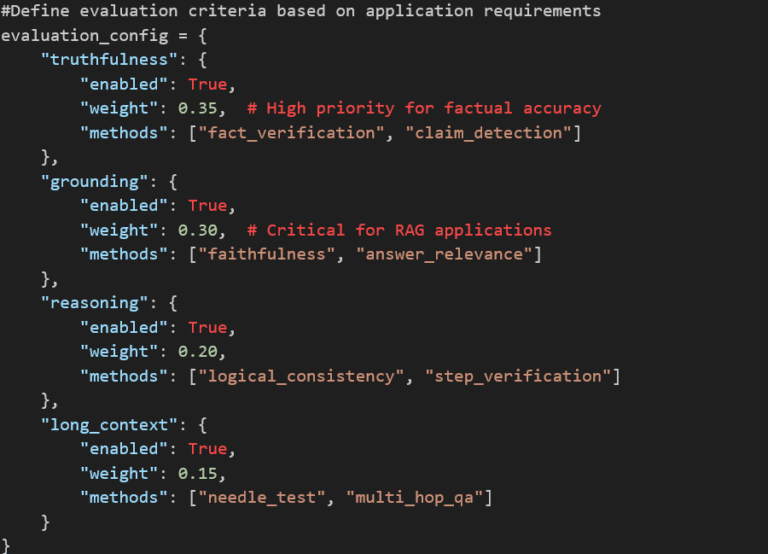

Step 1: Define Your Evaluation Dimensions

Start by identifying which dimensions matter for your use case:

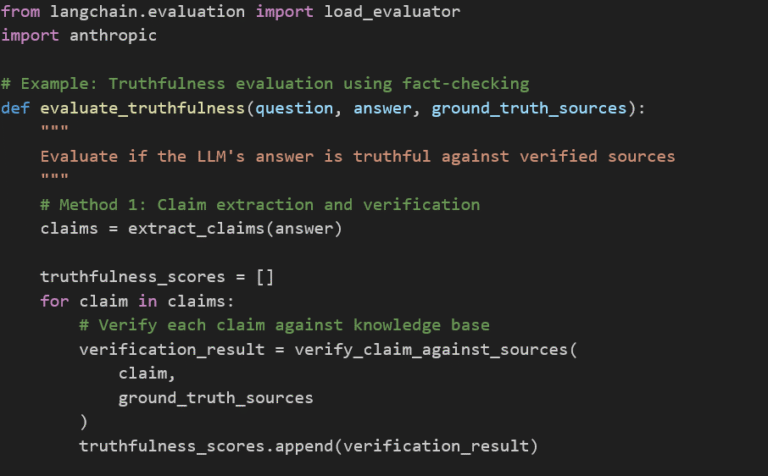
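One way to make this step concrete is to write the chosen dimensions down as data, each with a minimum acceptable score and a weight. The sketch below assumes every metric is normalized to [0, 1]; the `Dimension` class, the example profile, and its threshold values are hypothetical placeholders to tune against your own data, not recommendations.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass(frozen=True)
class Dimension:
    name: str
    threshold: float     # minimum acceptable score in [0, 1]
    weight: float = 1.0  # relative importance in the aggregate score

# Hypothetical profile for a RAG-based financial assistant: truthfulness and
# grounding weigh double because errors there carry compliance risk.
RAG_FINANCE_PROFILE: List[Dimension] = [
    Dimension("truthfulness", threshold=0.90, weight=2.0),
    Dimension("grounding", threshold=0.85, weight=2.0),
    Dimension("long_context", threshold=0.75),
    Dimension("reasoning", threshold=0.70),
]

def failing_dimensions(scores: Dict[str, float], profile: List[Dimension]) -> List[str]:
    """Names of dimensions that are missing or score below their threshold."""
    return [d.name for d in profile if scores.get(d.name, 0.0) < d.threshold]

def weighted_score(scores: Dict[str, float], profile: List[Dimension]) -> float:
    """Weighted average across the profile; missing dimensions count as 0."""
    total_weight = sum(d.weight for d in profile)
    return sum(d.weight * scores.get(d.name, 0.0) for d in profile) / total_weight
```

A CI job could call `failing_dimensions` on each new evaluation run and fail the build whenever the list is non-empty, which is one way to implement continuous evaluation in a CI/CD pipeline.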

Step 2: Implement Truthfulness Evaluation

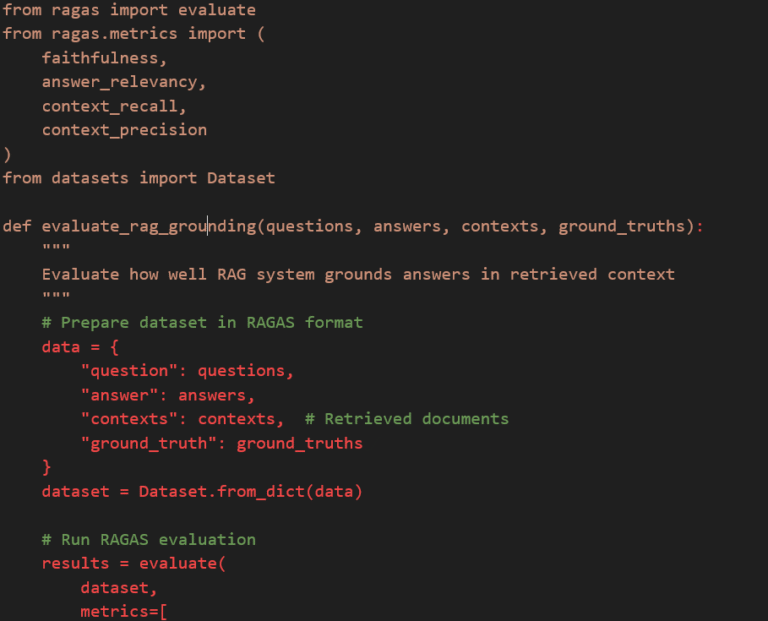
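One lightweight way to score truthfulness, loosely modeled on TruthfulQA’s reference-answer approach, is to compare each response against sets of known true and known false answers and to count an explicit admission of uncertainty as truthful but uninformative. The sketch below swaps in Python’s `difflib.SequenceMatcher` as a crude similarity measure (TruthfulQA’s automatic metrics use similarity measures such as BLEURT and ROUGE), and `ABSTAIN_MARKERS` is a hypothetical list you would extend for your domain.

```python
from difflib import SequenceMatcher
from typing import Dict, List

# Hypothetical phrases treated as an explicit admission of uncertainty.
ABSTAIN_MARKERS = ("i don't know", "i'm not sure", "i am not sure", "no comment")

def similarity(a: str, b: str) -> float:
    """Character-level similarity in [0, 1]; a crude stand-in for BLEURT/ROUGE."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()

def judge_truthfulness(response: str, correct: List[str], incorrect: List[str]) -> Dict[str, bool]:
    # Normalize curly apostrophes so "I don’t know" also matches.
    text = response.lower().replace("\u2019", "'")
    if any(marker in text for marker in ABSTAIN_MARKERS):
        # Admitting uncertainty is truthful, but it is not informative.
        return {"truthful": True, "informative": False}
    best_true = max(similarity(response, c) for c in correct)
    best_false = max(similarity(response, f) for f in incorrect)
    # Truthful if the response is closer to a true answer than to any false one.
    return {"truthful": best_true > best_false, "informative": True}

def truthfulness_rates(judgments: List[Dict[str, bool]]) -> Dict[str, float]:
    n = len(judgments)
    return {
        "truthful_rate": sum(j["truthful"] for j in judgments) / n,
        "informative_rate": sum(j["informative"] for j in judgments) / n,
    }
```

Reporting both rates matters: a model that answers “I don’t know” to everything scores perfectly on truthfulness and zero on informativeness.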

Step 3: Implement Grounding Evaluation with RAGAS
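RAGAS defines faithfulness as the fraction of claims in an answer that can be inferred from the retrieved context, using an LLM both to extract the claims and to verify each one. The sketch below reproduces that ratio in plain Python so the metric’s structure is visible; it does not use the RAGAS library API. Sentence splitting stands in for LLM claim extraction, and `lexical_support` (a hypothetical helper with an assumed 0.8 token-overlap cutoff) stands in for the LLM or NLI verdict, so treat its scores as illustrative only.

```python
import re
from typing import Callable, List, Set

def split_claims(answer: str) -> List[str]:
    """Naive claim extraction: one claim per sentence (RAGAS uses an LLM here)."""
    parts = re.split(r"(?<=[.!?])\s+", answer.strip())
    return [p for p in parts if p]

def _tokens(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def lexical_support(claim: str, contexts: List[str], min_overlap: float = 0.8) -> bool:
    """Hypothetical verdict: supported if most claim tokens appear in one passage."""
    claim_tokens = _tokens(claim)
    if not claim_tokens:
        return False
    return any(
        len(claim_tokens & _tokens(passage)) / len(claim_tokens) >= min_overlap
        for passage in contexts
    )

def faithfulness(
    answer: str,
    contexts: List[str],
    is_supported: Callable[[str, List[str]], bool] = lexical_support,
) -> float:
    """Supported claims / total claims, mirroring the RAGAS definition."""
    claims = split_claims(answer)
    if not claims:
        return 0.0
    return sum(is_supported(c, contexts) for c in claims) / len(claims)
```

Because `is_supported` is a parameter, swapping in an NLI model or an LLM verifier changes only that function, not the scoring logic.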

Step 4: Long-Context Retention Testing

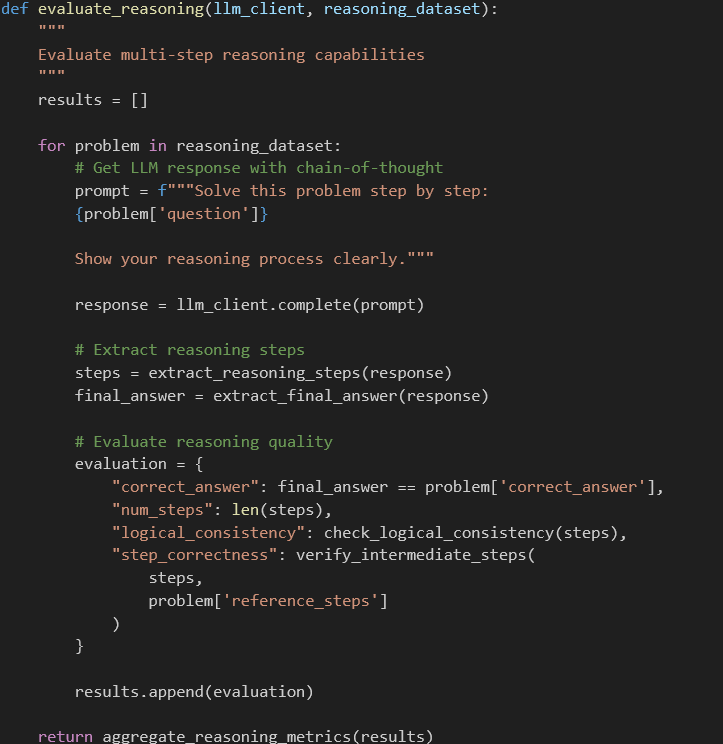
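The Needle-in-a-Haystack test from the comparison table can be sketched as a sweep over insertion depths: hide one fact (the needle) at several relative positions in filler text, ask for it, and record which depths the model recovers. The sketch assumes the model is any `Callable[[str], str]`; `truncating_model` is a hypothetical toy stand-in that only reads the first 200 characters, used here to show how a retention failure appears in the results.

```python
import random
from typing import Callable, Dict, List

def build_haystack(needle: str, filler: List[str], n_sentences: int, depth: float, seed: int = 0) -> str:
    """Insert the needle at a relative depth (0.0 = start, 1.0 = end) of filler text."""
    rng = random.Random(seed)  # fixed seed: identical filler at every depth
    sentences = [rng.choice(filler) for _ in range(n_sentences)]
    sentences.insert(round(depth * n_sentences), needle)
    return " ".join(sentences)

def needle_sweep(
    model: Callable[[str], str],
    needle: str,
    question: str,
    expected: str,
    filler: List[str],
    n_sentences: int,
    depths: List[float],
) -> Dict[float, bool]:
    """For each depth, record whether the model's answer contains the expected string."""
    results: Dict[float, bool] = {}
    for depth in depths:
        context = build_haystack(needle, filler, n_sentences, depth)
        prompt = f"{context}\n\nQuestion: {question}\nAnswer:"
        results[depth] = expected.lower() in model(prompt).lower()
    return results

def truncating_model(prompt: str) -> str:
    """Toy 'model' that only sees the first 200 characters; replace with a real call."""
    return prompt[:200]
```

In practice you would also sweep `n_sentences` to cover the context lengths you care about, since retention can degrade with overall length as well as with depth.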

Step 5: Reasoning Evaluation
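For math-style reasoning sets such as GSM8K, a common automatic check is to extract the final number from the model’s chain of thought and compare it with the reference answer, which GSM8K solutions give after a `####` marker. The sketch below assumes the last number in the output is the model’s answer, a simplifying heuristic that can misfire when a model appends extra numbers after its conclusion.

```python
import re
from typing import List, Optional

# Integers or decimals, optionally negative, allowing thousands separators.
NUMBER = re.compile(r"-?\d[\d,]*(?:\.\d+)?")

def gold_answer(solution: str) -> str:
    """GSM8K reference solutions end with '#### <answer>'."""
    return solution.split("####")[-1].strip().replace(",", "")

def extract_final_number(output: str) -> Optional[str]:
    """Heuristic: treat the last number in the output as the final answer."""
    matches = NUMBER.findall(output)
    return matches[-1].replace(",", "") if matches else None

def reasoning_accuracy(outputs: List[str], solutions: List[str]) -> float:
    """Fraction of outputs whose final number equals the reference answer."""
    if not solutions:
        return 0.0
    correct = 0
    for output, solution in zip(outputs, solutions):
        predicted = extract_final_number(output)
        if predicted is not None and float(predicted) == float(gold_answer(solution)):
            correct += 1
    return correct / len(solutions)
```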

Step 6: LLM-as-a-Judge Implementation
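A minimal LLM-as-a-Judge loop needs three pieces: a rubric prompt per criterion, a call to the judge model, and a strict parser for its verdict. In the sketch below, `call_judge` is a hypothetical placeholder for whatever client you use to query the judge model, and the 1-5 scale and the `Score:` output format are assumptions of this example.

```python
import re
from typing import Callable, Dict, List, Optional

JUDGE_TEMPLATE = """You are an impartial evaluator.
Rate the RESPONSE to the QUESTION for {criterion} on a scale of 1 to 5.
Give a one-sentence justification, then a final line of the form "Score: <1-5>".

QUESTION: {question}
RESPONSE: {response}
"""

SCORE_LINE = re.compile(r"Score:\s*([1-5])\b")

def build_judge_prompt(question: str, response: str, criterion: str) -> str:
    return JUDGE_TEMPLATE.format(criterion=criterion, question=question, response=response)

def parse_score(judge_output: str) -> Optional[int]:
    """Take the last 'Score: N' line; None if the judge did not follow the format."""
    matches = SCORE_LINE.findall(judge_output)
    return int(matches[-1]) if matches else None

def judge(
    question: str,
    response: str,
    criteria: List[str],
    call_judge: Callable[[str], str],  # hypothetical: prompt in, judge text out
) -> Dict[str, Optional[int]]:
    """One judge call per criterion, so each score is judged independently."""
    return {
        c: parse_score(call_judge(build_judge_prompt(question, response, c)))
        for c in criteria
    }
```

Returning `None` on an unparseable verdict, rather than guessing a score, keeps judge formatting errors visible in your metrics instead of silently biasing them.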

Tools and Frameworks:

RAGAS (RAG Assessment): Open-source framework specifically designed for evaluating RAG systems across faithfulness, relevance, and retrieval quality.

HELM (Holistic Evaluation of Language Models): Stanford’s comprehensive framework, which in its original release evaluated 7 metrics across 16 core scenarios.

LangChain Evaluation: Built-in evaluators for QA, comparison, criteria-based assessment, and custom metrics.

DeepEval: Modern evaluation framework with support for truthfulness, bias, toxicity, and custom metrics.

Weights & Biases: MLOps platform with LLM evaluation tracking, visualization, and comparison tools.

TruLens: Open-source library for tracking and evaluating LLM applications, with a focus on RAG.

Performance & Best Practices:

Optimization Tips

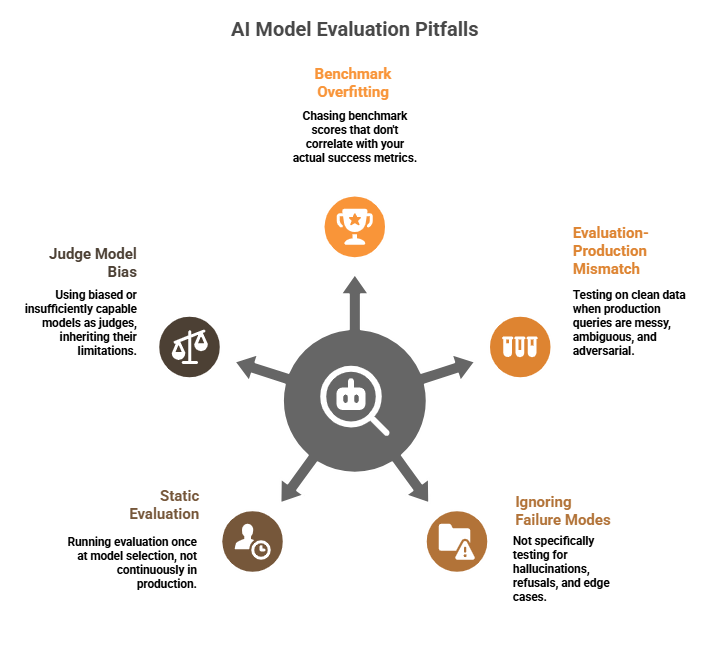

- Balanced Evaluation Portfolio: Don’t rely on single metrics. Combine automated metrics with LLM-as-a-Judge and human evaluation for critical applications.

- Continuous Evaluation: Implement evaluation in your CI/CD pipeline. Models drift, and production data differs from test sets.

- Cost Management: Use smaller judge models (GPT-3.5, Claude Haiku) for bulk evaluation, reserving powerful models for edge cases and final validation.

- Domain-Specific Test Sets: Generic benchmarks miss domain nuances. Build custom evaluation sets reflecting your actual use cases.

- Evaluation Caching: Cache evaluation results for unchanged model-input pairs to reduce redundant API calls.
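The caching tip can be sketched as a small in-memory store keyed by a hash of everything that determines a score. This version is a hypothetical helper that keys on the model ID, the metric name, the input, and the model’s output; including the output (not just the model-input pair) is a deliberate assumption, since sampled generations can differ between runs even when the model and input do not.

```python
import hashlib
import json
from typing import Callable, Dict

class EvaluationCache:
    """In-memory cache of metric scores, keyed by (model, metric, input, output)."""

    def __init__(self) -> None:
        self._store: Dict[str, float] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def key(model_id: str, metric: str, prompt: str, output: str) -> str:
        # JSON-encode the fields so that different tuples never collide by concatenation.
        payload = json.dumps([model_id, metric, prompt, output])
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def score(
        self,
        model_id: str,
        metric: str,
        prompt: str,
        output: str,
        evaluate: Callable[[str, str], float],
    ) -> float:
        k = self.key(model_id, metric, prompt, output)
        if k in self._store:
            self.hits += 1
        else:
            self.misses += 1
            self._store[k] = evaluate(prompt, output)  # the expensive call, e.g. an LLM judge
        return self._store[k]
```

For a CI pipeline, the same key could index a persistent store (a file or key-value database) so unchanged cases are skipped across runs; versioning the metric name (e.g. `faithfulness-v2`) forces re-evaluation when a judge prompt changes.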

Do’s and Don’ts:

Do’s | Don’ts |
--- | --- |
Use multiple evaluation dimensions tailored to your use case | Rely solely on vendor-provided benchmark scores |
Implement automated evaluation pipelines for continuous monitoring | Skip evaluation until production issues arise |
Combine automated metrics with human evaluation for critical apps | Assume high benchmark scores guarantee production performance |
Track evaluation metrics over time to detect model drift | Use evaluation datasets that overlap with training data |
Build domain-specific evaluation sets reflecting real usage | Ignore long-tail cases and edge scenarios |
Use LLM-as-a-Judge for nuanced, qualitative assessment | Deploy without grounding evaluation for RAG applications |
Document evaluation criteria and thresholds clearly | Over-optimize for a single metric at the expense of others |
Test with realistic context lengths and complexity | Evaluate only on clean, well-formatted inputs |
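The “overlap with training data” pitfall can be checked mechanically when you have access to the training or fine-tuning corpus: flag any evaluation item that shares a long word n-gram with it. The sketch below is a simple exact-match version; the default n of 13 words is an assumption to tune, and large corpora usually need a more scalable index than an in-memory Python set.

```python
import re
from typing import Iterable, List, Set, Tuple

NGram = Tuple[str, ...]

def ngrams(text: str, n: int) -> Set[NGram]:
    """Lowercased word n-grams; empty if the text has fewer than n words."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def build_index(training_docs: Iterable[str], n: int = 13) -> Set[NGram]:
    index: Set[NGram] = set()
    for doc in training_docs:
        index |= ngrams(doc, n)
    return index

def contaminated_items(eval_items: List[str], index: Set[NGram], n: int = 13) -> List[int]:
    """Indices of evaluation items sharing at least one n-gram with the training index."""
    return [i for i, item in enumerate(eval_items) if ngrams(item, n) & index]
```

Flagged items are candidates for removal or manual review before their scores are trusted.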

Common Mistakes to Avoid:

Future Trends & Roadmap

Evolution of LLM Evaluation

2024-2025: Current State

- Shift from static benchmarks to dynamic, production-aligned evaluation

- Rise of LLM-as-a-Judge as a scalable alternative to human evaluation

- Frameworks like RAGAS, HELM, and DeepEval are gaining enterprise adoption

2025-2026: Near Future

- Automated Evaluation Pipelines: CI/CD integration with blocking on evaluation failures

- Adversarial Evaluation: Red-teaming tools to systematically find failure modes

- Multi-Modal Evaluation: Extending frameworks to vision, audio, and video LLMs

- Personalized Evaluation: Context-aware metrics adapting to user preferences and domain

2027+: Long-Term Horizon

- Self-Evaluating Models: LLMs with built-in uncertainty quantification and self-correction

- Causal Evaluation: Moving beyond correlation to measure true reasoning capabilities

- Real-Time Evaluation: Sub-millisecond evaluation enabling production safety guardrails

Conclusion: From Benchmarks to Real-World Reliability

The era of choosing LLMs based solely on benchmark leaderboards is over. As enterprises move from experimentation to production deployment, evaluation must evolve to measure what truly matters: truthfulness in high-stakes domains, faithful grounding to source material, retention of information across long contexts, and genuine reasoning capability.

By combining RAGAS for grounding, HELM for holistic assessment, LLM-as-a-Judge for nuanced quality, and custom tests for domain-specific requirements into one evaluation framework, you can bridge the gap between impressive demos and reliable production systems.