Microsoft Fabric: An Overview

The world of data analytics is evolving faster than ever. As organizations deal with growing volumes of structured and unstructured data, the need for a unified, scalable, and intelligent analytics platform has become critical. Microsoft Fabric is a response to this challenge: a comprehensive, end-to-end analytics solution that integrates data engineering, data science, real-time analytics, and […]

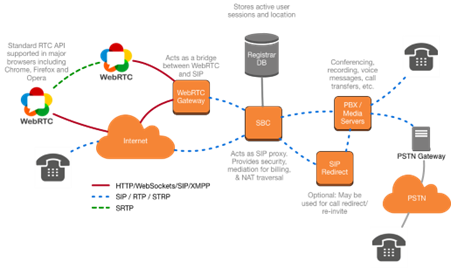

Understanding WebRTC: The Technology Powering Real-Time Communication on the Web

In today’s hyperconnected world, seamless real-time communication has become a fundamental part of our daily digital experiences. Whether we are participating in a video conference, playing an online multiplayer game, chatting with customer support, or collaborating remotely, the ability to exchange data instantly is essential. At the core of this real-time revolution lies WebRTC (Web […]

Power BI in a Lakehouse World — Microsoft Fabric DirectLake Deep Dive

Modern analytics isn’t just about dashboards; it’s about speed, volume, and freshness. With more data flowing in (sensor data, IoT, streaming logs, huge transactional systems), organisations want fast interactive visuals, up-to-date (near real-time) data, and a scalable architecture without massive refresh times. Historically, in Microsoft Power BI, you’ve had trade-offs: Import Mode → blazing fast, but data […]

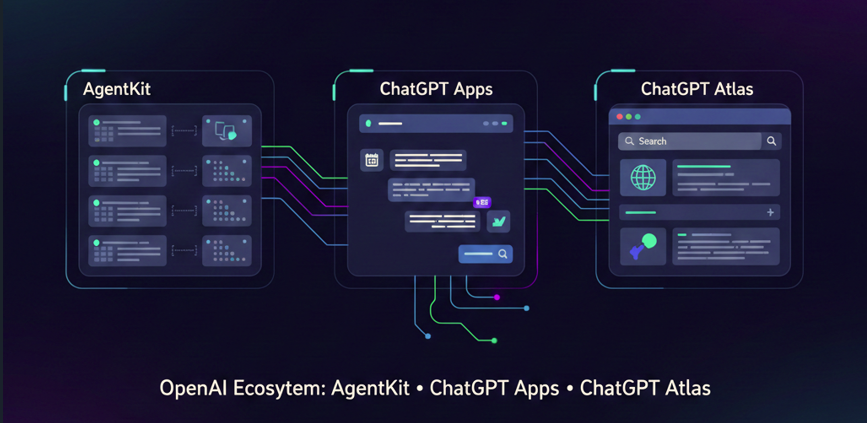

OpenAI’s Triple Revolution: AgentKit, ChatGPT Apps, and Atlas Browser Transform AI Development

What if you could build enterprise-grade AI agents in under eight minutes instead of eight months? At OpenAI’s DevDay 2025, CEO Sam Altman’s keynote made this a reality live on stage. OpenAI’s Christina Huang built an entire AI workflow and two AI agents in under eight minutes during the keynote. But that was just the opening […]

Building Your First Workflow in Databricks: A Step-by-Step Guide to Parameters, Triggers, and More

Have you ever wanted your data pipelines to run like clockwork, without manual intervention, and with full control over when and how they run? With Databricks Workflows, we can seamlessly combine data engineering, analytics, and machine learning in one place, with orchestration tightly integrated into the Lakehouse. Unlike Azure Data Factory (ADF), they eliminate the need for […]

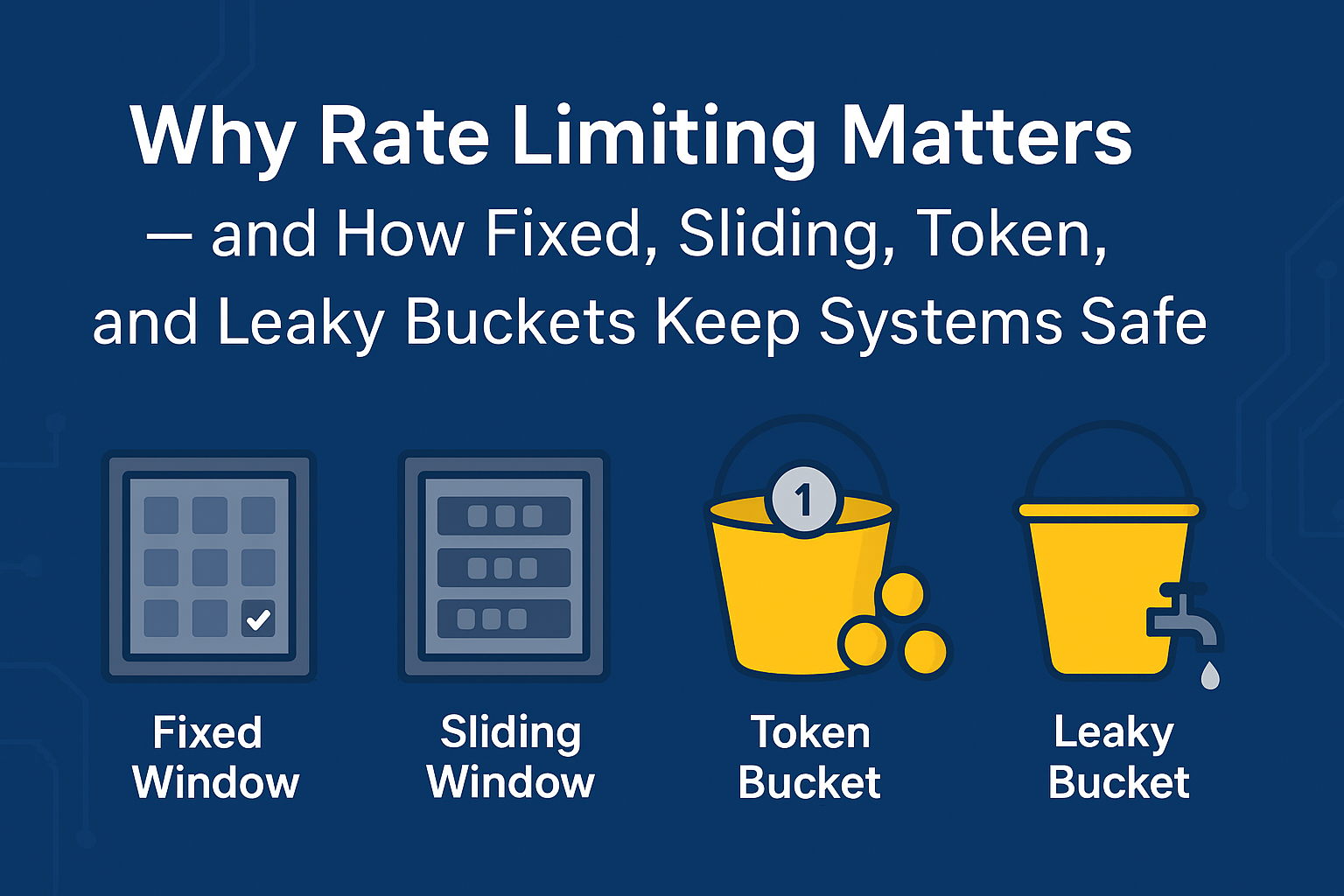

Why Rate Limiting Matters – and How Fixed, Sliding, Token, and Leaky Buckets Keep Systems Safe

In today’s digital world, where thousands of users access the same application or website at the same time, managing traffic is a big challenge. Imagine what would happen if every user tried to send hundreds of requests per second — your server might slow down or even crash! That’s where Rate Limiting comes in. It’s […]

Databricks AI/BI Dashboards: Transforming Data into Intelligent Insights

In a world driven by data, organizations require more than static reports — they need real-time, intelligent visual analytics that guide decisions dynamically. Databricks AI/BI (Artificial Intelligence for Business Intelligence) Dashboards redefine this potential by combining AI-driven insights, intuitive interactivity, and scalable architecture on a single platform. AI/BI dashboards unify data modeling, visualization, and AI […]

Top 5 Mistakes to Avoid When Working with Date Tables in Power BI (With Real-Time Scenarios)

Working with dates is at the heart of most business reporting and analytics. Whether you’re tracking sales over time, comparing month-over-month performance, or calculating year-to-date metrics, you’re dealing with time intelligence—and for that, a robust and well-structured Date Table is essential. However, many Power BI developers (even experienced ones) unintentionally make mistakes when implementing or […]

Automated Schema Drift Detection in Databricks: A Scalable and Configurable Approach

In modern data engineering, complex pipelines constantly process vast amounts of information from diverse sources. But one silent disruptor can destabilize everything—schema drift. When source systems unexpectedly add, rename, or change fields, these unnoticed shifts can break pipelines, corrupt models, and produce inaccurate reports. This blog post simplifies schema drift and shows how to detect […]

From Silent Apps to Smart Alerts – Making Push Notifications in Flutter Easy with Firebase

In today’s mobile-driven world, user engagement and retention are major challenges. No matter how well an app is designed, users tend to lose interest if they aren’t reminded of updates, offers, or important actions. For example: A food delivery app might need to alert users when their order is picked up or delivered. A fitness […]