- This document serves as a practical guide to understanding and using LangChain Agents in real-world applications. It explores what LangChain Agents are, how they work, and the challenges associated with deploying them in production. You’ll also find practical design patterns, best practices, and case studies to help you build more stable and intelligent agent-based systems.

- Building intelligent applications with language models requires more than just a prompt — and LangChain Agents provide a powerful abstraction for tool-using LLMs. However, their dynamic nature introduces new risks in production environments. This guide offers a clear, hands-on explanation of LangChain Agents, their internal reasoning flow, stability considerations, and how to make them reliable at scale.

LangChain Agents: Brilliant but Brittle?

- LangChain Agents promise a bold new era of AI, in which models reason about a task, decide which tool to call, and act on the result with little hand-written control flow.

But despite their flexibility, many teams are discovering that agents are not quite production-ready. So, what makes them unstable, and is there a way to fix it?

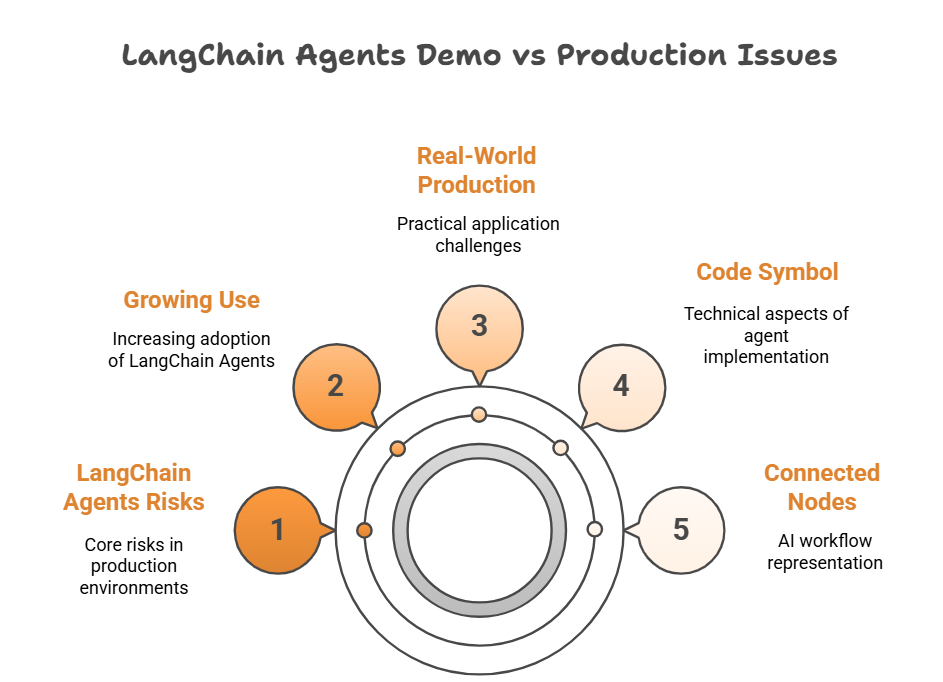

Why This Topic Matters

- With the rise of RAG, LLM-powered tools, and smart assistants, agents are now at the forefront.

- Many assume LangChain Agents are plug-and-play for production — they aren’t.

- Instability leads to:

  - Failures in enterprise deployments

  - High cost overruns

  - Unexpected user-facing bugs

- Understanding where and why they fail is crucial for anyone working in AI systems today.

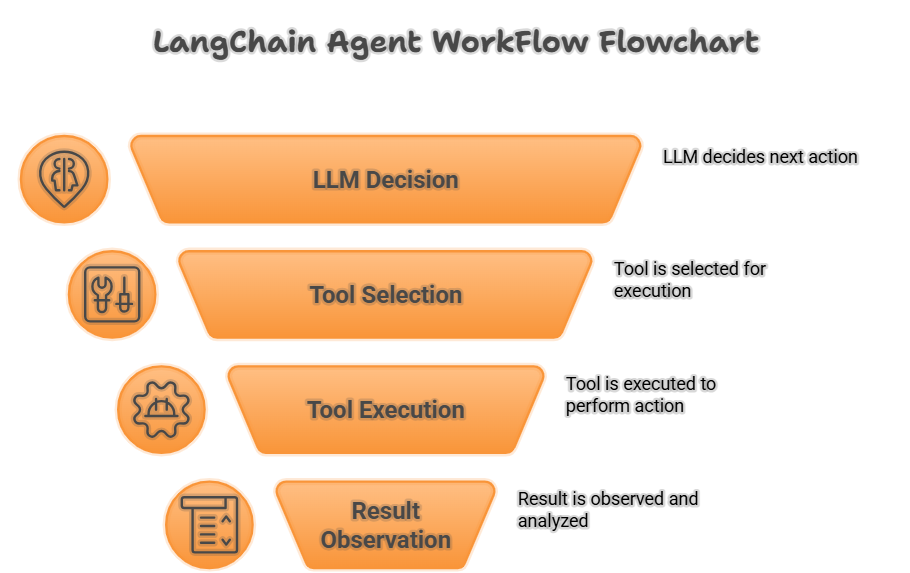

How LangChain Agents Think: The Core Concepts

- LangChain Agents = LLMs + Tools + a Reasoning Loop

- Unlike static chains, agents operate dynamically by:

  - Thinking – evaluating what action to take next

  - Acting – executing a chosen tool or function

  - Observing – interpreting the result before deciding the next move

  - Repeating this loop until the task is complete

- This design enables advanced capabilities like:

  - Multi-step problem solving

  - Accessing real-time or external information

  - Adapting to evolving inputs or context

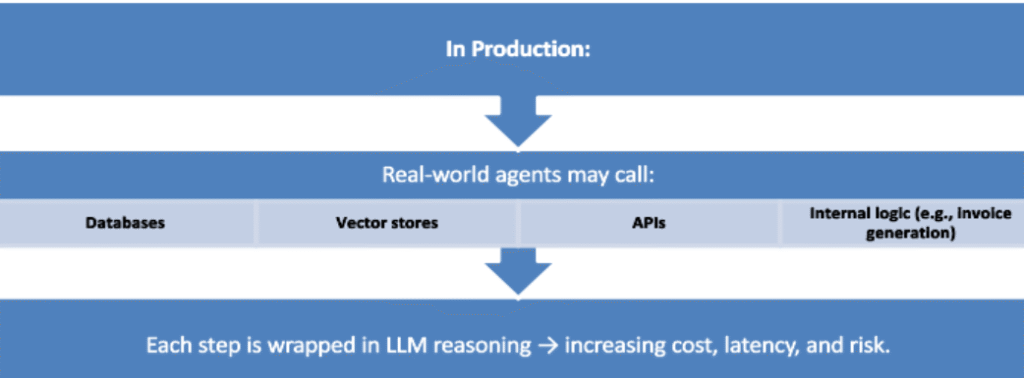

However, this flexibility introduces greater complexity — and can lead to unpredictable behavior in production environments.

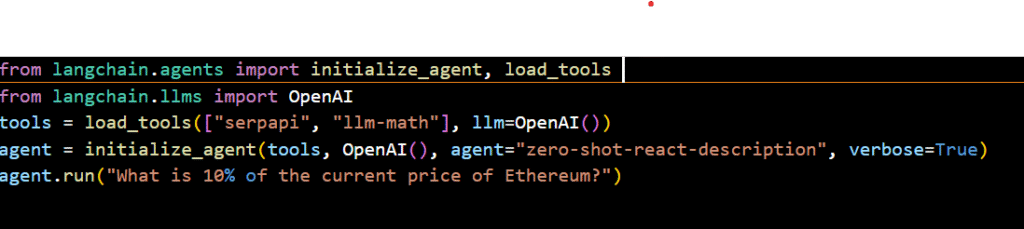

From Concept to Code: LangChain Agent Implementation
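
The think, act, observe loop described earlier can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not LangChain's actual implementation: `run_agent`, `scripted_policy`, and the `order_status` tool are hypothetical names, and the "policy" function stands in for the LLM call so the example runs without any API key.

```python
from typing import Callable, Dict, List

# Hypothetical stand-in for the LLM: it receives the question plus all
# observations so far and returns a decision dict, either
#   {"action": "call", "tool": <name>, "input": <str>}  or
#   {"action": "finish", "answer": <str>}
Policy = Callable[[str, List[str]], dict]


def run_agent(question: str, policy: Policy,
              tools: Dict[str, Callable[[str], str]],
              max_steps: int = 5) -> str:
    """Run a bounded think -> act -> observe loop."""
    observations: List[str] = []
    for _ in range(max_steps):
        decision = policy(question, observations)       # Think
        if decision["action"] == "finish":
            return decision["answer"]
        tool = tools.get(decision["tool"])
        if tool is None:
            # The model named a tool that does not exist: feed the error
            # back as an observation instead of crashing.
            observations.append(f"error: unknown tool {decision['tool']!r}")
            continue
        observations.append(tool(decision["input"]))    # Act, then Observe
    return "stopped: step limit reached"


def scripted_policy(question: str, observations: List[str]) -> dict:
    """Toy policy: look up the order once, then answer."""
    if not observations:
        return {"action": "call", "tool": "order_status", "input": "A123"}
    return {"action": "finish", "answer": f"Your order is {observations[-1]}."}


tools = {"order_status": lambda order_id: "shipped"}
print(run_agent("Where is order A123?", scripted_policy, tools))
```

The `max_steps` cap is the important design choice here: because the model decides when to stop, the loop needs a hard upper bound that does not depend on the model's cooperation.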

Optimizing LangChain Agents: Performance & Best Practices

| Instability challenge | Why it happens |
| --- | --- |
| Hallucination | The LLM invents wrong tool names or inputs |
| Latency | Each task chains multiple LLM calls and tool steps |
| Cost | Every reasoning step consumes tokens |
| Debuggability | Agents may follow a different path on every run |
| Error handling | Poor defaults (no retries, no validation) |
| Security | Prompt injection vulnerabilities |

Best Practices:

- Use LangGraph to make the control flow explicit as a graph of nodes and edges.

- Add input/output validation around tools.

- Limit toolset exposure to the agent.

- Implement timeouts and retry limits.

- Add logging and callbacks to observe each agent step.
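
The timeout, retry-limit, and logging practices above can be combined in one small wrapper around each tool call. The sketch below uses only the Python standard library rather than any LangChain API; `call_tool` and `ToolError` are hypothetical names chosen for illustration, and the default limits are arbitrary.

```python
import logging
import time
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout

logger = logging.getLogger("agent.tools")


class ToolError(Exception):
    """Raised when a tool still fails after the last allowed attempt."""


def call_tool(tool, arg, *, max_retries=2, timeout_s=5.0, backoff_s=0.0):
    """Call tool(arg) with a per-attempt timeout and a bounded retry count."""
    name = getattr(tool, "__name__", repr(tool))
    attempts = max_retries + 1
    last_error = None
    for attempt in range(1, attempts + 1):
        pool = ThreadPoolExecutor(max_workers=1)
        try:
            result = pool.submit(tool, arg).result(timeout=timeout_s)
            logger.info("tool=%s attempt=%d status=ok", name, attempt)
            return result
        except FutureTimeout:
            last_error = TimeoutError(f"{name} exceeded {timeout_s}s")
            logger.warning("tool=%s attempt=%d status=timeout", name, attempt)
        except Exception as exc:
            last_error = exc
            logger.warning("tool=%s attempt=%d status=error err=%r",
                           name, attempt, exc)
        finally:
            # Do not block on a hung call. Note that the worker thread is
            # abandoned, not killed, so the tool itself should also time out.
            pool.shutdown(wait=False)
        if attempt < attempts:
            time.sleep(backoff_s * attempt)  # simple linear backoff
    raise ToolError(f"{name} failed after {attempts} attempts") from last_error
```

Every attempt emits one log line with the tool name, attempt number, and outcome, which is usually enough to reconstruct which path the agent took on a given run.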

LangChain Agents in the Real World: Industry Use Cases

Case: Building a Customer Support Agent

Goal: Allow the agent to look up order information, track shipments, and respond to customer queries.

Observed Issues:

- The agent misinterpreted “refund” as “cancel” and called the wrong API.

- Latency ranged from 6s to 35s, depending on the task.

- Tool inputs were sometimes malformed, which caused hard failures.

Solution:

- Switched to LangGraph with manual control flow.

- Added schema checks using Pydantic.

- Reduced the agent's role to “ask questions, fetch info” only, with no sensitive writes.
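
The last two fixes, schema checks and a read-only role, can be illustrated with a small dispatcher. This is a sketch under assumptions rather than the team's actual code: the case study used Pydantic, while this version swaps in a standard-library dataclass to stay dependency-free. The `ORD-` order ID format, `lookup_order`, and `dispatch` are all hypothetical.

```python
import re
from dataclasses import dataclass

ORDER_ID_PATTERN = re.compile(r"^ORD-\d{6}$")  # hypothetical ID format


@dataclass(frozen=True)
class OrderLookupInput:
    """Input schema for the order lookup tool (a Pydantic model in practice)."""
    order_id: str

    def __post_init__(self):
        if not isinstance(self.order_id, str) or not ORDER_ID_PATTERN.match(self.order_id):
            raise ValueError(f"invalid order_id: {self.order_id!r}")


def lookup_order(args: OrderLookupInput) -> dict:
    # Stand-in for a real read-only API call.
    return {"order_id": args.order_id, "status": "shipped"}


# Only read-only tools are registered. Refund and cancel endpoints are
# deliberately absent, so the agent cannot reach them at all.
READ_ONLY_TOOLS = {"lookup_order": (OrderLookupInput, lookup_order)}


def dispatch(tool_name: str, raw_args: dict) -> dict:
    """Validate the model's tool request before anything is executed."""
    if tool_name not in READ_ONLY_TOOLS:
        return {"error": f"unknown or disallowed tool: {tool_name}"}
    schema, func = READ_ONLY_TOOLS[tool_name]
    try:
        args = schema(**raw_args)  # missing or extra keys raise TypeError
    except (TypeError, ValueError) as exc:
        return {"error": f"invalid input for {tool_name}: {exc}"}
    return func(args)
```

Returning an error dict instead of raising turns a malformed request into an observation the agent can react to, rather than a hard failure.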

What’s Next for LangChain Agents: Trends & Roadmap

Hybrid Models:

- Agents combined with deterministic pipelines (LangGraph) will become the standard for balanced control and flexibility.

Security Layers:

- Advanced prompt firewalls and strict tool access restrictions will mature, enhancing agent robustness against vulnerabilities.

Cost-Aware Agents:

- Development of token budgeting and usage optimization techniques will be crucial for managing operational expenses.
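
One simple form of token budgeting is a per-task counter that the agent loop charges after every LLM call. The sketch below is illustrative only: `TokenBudget` and `BudgetExceeded` are hypothetical names, and the token counts are assumed to come from whatever usage figures the model provider reports.

```python
class BudgetExceeded(Exception):
    """Raised when a call would push the task past its token budget."""


class TokenBudget:
    """Tracks token usage for one agent task against a fixed ceiling."""

    def __init__(self, max_tokens: int):
        self.max_tokens = max_tokens
        self.used = 0

    def charge(self, prompt_tokens: int, completion_tokens: int) -> int:
        """Record one LLM call and return the number of tokens remaining."""
        cost = prompt_tokens + completion_tokens
        if self.used + cost > self.max_tokens:
            raise BudgetExceeded(
                f"call needs {cost} tokens, only "
                f"{self.max_tokens - self.used} left"
            )
        self.used += cost
        return self.max_tokens - self.used


budget = TokenBudget(max_tokens=1000)
remaining = budget.charge(prompt_tokens=300, completion_tokens=100)
print(remaining)  # 600
```

An agent loop would catch `BudgetExceeded` and return a partial answer or hand off to a human, instead of continuing to reason at unbounded cost.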

Agent Observability Tools:

- Better debugging, monitoring, and analytics layers are emerging to provide deeper insights into agent behavior.

Open-Source Alternatives:

- Frameworks like MetaGPT and AutoGen offer more structured alternatives, fostering innovation and community-driven solutions.

Conclusion

LangChain Agents bring a powerful abstraction to LLM-based applications by enabling dynamic decision-making and tool usage. Their ability to reason, plan, and act makes them ideal for building intelligent, context-aware systems.

However, with this power comes complexity. Deploying LangChain Agents in production environments requires careful consideration of stability, error handling, cost control, and observability.

As the ecosystem matures—with frameworks like LangGraph, evolving tooling best practices, and fine-tuned orchestration patterns—we’re getting closer to making production-grade agentic systems a reality.

In the end, LangChain Agents aren’t just a tool—they’re a step toward the future of autonomous AI applications. But as always in AI: design thoughtfully, deploy cautiously, and iterate relentlessly.