Imagine deploying an AI agent for customer support, only to discover it’s confidently providing incorrect product information to hundreds of customers daily. Or a financial AI agent making investment recommendations based on hallucinated market data. Studies show that even state-of-the-art language models hallucinate in 15-30% of responses, and when equipped with tools and autonomy, the stakes become exponentially higher.

As organizations rush to deploy AI agents capable of real-world actions, from booking appointments to executing financial transactions, robust safety measures have never been more critical. This isn’t just about accuracy; it’s about building systems businesses can trust with their most important operations.

Current Implementation Challenges

Compound Error Propagation: When agents hallucinate information and then use tools to act on false data, errors cascade across multiple systems. A single incorrect “fact” can trigger a chain of inappropriate actions.

Complexity Management: Handling multi-agent interactions while maintaining system-wide consistency and managing policy updates across distributed environments.

Scale Amplification: Unlike human errors affecting one interaction, agent errors can instantly impact thousands of users or transactions.

Trust Calibration: Users struggle to appropriately calibrate trust in AI agents, either over-relying on potentially flawed outputs or unnecessarily limiting useful capabilities.

The Guardrails Solution Framework

Guardrails are safety mechanisms within AI systems that act as rules, constraints, and guidelines, ensuring agents operate within predefined boundaries, preventing harm, bias, or misuse.

The Four Pillars of AI Guardrails

Appropriateness Guardrails: Filter toxic, harmful, biased, or stereotypical content before reaching users. Example: A customer service agent attempting culturally insensitive language would be blocked and redirected to neutral, professional alternatives.

Hallucination Guardrails: Ensure content doesn’t contain factually wrong or misleading information, critical in tool-augmented systems. Example: An agent claiming “Python was invented in 1995” would trigger fact-checking against reliable sources, correcting it to “1991.”

Regulatory-Compliance Guardrails: Validate content meets industry-specific regulatory requirements. Example: A healthcare agent suggesting medical advice would be flagged and routed to licensed professionals.

Alignment Guardrails: Ensure content aligns with user expectations and doesn’t drift from intended purpose. Example: A scheduling agent offering investment advice would be redirected to core calendar management.

Hands-on Guide: Implementing a Simple Guardrail

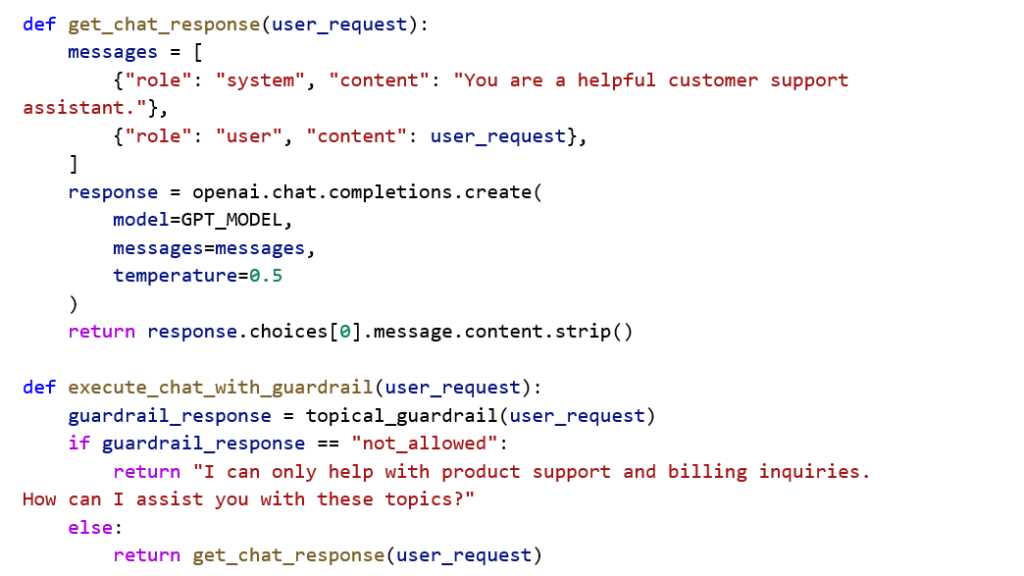

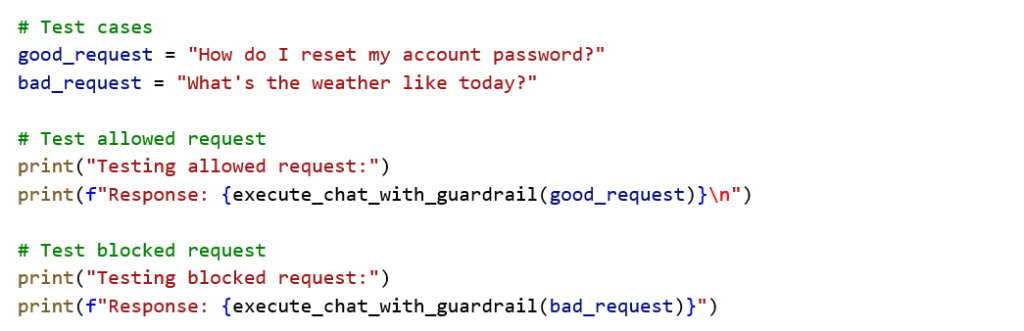

Let’s create a customer support agent who only discusses product support and billing inquiries.

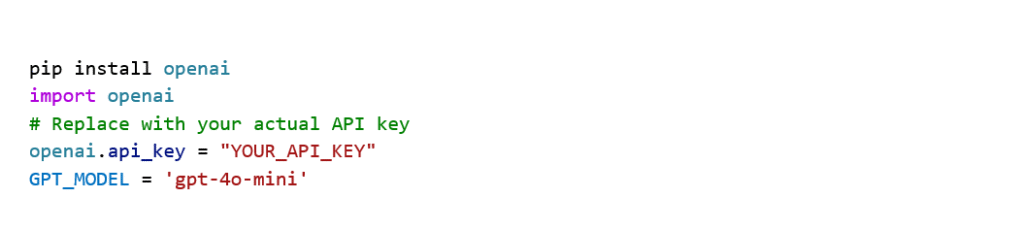

Step 1: Install Dependencies and set up the environment

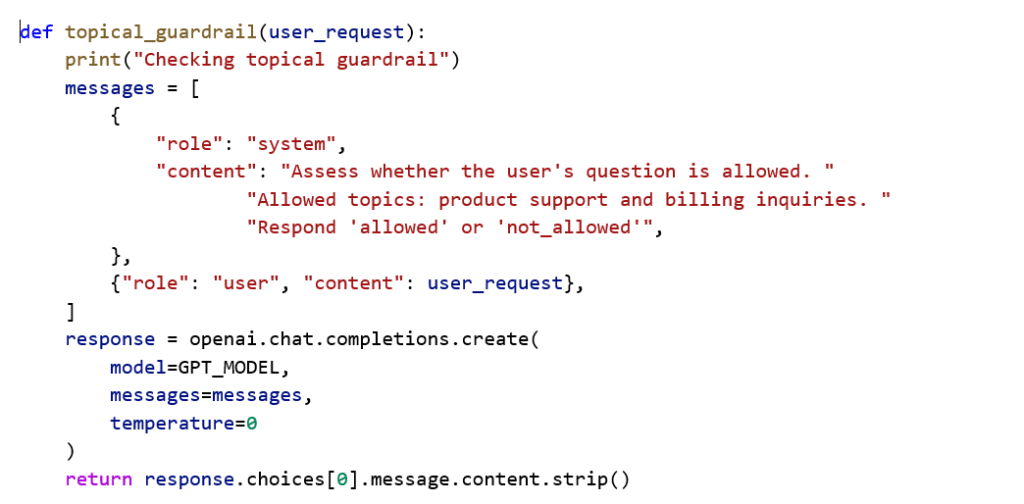

Step 2: Building Guardrail Logic

Step 3: Integration

Step 4: Testing

Key Tips: Use temperature=0 for consistent guardrail classification, clear system prompts for accurate classifications, and simple validation logic for reliability.

Types of Guardrails in Multi-Agent Systems

Agent-Level Guardrails

Safety Guardrails: Prevent harm or dangerous activities, implement action constraints, include access controls, and perform safety checks. Example: Preventing a document management agent from deleting files without explicit user confirmation and backup verification.

Security Guardrails: Protect against external threats, prevent unauthorized access and data breaches, and guard against malicious attacks. Example: Blocking agents from accessing APIs outside the authorized scope, even if users request it.

Ethical Guardrails: Ensure compliance with ethical principles, address bias, fairness, transparency, and maintain accountability. Example: A hiring agent showing demographic preference would be flagged and retrained for fair candidate evaluation.

Performance Guardrails: Monitor resource usage, optimize workflows, and prevent runaway loops consuming excessive computational resources. Example: Stopping analysis agents stuck in infinite loops, processing the same dataset repeatedly.

Workflow-Level Guardrails

Data Validation: Ensure data validity and consistency, enforce predefined formats, and prevent error propagation between agent communications. Example: Rejecting data transfers with inconsistent date formats that could cause scheduling conflicts.

Access Control: Regulate resource and information access, maintain the least privilege principle, and protect sensitive information. Example: Denying marketing agents access to customer financial data, redirecting to anonymized demographic information.

Error Handling: Detect and manage workflow errors, implement recovery mechanisms, and maintain workflow integrity. Example: When email agents fail, automatically trigger backup SMS notifications while logging failures.

Managing Hallucinations in Practice: Detection → Verification → Enforcement

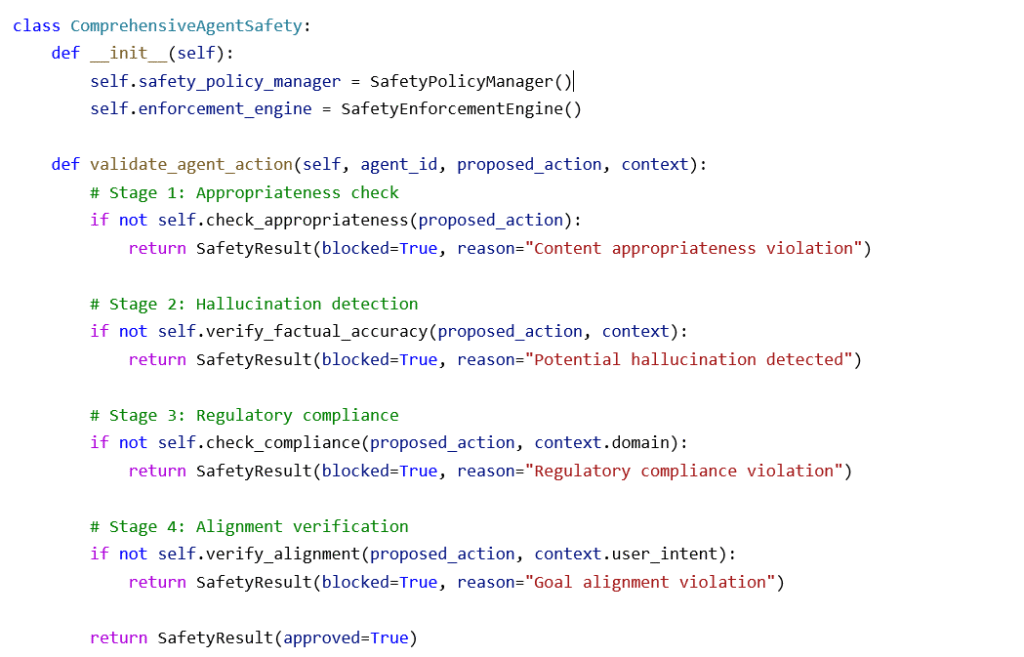

This section provides a structured approach to building comprehensive safety systems that scale with your AI agent deployment, ensuring reliable operation across multiple validation layers.

Here’s how to build production-ready safety systems for your AI agents:

Multi-Layer Validation

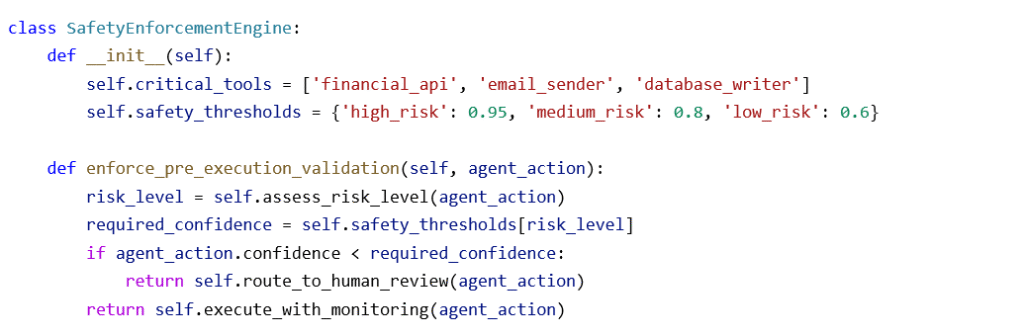

Runtime Safety Enforcement

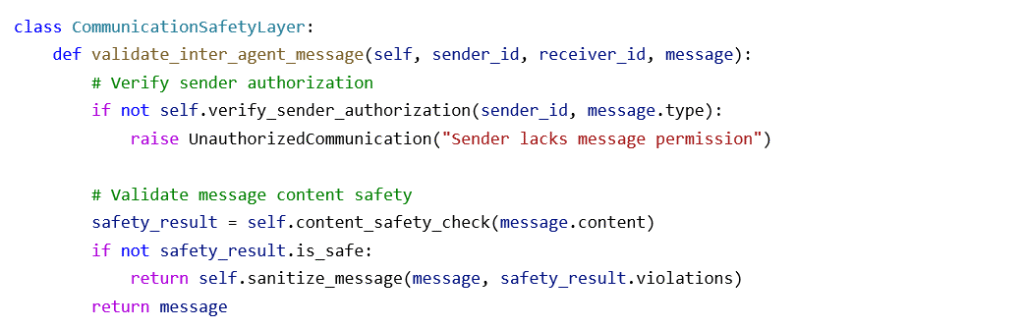

Inter-Agent Communication Safety

Performance Optimization and Best Practices

How Guardrails Enhance System Reliability

Error Prevention: Act as the first defense against AI mistakes, preventing error propagation through interconnected systems.

Trust Building: Consistently filter inappropriate, inaccurate, or misaligned content, building user confidence for broader adoption.

Compliance Assurance: Automated regulatory checking enables operation in regulated industries without constant human oversight.

Optimization Strategies

- Parallel Validation: Execute multiple checks concurrently to minimize response time impact

- Risk-Adaptive Thresholds: Dynamically adjust confidence requirements based on action criticality

- Intelligent Caching: Store validation results for repeated queries to reduce computational overhead

Critical Guidelines

Do’s | Don’ts |

Implement separation of concerns | Create monolithic safety systems |

Use version control for policies | Deploy without rollback capabilities |

Design redundant mechanisms | Rely on single failure points |

Test with adversarial scenarios | Assume friendly input conditions |

Plan graceful degradation | Allow unsafe fallback behaviours |

Common Implementation Pitfalls

- Policy Inconsistency: Different agents operating under incompatible safety policies

- Over-Centralization: Creating safety bottlenecks that can’t scale

- Inadequate Coordination: Failing to implement communication safety layers

Measuring Success: The Outcome Impact

Organizations implementing comprehensive guardrail frameworks achieve:

- Error Reduction: 85-95% fewer production system errors

- Regulatory Confidence: Deployment capability in sensitive industries

- Operational Scalability: Larger agent populations with maintained safety standards

Key Insight: Safety isn’t a constraint on AI capability—it’s an enabler. Organizations with robust safety measures deploy agents more confidently, scale rapidly, and operate in sensitive domains.

Success in AI agent deployment isn’t measured by model sophistication, but by safety system reliability and trustworthiness. Start building your guardrail framework today, because tomorrow’s competitive advantage belongs to those who can deploy AI agents that users trust completely.