Everyone is racing toward AGI — but no one agrees on what AGI actually is.

Microsoft researchers say GPT-4 shows “sparks of AGI,” DeepMind insists we’re years away, and some philosophers argue we can’t define intelligence without consciousness.

So how do you plan, invest, and build for a future no one can clearly describe?

What Is AGI? (Or What People Think It Is)

Artificial General Intelligence refers to systems that match or exceed human cognitive abilities across diverse tasks — not just narrow skills like playing chess or coding.

But here’s the issue: the world doesn’t agree on what “general” means.

The Four Competing Definitions

| Definition | Key Criteria | Who Uses It |
|---|---|---|
| Task-Based | Performs any intellectual task humans can | OpenAI, DeepMind |
| Economic | Automates the majority of valuable work | Economists, VCs |
| Cognitive | Exhibits reasoning + consciousness | Cognitive scientists |
| Adaptability | Learns new tasks without retraining | ML researchers |
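
To make the disagreement concrete, here is a minimal Python sketch that writes each of the four definitions as an explicit check over a single capability profile. Everything in it is an assumption for illustration: the `SystemProfile` fields, the task-suite counts, and the 0.5 automation cutoff are hypothetical placeholders, not established criteria. The point is only that one system can pass one definition and fail the others.

```python
from dataclasses import dataclass


@dataclass
class SystemProfile:
    """Hypothetical capability profile for one AI system (illustrative only)."""
    human_tasks_passed: int          # tasks in a hypothetical suite where it matches humans
    human_tasks_total: int           # size of that hypothetical task suite
    share_of_work_automated: float   # fraction of valuable work it can automate, 0..1
    shows_reasoning: bool
    shows_consciousness: bool
    learns_without_retraining: bool


# Each definition from the table, written as an explicit predicate.
# The thresholds are assumptions, not agreed-upon standards.
DEFINITIONS = {
    "Task-Based": lambda p: p.human_tasks_passed == p.human_tasks_total,
    "Economic": lambda p: p.share_of_work_automated > 0.5,
    "Cognitive": lambda p: p.shows_reasoning and p.shows_consciousness,
    "Adaptability": lambda p: p.learns_without_retraining,
}


def verdicts(profile: SystemProfile) -> dict[str, bool]:
    """Return whether the profile counts as 'AGI' under each definition."""
    return {name: check(profile) for name, check in DEFINITIONS.items()}


if __name__ == "__main__":
    # A made-up system: strong at automation, weak on the other criteria.
    example = SystemProfile(
        human_tasks_passed=80,
        human_tasks_total=100,
        share_of_work_automated=0.6,
        shows_reasoning=True,
        shows_consciousness=False,
        learns_without_retraining=False,
    )
    for name, is_agi in verdicts(example).items():
        print(f"{name:<13} {'AGI' if is_agi else 'not AGI'}")
```

With these made-up numbers only the Economic check passes, so the same system is "AGI" to an economist and "not AGI" to a cognitive scientist.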

Why This Topic Matters

Who Should Read This?

- CTOs & Tech Leaders

- Policymakers

- ML Engineers & Research Teams

- Investors betting on long-term AI outcomes

Why It’s Relevant Across Industries

In BFSI (banking, financial services, and insurance), retail, healthcare, and manufacturing, organizations are planning for automation, AI copilots, or workforce transformation.

But strategies depend entirely on which AGI definition you assume.

Current Challenges Without Clarity

- Conflicting expectations between engineering, leadership, and investors

- Regulations are being drafted with no shared definition

- Billions spent on divergent R&D strategies

- Safety efforts are misaligned with actual capability risks

“We’re conducting a $100 billion experiment with no agreed-upon success criteria.”

Practical Implementation (What AGI Means Today — And What It Doesn’t)

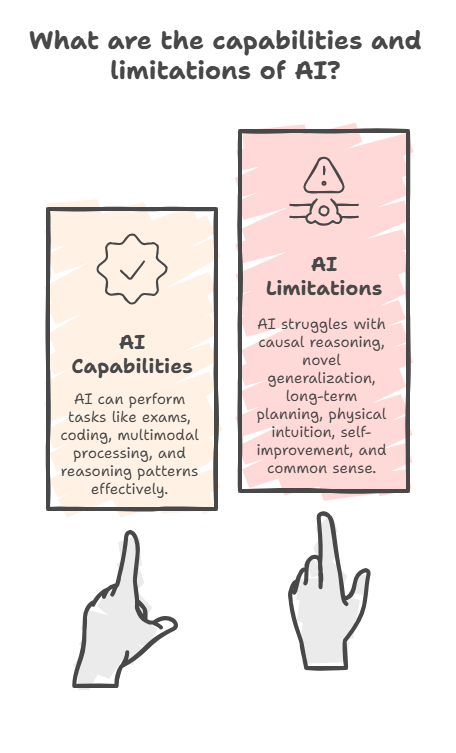

Even though we can’t define AGI clearly, we can measure what today’s models can and cannot do.

What Today’s AI Systems Can Do

- Pass professional exams (law, medicine, engineering)

- Generate complex code

- Work across text, images, and audio (multimodal capabilities)

- Perform sophisticated pattern-based reasoning

What They Cannot Do

- Generalize to completely novel situations

- Perform reliable causal reasoning

- Execute long-term coherent planning

- Understand or operate in physical environments

- Self-improve autonomously

- Maintain consistent common sense

The Practical Gap

Current AI systems = pattern recognizers

Humans = flexible reasoners

That gap is the AGI problem.

Performance & Best Practices (How to Navigate AGI in Business Today)

Do’s & Don’ts for Organizations Working With “Pre-AGI” AI

| Do’s | Don’ts |
|---|---|
| Define your AGI framework explicitly | Assume everyone means the same thing |
| Use measurable capability milestones | Treat AGI as binary (achieved/not achieved) |
| Separate marketing from research | Overhype incremental progress |
| Adopt tiered capability levels | Ignore safety due to ambiguity |

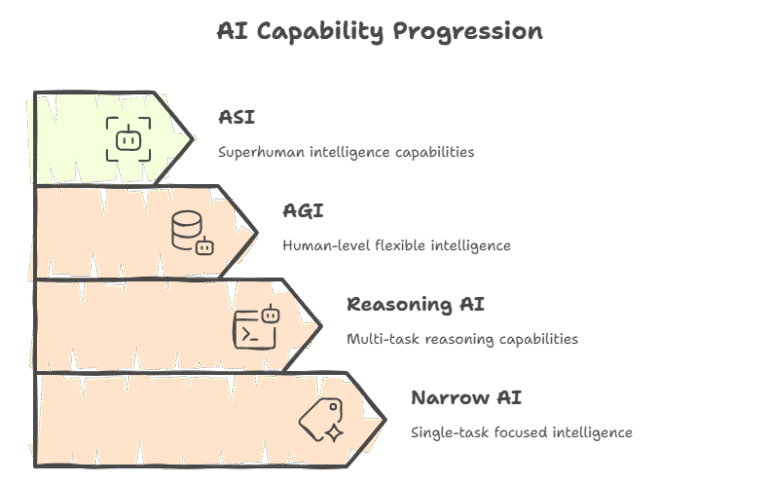

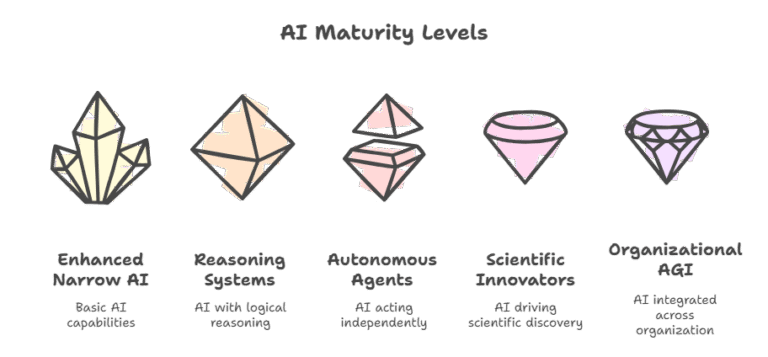

The Levels Approach (A Better Framework)

- Level 1: Enhanced Narrow AI (current systems)

- Level 2: Reasoning Systems (emerging)

- Level 3: Autonomous AI Agents

- Level 4: Scientific Innovators

- Level 5: Organizational AGI Entities

This tiered approach avoids premature claims while still allowing progress to be measured.
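
The tiered idea can be sketched as a cumulative ladder. In this minimal Python sketch, a system sits at the highest level whose milestones, together with those of every lower level, are all met, and progress toward the next level is reported as a fraction instead of a yes/no flag. The level names follow the list above; the milestone names are hypothetical stand-ins for whatever measurable checks an organization actually adopts.

```python
# Level names follow the article's list; milestone names are hypothetical examples.
LEVELS = [
    (1, "Enhanced Narrow AI", {"passes_professional_exams", "generates_complex_code"}),
    (2, "Reasoning Systems", {"multi_step_reasoning", "reliable_causal_reasoning"}),
    (3, "Autonomous AI Agents", {"long_horizon_planning", "operates_without_supervision"}),
    (4, "Scientific Innovators", {"novel_discovery"}),
    (5, "Organizational AGI Entities", {"runs_an_organization"}),
]


def assess(met: set[str]) -> tuple[int, float]:
    """Return (current level, fraction of the next level's milestones met).

    Levels are cumulative: a level counts only if all of its milestones and
    all lower levels' milestones are met. Level 0 means Level 1 is not yet met.
    """
    current = 0
    for level, _name, milestones in LEVELS:
        if milestones <= met:  # subset test: every milestone for this level is met
            current = level
        else:
            # Graded progress toward the first level that is not yet complete.
            return current, len(milestones & met) / len(milestones)
    return current, 1.0  # every level is complete


if __name__ == "__main__":
    level, progress = assess(
        {"passes_professional_exams", "generates_complex_code", "multi_step_reasoning"}
    )
    print(level, progress)
```

For this hypothetical input, `assess` returns `(1, 0.5)`: Level 1 is complete and half of Level 2's milestones are met. Returning a fraction alongside the level is one way to follow the advice not to treat AGI as binary.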

Industry Use Case / Real-World Application

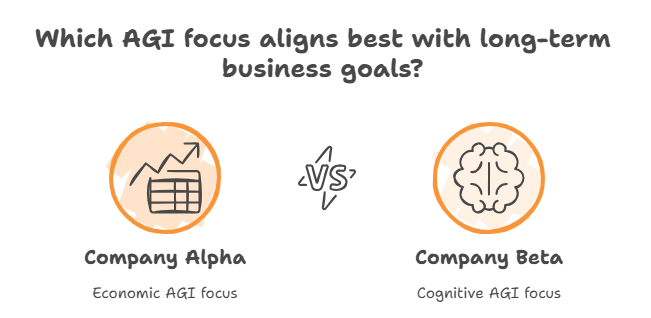

Two Companies, Two AGI Definitions — Two Completely Different Outcomes

Company Alpha: “AGI = Economic Automation”

Strategy

- Deploys GPT-like systems across processes

- Automates 60% of customer service

- AI coding assistants

- Legal document automation

Short-Term Outcome (2024)

Huge ROI, faster delivery, strong competitive advantage.

Long-Term Risk (2030)

If AGI turns out to require robotics and physical intelligence, Alpha could be leapfrogged by competitors investing in embodied AI.

Company Beta: “AGI = Human-Level Cognition”

Strategy

- Heavy investment in fundamental research

- Building embodied AI prototypes

- Slower commercialization

Short-Term Outcome (2024)

Low revenue impact, investor pressure.

Long-Term Potential (2030)

If AGI turns out to require deep reasoning and physical intelligence, Beta could become a first mover.

Insight:

Success depends not on who is “right” today, but on which AGI definition becomes reality.

Future Trends & Roadmap

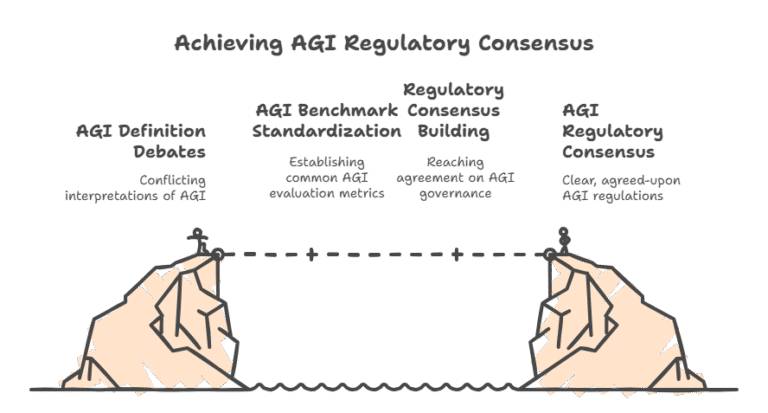

What’s Coming (2024–2030)

2024–2025:

- Heated debates continue

- Tiered capability frameworks gain adoption

2026–2028:

- Standard AGI benchmarks emerge (safety + capability)

- Policy frameworks begin adopting unified definitions

2028–2030:

- Global regulatory consensus forms

- Industry begins aligning around measurable AGI thresholds

Long-Term (Beyond 2030)

Either AGI arrives and forces a definition retroactively, or humanity debates it indefinitely.

Emerging Standardization Efforts

- AGI Safety Benchmark Initiative

- EU AI Act’s general-purpose AI guidelines

- Joint safety coalitions from OpenAI, Anthropic, Google, and Meta

Conclusion: Finding Clarity in Complexity

AGI is both closer and further away than headlines suggest:

- Closer, in terms of economic automation

- Further away, in terms of truly general, human-level reasoning

- Entirely dependent on which definition you use

The path forward requires:

- Humility: we may be describing different phenomena

- Precision: focus on capabilities, not vague labels

- Flexibility: accept multiple workable frameworks

- Urgency: align definitions for governance and safety

Maybe the right question isn’t “When will AGI arrive?”

But rather:

“Which aspects of general intelligence will we achieve first — and how do we govern them responsibly?”

The finish line is blurry, but the race is real.

The sooner we align on a direction, the better positioned we are to build a beneficial future.