The choice of a Machine Learning platform is one of the most critical decisions for any data-driven organization in 2025. With ML models moving from experimental notebooks to high-scale production systems, the underlying platform must provide a unified, governed, and highly scalable environment.

This deep dive compares three of the most powerful contenders: Databricks ML, Azure Machine Learning, and Google Vertex AI. Each offers a distinct philosophy, from open-source lakehouse alignment to fully managed, cloud-native MLOps.

Introduction: Why These Three Platforms Matter in 2025

The modern ML landscape demands more than just training a model; it requires robust MLOps, seamless feature engineering, and enterprise-grade governance at petabyte scale.

- Databricks ML leverages the Lakehouse Architecture, which unifies data warehouse and data lake capabilities. It is built on open-source standards, with MLflow at its core, which makes it highly portable and well suited to Big Data and Spark-native workloads.

- Azure Machine Learning (Azure ML) is Microsoft’s comprehensive, integrated platform. It’s the natural choice for organizations heavily invested in the Azure and Microsoft ecosystem (Azure DevOps, Power BI), offering a highly managed experience and deep enterprise features.

- Google Vertex AI is Google Cloud’s unified platform, built to simplify the entire ML workflow. Its focus on GenAI and cutting-edge infrastructure (TPUs), together with deep integration with BigQuery and Google’s data stack, makes it ideal for enterprises seeking speed and access to Google’s state-of-the-art research.

Choosing between them is less about which is “best” and more about which architecture, open-source strategy, and cloud ecosystem aligns with your enterprise goals.

Platform Breakdown: What is Each Offering?

| Platform | Core Philosophy | Key Differentiator | Best Suited For |
|---|---|---|---|
| Databricks ML | Open Lakehouse & Unification | Built on open-source standards (Delta Lake, MLflow, Spark) for data and ML, offering exceptional Big Data handling. | Spark-native workloads, Big Data ETL, cross-cloud portability, and organizations prioritizing open-source tools. |
| Azure ML | Integrated Cloud Service & MLOps | A fully managed MLOps platform deeply integrated into the larger Azure ecosystem and enterprise services. | Microsoft-centric organizations, regulated industries needing deep governance, and advanced MLOps automation. |
| Google Vertex AI | Unified, Serverless & GenAI | A single platform for all ML tasks, from notebooks to production, with superior integration of Google’s foundation and GenAI models. | GenAI applications, code-first development, deep BigQuery users, and leveraging Google’s specialized hardware (TPUs). |

What is Databricks ML?

Databricks ML is the Machine Learning and AI capability layer built on the Databricks Lakehouse Platform. It provides a comprehensive environment for data scientists and ML engineers, spanning from data ingestion to model deployment, anchored by open-source technologies such as Apache Spark, Delta Lake, and, most notably, MLflow for experiment tracking and model management.

What is Azure ML?

Azure Machine Learning is a cloud service designed to accelerate the building, training, and deployment of ML models. It provides a Workspace (the top-level resource) to manage all ML assets – data, compute, experiments, and deployments. It offers both code-first (Python SDK and CLI) and low-code (Studio UI) experiences, with a strong emphasis on production-ready MLOps pipelines.

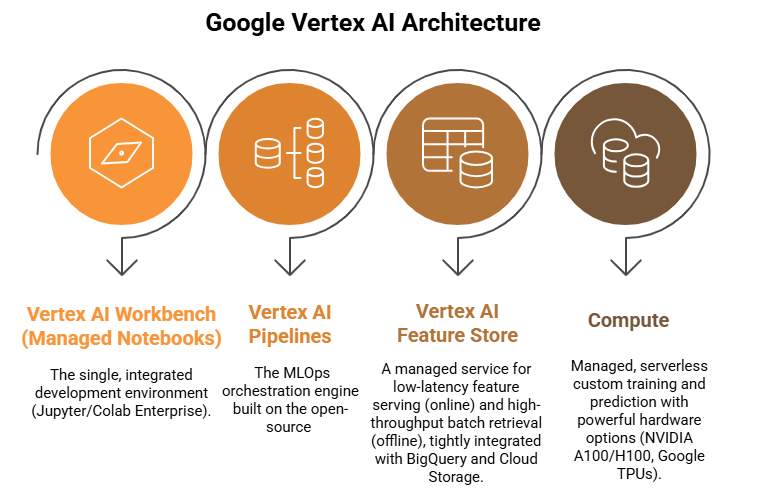

What is Google Vertex AI?

Vertex AI is Google Cloud’s unified platform for all ML development. It brings together over a dozen Google Cloud services for building ML models under one roof, with a clear focus on GenAI and MLOps. Its design aims to eliminate the complexity of stitching together disparate services, offering managed tools such as Vertex AI Workbench for development and Vertex AI Pipelines for orchestration.

Core Architectural Components

While the underlying cloud infrastructure differs, all three platforms provide similar logical components for the ML lifecycle.

Databricks ML Architecture: Lakehouse + MLflow + Feature Store

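The Databricks ML architecture is inherently tied to the Lakehouse: training data lives in Delta Lake tables, experiments and models are tracked with MLflow, and the Feature Store serves features computed from those same tables.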

Azure ML Architecture: Workspaces + Pipelines + Endpoints

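The Azure ML architecture revolves around the Workspace as the central hub: pipelines, compute targets, registered models, and endpoints are all assets managed within a workspace.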

Google Vertex AI Architecture: Pipelines + Workbench + Feature Store

Vertex AI is designed as a unified platform, replacing many older, individual GCP ML services (notably AI Platform and the standalone AutoML products).

Comparison of Developer Experience

| Feature | Databricks ML | Azure ML | Google Vertex AI |
|---|---|---|---|
| Primary Environment | Databricks Notebooks (Python, Scala, SQL, R) | Azure ML Compute Instances (Jupyter, VS Code Remote) | Vertex AI Workbench (Managed Notebooks, Colab Enterprise) |
| SDK | Python/PySpark (MLflow API, Databricks SDK) | Python SDK v2, CLI v2, REST API | Vertex AI SDK for Python (google-cloud-aiplatform) |
| Ease of Use (Setup) | Moderate: requires cluster management skills (although serverless compute is easing this requirement). | Easy: fully managed Workspace, though deep networking (VNet) configuration can be complex. | Easy: truly unified platform with a “serverless” model for many components. |
| Integrations | Git (via Repos), MLflow, all major cloud data stores. | Azure DevOps, GitHub, VS Code extension, Power BI, Azure Data Factory. | BigQuery, Cloud Storage, Ray on Vertex AI, Google Cloud ecosystem. |
| Low/No-Code | Delta Live Tables (data prep), Databricks AutoML. | Azure ML Studio Designer, AutoML, Data Labeling. | Vertex AI Studio (GenAI), AutoML. |

Data Preparation & Feature Engineering

| Platform | Data Preparation Tooling | Feature Store Capability | Consistency/Lineage |
|---|---|---|---|
| Databricks ML | Delta Live Tables (DLT) for ETL; Spark, pandas, pandas API on Spark (formerly Koalas), and SQL on the Lakehouse. | Databricks Feature Store: unified for batch and streaming, with an offline (Delta) and online store and native MLflow integration. | Exceptional: features and models are tied directly to the source data in Delta Lake via lineage tracking. |
| Azure ML | Azure Data Factory, Azure Synapse Analytics, ML pipeline components. | Azure ML managed feature store: supports feature set definitions, with materialization to an offline store and an online store (Azure Cache for Redis). | Very strong: integration with Microsoft Purview (formerly Azure Purview) for end-to-end data lineage and governance. |
| Google Vertex AI | BigQuery, Dataproc Serverless (for Spark), Vertex AI Pipelines. | Vertex AI Feature Store: highly scalable, low-latency managed service for online and offline feature serving. | Strong: integrated with BigQuery and Data Catalog for data and feature metadata management. |
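
All three feature stores share one idea: a feature is defined once, then materialized both to an offline store (full history, read by training) and to an online store (latest value per entity, read by low-latency serving), so training and serving see identical values. The stdlib-only sketch below illustrates that idea in memory. `FeatureStore`, `register`, `materialize`, `training_rows`, and `get_online` are hypothetical names chosen for this example, not any platform’s API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical in-memory stand-in: real platforms back the offline store with
# Delta Lake or BigQuery tables and the online store with a low-latency database.
@dataclass
class FeatureStore:
    definitions: Dict[str, Callable[[dict], float]] = field(default_factory=dict)
    # Offline store: full history of (timestamp, entity_id, feature, value).
    offline: List[Tuple[int, str, str, float]] = field(default_factory=list)
    # Online store: only the latest value per (entity_id, feature).
    online: Dict[Tuple[str, str], float] = field(default_factory=dict)

    def register(self, name: str, fn: Callable[[dict], float]) -> None:
        """Register one feature definition shared by training and serving."""
        self.definitions[name] = fn

    def materialize(self, events: List[dict]) -> None:
        """Compute each feature once and write the result to both stores."""
        for event in sorted(events, key=lambda e: e["ts"]):
            for name, fn in self.definitions.items():
                value = fn(event)
                self.offline.append((event["ts"], event["entity_id"], name, value))
                self.online[(event["entity_id"], name)] = value  # latest wins

    def training_rows(self, name: str) -> List[Tuple[int, str, float]]:
        """Historical values, as a training pipeline would read them."""
        return [(ts, eid, v) for ts, eid, n, v in self.offline if n == name]

    def get_online(self, entity_id: str, name: str) -> float:
        """Latest value, as a serving endpoint would read it."""
        return self.online[(entity_id, name)]


store = FeatureStore()
store.register("order_total_usd", lambda e: e["qty"] * e["unit_price"])
store.materialize([
    {"ts": 2, "entity_id": "c1", "qty": 1, "unit_price": 7.5},
    {"ts": 1, "entity_id": "c1", "qty": 2, "unit_price": 5.0},
])
print(store.training_rows("order_total_usd"))     # [(1, 'c1', 10.0), (2, 'c1', 7.5)]
print(store.get_online("c1", "order_total_usd"))  # 7.5
```

Because both stores are written from the same function in the same pass, the online value always equals the most recent offline row, which is the training/serving consistency the table refers to.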

Model Training

All platforms support training with popular frameworks (TensorFlow, PyTorch, scikit-learn). The key difference is how each manages compute and experiment tracking.

How Training Jobs Work

- Databricks ML: Training runs on Databricks clusters, either single-node or distributed (Spark, Horovod, or TorchDistributor). When the training script executes, MLflow autologging captures parameters, metrics, and the model artifact, and logs the run to the workspace’s managed MLflow tracking server.

- Azure ML: A Job (e.g., CommandJob, PipelineJob) is submitted via the SDK/CLI/Studio. This job defines the training script, environment (Docker/Conda), and compute target (Compute Cluster). The platform spins up the cluster, runs the job, and automatically tracks outputs.

- Google Vertex AI: Training runs as a Custom Training Job (serverless, on-demand compute with a prebuilt or custom container) or as a step in Vertex AI Pipelines. The platform manages the execution environment; the training script is expected to save artifacts to Cloud Storage, and runs can be tracked with Vertex AI Experiments.
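
Whatever the platform, the tracking layer records the same core facts per run: parameters, metrics (often one value per step), and artifact locations. The sketch below is a hypothetical, stdlib-only in-memory tracker that shows that data model. `Tracker`, `Run`, `start_run`, `log_param`, `log_metric`, and `log_artifact` are illustrative names; they echo MLflow’s naming but are not MLflow’s, Azure ML’s, or Vertex AI Experiments’ API.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Dict, Iterator, List, Optional, Tuple

@dataclass
class Run:
    run_id: str
    params: Dict[str, str] = field(default_factory=dict)
    metrics: Dict[str, List[Tuple[int, float]]] = field(default_factory=dict)
    artifacts: List[str] = field(default_factory=list)
    start_time: float = field(default_factory=time.time)

class Tracker:
    """Hypothetical tracker: stores params, step-wise metrics, and artifact paths."""

    def __init__(self) -> None:
        self.runs: Dict[str, Run] = {}
        self._active: Optional[Run] = None

    @contextmanager
    def start_run(self) -> Iterator[Run]:
        run = Run(run_id=uuid.uuid4().hex)
        self.runs[run.run_id] = run
        self._active = run
        try:
            yield run
        finally:
            self._active = None  # close the run even if training raises

    def _current(self) -> Run:
        if self._active is None:
            raise RuntimeError("no active run; use `with tracker.start_run():`")
        return self._active

    def log_param(self, key: str, value) -> None:
        self._current().params[key] = str(value)  # params are stored as strings

    def log_metric(self, key: str, value: float, step: int = 0) -> None:
        self._current().metrics.setdefault(key, []).append((step, float(value)))

    def log_artifact(self, path: str) -> None:
        self._current().artifacts.append(path)


tracker = Tracker()
with tracker.start_run() as run:
    tracker.log_param("learning_rate", 0.01)
    for epoch, loss in enumerate([0.9, 0.6, 0.4]):  # stand-in training loop
        tracker.log_metric("loss", loss, step=epoch)
    tracker.log_artifact("model/model.pkl")  # hypothetical artifact path

print(run.params)           # {'learning_rate': '0.01'}
print(run.metrics["loss"])  # [(0, 0.9), (1, 0.6), (2, 0.4)]
```

Autologging, as described for Databricks above, essentially makes the `log_*` calls for you by hooking into the training framework.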

Strengths and Weaknesses

| Platform | Strengths | Weaknesses |
|---|---|---|
| Databricks ML | Best for distributed training on Big Data (Spark), unified data/ML platform, open and portable tracking via MLflow. | Requires management of Spark clusters, less focused on pure GenAI model tuning than Vertex AI. |
| Azure ML | Excellent reproducibility via defined Environments and Components, highly managed compute, and strong integration with enterprise security. | Less native Big Data handling than Databricks, can be over-engineered for simple tasks. |
| Google Vertex AI | True serverless training, best-in-class support for TPUs and GenAI foundation model tuning, fastest access to cutting-edge hardware. | Steeper learning curve for non-GCP users, reliance on Cloud Storage for intermediate artifacts. |

Real-World Code Snippets

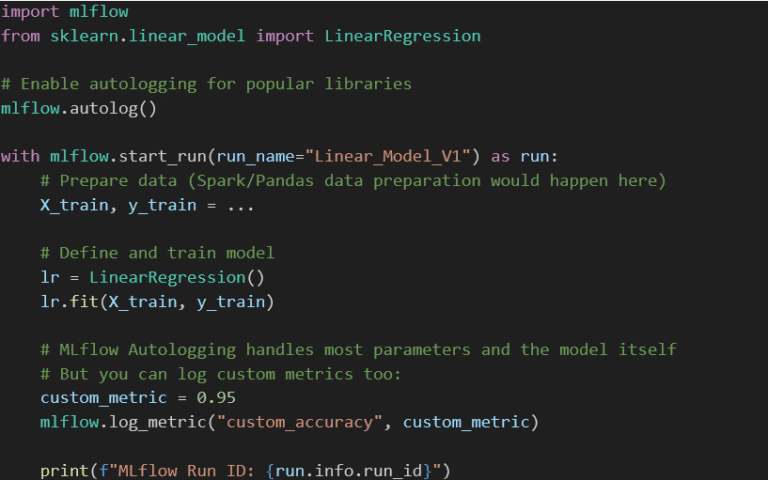

Databricks ML (MLflow Example)

This snippet shows using mlflow.log_metric to track an experiment run inside a Databricks Notebook.

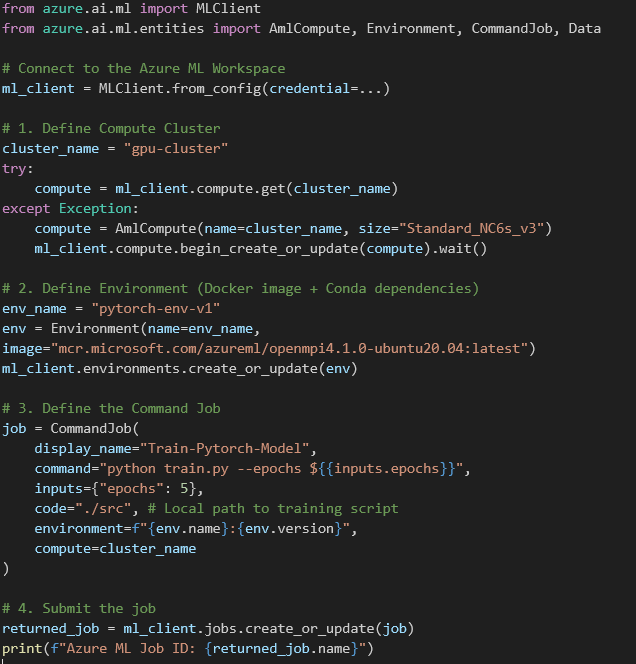

Azure ML (Python SDK v2 Job Example)

This conceptual example defines and submits a CommandJob using the Python SDK v2.

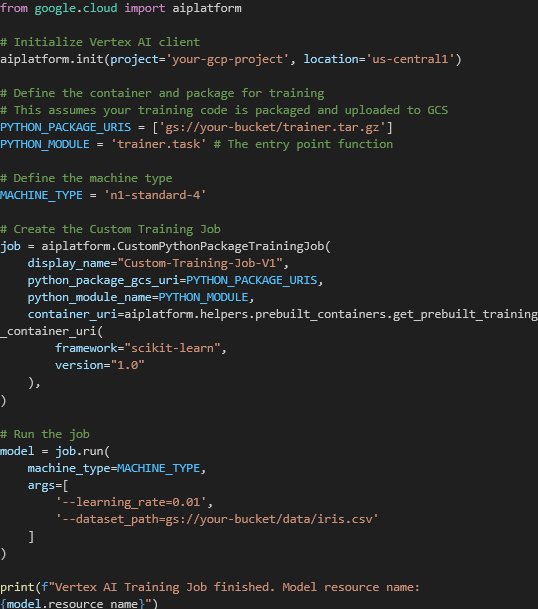

GCP Vertex AI (CustomTrainingJob Example)

This uses the Vertex AI SDK for Python to launch a managed custom training job from a Python package.

Hyperparameter Tuning Comparison

| Tool Name | Platform | Core Algorithm / Approach | Key Feature |
|---|---|---|---|
| Hyperopt | Databricks ML (open source) | Tree-structured Parzen Estimator (TPE), a Bayesian approach. | Open source and highly flexible; can parallelize trials across Databricks clusters (e.g., via SparkTrials). |
| HyperDrive (sweep jobs in SDK v2) | Azure ML | Bayesian optimization, random search, and grid search, with early termination policies (Bandit, Median Stopping). | Fully managed service, integrated with Azure ML’s experiment tracking and compute clusters. |
| Vertex AI Vizier | Google Vertex AI | Google’s black-box optimization service (based on Bayesian optimization). | Managed optimization service running on Google’s infrastructure; supports custom models and metrics. |
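
The early termination policies listed for Azure ML are simple rules applied at each evaluation interval. As Azure ML’s documentation describes them, a bandit policy ends a run whose metric is not within a slack factor of the best run (for a maximized metric: below best / (1 + slack_factor)), and a median stopping policy ends a run whose metric is worse than the median of the running averages across runs. The sketch below implements simplified versions of both rules on hypothetical validation-accuracy curves; `median_stopping` and `bandit_stopping` are illustrative names, not HyperDrive’s implementation, and real policies also honor settings such as the evaluation interval and an initial delay.

```python
from statistics import mean, median
from typing import Dict, List

Curves = Dict[str, List[float]]  # run name -> metric value per evaluation interval

def median_stopping(curves: Curves, run: str, step: int) -> bool:
    """Stop `run` if its metric at `step` is below the median of the running
    averages (intervals 0..step) of all runs that reached `step`."""
    averages = [mean(c[: step + 1]) for c in curves.values() if len(c) > step]
    return curves[run][step] < median(averages)

def bandit_stopping(curves: Curves, run: str, step: int, slack_factor: float = 0.1) -> bool:
    """Stop `run` if its metric at `step` is below best / (1 + slack_factor)."""
    best = max(c[step] for c in curves.values() if len(c) > step)
    return curves[run][step] < best / (1 + slack_factor)

# Hypothetical validation-accuracy curves (higher is better).
curves = {
    "trial_a": [0.60, 0.70, 0.80],
    "trial_b": [0.55, 0.65, 0.75],
    "trial_c": [0.40, 0.45, 0.50],
}
for name in curves:
    print(name, median_stopping(curves, name, step=1), bandit_stopping(curves, name, step=2))
# trial_a False False
# trial_b False False
# trial_c True True
```

At step 2 the best accuracy is 0.80, so with a slack factor of 0.1 any run below 0.80 / 1.1 ≈ 0.727 is cancelled; only `trial_c` falls under that bar, freeing its compute for more promising trials.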

AutoML Comparison

AutoML tools aim to automate feature engineering, algorithm selection, and hyperparameter tuning.

| Tool Name | Platform | Supported Tasks | Customization / Extensibility |
|---|---|---|---|
| Databricks AutoML | Databricks ML | Classification, Regression, Forecasting. | Highly transparent: generates an editable notebook with the source code of each trial (e.g., scikit-learn, XGBoost, LightGBM), allowing direct inspection and modification. |
| Azure ML AutoML | Azure ML | Classification, Regression, Forecasting, Image/Text (Deep Learning). | Low-code UI, rich visualization, and the ability to define training exit criteria and early termination rules. |
| Vertex AI AutoML | Google Vertex AI | Tabular, Image, Video, Text, Forecasting. | Focuses on Google’s highly optimized, specialized models (e.g., custom Vision and Natural Language models) for fast, high-accuracy results with minimal user input. |
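
At its core, the algorithm-selection and tuning part of AutoML is a search over (algorithm, hyperparameter) candidates, each scored on held-out data. The stdlib-only sketch below shows that loop on a hypothetical one-dimensional regression dataset with three hand-written candidate families (a mean baseline, least-squares linear regression, and k-nearest neighbours). `fit_mean`, `fit_linear`, `fit_knn`, `SEARCH_SPACE`, and `auto_ml` are illustrative names; real AutoML services add feature engineering, much larger search spaces, and smarter search strategies.

```python
from statistics import mean
from typing import Callable, Dict, List, Sequence, Tuple

Model = Callable[[float], float]

def fit_mean(xs: Sequence[float], ys: Sequence[float]) -> Model:
    m = mean(ys)  # baseline: always predict the training mean
    return lambda x: m

def fit_linear(xs: Sequence[float], ys: Sequence[float]) -> Model:
    mx, my = mean(xs), mean(ys)  # ordinary least squares for y = a + b*x
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    intercept = my - slope * mx
    return lambda x: intercept + slope * x

def fit_knn(xs: Sequence[float], ys: Sequence[float], k: int = 1) -> Model:
    pairs = list(zip(xs, ys))
    def predict(x: float) -> float:
        nearest = sorted(pairs, key=lambda p: abs(p[0] - x))[:k]
        return mean(y for _, y in nearest)  # average the k closest targets
    return predict

def mse(model: Model, xs: Sequence[float], ys: Sequence[float]) -> float:
    return mean((model(x) - y) ** 2 for x, y in zip(xs, ys))

# Search space: (algorithm name, fit function, hyperparameters).
SEARCH_SPACE = [
    ("mean", fit_mean, {}),
    ("linear", fit_linear, {}),
    ("knn", fit_knn, {"k": 1}),
    ("knn", fit_knn, {"k": 3}),
]

def auto_ml(train, valid) -> List[Tuple[float, str, Dict]]:
    """Fit every candidate on `train`, score it on `valid`, best (lowest MSE) first."""
    trials = []
    for name, fit, params in SEARCH_SPACE:
        model = fit(*train, **params)
        trials.append((mse(model, *valid), name, params))
    return sorted(trials, key=lambda t: t[0])

train = ([0, 1, 2, 3, 4, 5], [1, 3, 5, 7, 9, 11])  # hypothetical data: y = 2x + 1
valid = ([1.5, 3.5, 6], [4, 8, 13])
for score, name, params in auto_ml(train, valid):
    print(f"{name:<6} {params} mse={score:.2f}")
```

The returned leaderboard plays the same role as the trial list an AutoML run produces; Databricks AutoML’s per-trial notebooks expose the equivalent of each `fit_*` call as editable code.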

MLOps / Deployment

Operationalizing a model is where the platforms show the most distinct differences in their managed services.

| Platform | Serving Offering | Key Features | Blue/Green Deployment |
|---|---|---|---|
| Databricks Model Serving | Managed endpoints for pyfunc/MLflow models | Serverless, highly available serving for MLflow models that scales automatically; tight integration with the Model Registry. | Supported via traffic splitting across the models served by an endpoint. |
| Azure ML Managed Endpoints | Managed online endpoints (real-time) and batch endpoints | Fully managed, auto-scaling, built-in monitoring, and strong security (VNet integration). Supports no-code MLflow model deployment. | Excellent support via traffic allocation (e.g., 90% to V1, 10% to V2) for canary releases and A/B testing. |
| Vertex AI Predictions | Vertex AI endpoints (online) and batch prediction jobs | Highly scalable, low-latency prediction service with an optimized TensorFlow runtime and built-in Vertex Explainable AI. | Strong support for traffic splitting across models deployed to an endpoint, with rollback by shifting traffic back to the previous model. |
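
The traffic allocation behind blue/green and canary releases amounts to weighted routing of requests across deployments. The stdlib-only sketch below illustrates the idea under hypothetical names (`route`, `blue`, `green`): it hashes each request ID into one of 100 buckets, so a given request ID always reaches the same deployment. It does not reproduce how Databricks, Azure ML, or Vertex AI route traffic internally.

```python
import hashlib
from collections import Counter
from typing import Dict

def route(request_id: str, traffic: Dict[str, int]) -> str:
    """Pick a deployment for a request from integer percentage weights."""
    if sum(traffic.values()) != 100:
        raise ValueError("traffic percentages must sum to 100")
    # Hash the request ID into a stable bucket in [0, 100) so that retries
    # of the same request land on the same deployment.
    bucket = int(hashlib.sha256(request_id.encode()).hexdigest(), 16) % 100
    cumulative = 0
    for deployment, percent in sorted(traffic.items()):
        cumulative += percent
        if bucket < cumulative:
            return deployment
    raise AssertionError("unreachable: weights sum to 100")

# Canary release: 90% stays on the current "blue" model, 10% goes to "green".
canary = {"blue": 90, "green": 10}
counts = Counter(route(f"req-{i}", canary) for i in range(10_000))
print(counts)  # close to 9,000 blue and 1,000 green

# Rollback: set green's share back to 0 and all traffic returns to blue.
rollback = {"blue": 100, "green": 0}
print(Counter(route(f"req-{i}", rollback) for i in range(1_000)))  # Counter({'blue': 1000})
```

Rollback needs no redeployment in this model: only the weights change, which is why traffic splitting makes blue/green releases cheap to reverse.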

Pricing Comparison (High-Level)

All platforms employ a pay-as-you-go model, but their cost drivers differ:

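- Databricks ML: Primarily billed in Databricks Units (DBUs), a normalized unit of processing capability; the price per DBU depends on the workload type (e.g., jobs, all-purpose, or serverless compute) and the pricing tier. On classic (non-serverless) compute you also pay the cloud provider for the underlying infrastructure (VMs, storage). Costs depend heavily on cluster configuration and usage time.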

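- Azure ML: There is no separate charge for the Azure ML service itself; you pay for the compute you use (VM hours for training and serving) and for associated Azure resources such as Storage, Key Vault, Container Registry, and Application Insights. AutoML runs and managed endpoints are billed mainly through the compute they run on, so costs are predictable and tied directly to the underlying VM SKUs.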

- Google Vertex AI: Primarily billed for Training (per machine-hour/accelerator) and Predictions (per hour for endpoint VMs or per node hour for batch). Its serverless nature can make it very cost-effective for bursty workloads, as charges are often in 30-second increments after the first minute.
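
The cost drivers above can be compared with simple arithmetic. The sketch below uses entirely hypothetical rates (not published prices) to show how a Databricks bill on classic compute combines DBU charges with the cloud provider’s VM charges, and how billing with a one-minute minimum followed by 30-second increments, as described above for Vertex AI, rounds a short job. `databricks_job_cost`, `billable_seconds`, and `vertex_training_cost` are illustrative helpers; check each provider’s pricing page for the current rules and rates.

```python
import math

def databricks_job_cost(hours: float, nodes: int, dbu_per_node_hour: float,
                        usd_per_dbu: float, vm_usd_per_node_hour: float) -> float:
    """Classic compute: DBU charge (Databricks) + VM charge (cloud provider)."""
    dbu_cost = hours * nodes * dbu_per_node_hour * usd_per_dbu
    vm_cost = hours * nodes * vm_usd_per_node_hour
    return dbu_cost + vm_cost

def billable_seconds(runtime_s: float, minimum_s: int = 60, increment_s: int = 30) -> int:
    """Apply a one-minute minimum, then round up to 30-second increments."""
    if runtime_s <= minimum_s:
        return minimum_s
    extra_increments = math.ceil((runtime_s - minimum_s) / increment_s)
    return minimum_s + extra_increments * increment_s

def vertex_training_cost(runtime_s: float, usd_per_machine_hour: float) -> float:
    return billable_seconds(runtime_s) / 3600 * usd_per_machine_hour

# All rates below are made up for illustration only.
print(f"Databricks: ${databricks_job_cost(2, 4, 1.5, 0.15, 0.50):.2f}")  # $5.80
print(billable_seconds(45), billable_seconds(90), billable_seconds(95))  # 60 90 120
print(f"Vertex AI:  ${vertex_training_cost(95, 3.00):.4f}")              # $0.1000
```

In the Databricks case, 2 hours × 4 nodes × 1.5 DBU × $0.15 gives $1.80 in DBUs, and the VMs add 2 × 4 × $0.50 = $4.00, which shows why the DBU rate alone understates the bill on classic compute.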

Summary: Databricks pricing is often perceived as complex due to the DBU model. Azure ML is very clear and tied to VM usage. Vertex AI is highly competitive for GenAI/TPU workloads and has low friction for small, serverless jobs.

Governance, Security, Lineage Comparison

| Feature | Databricks ML | Azure ML | Google Vertex AI |
|---|---|---|---|
| Core Governance | Unity Catalog: centralized governance for data, ML models, notebooks, and files across the Lakehouse. | Microsoft Purview (formerly Azure Purview)/Microsoft Fabric: deeply integrated tools for data lineage, discovery, and governance across Azure services. | Data Catalog/Security Command Center: tools for metadata management, discovery, and central security posture. |
| Security/Networking | Cluster-level ACLs, integration with cloud IAM; supports VNet injection/Private Link. | Managed virtual network (VNet) isolation for private compute and workspace access; excellent for regulated industries. | Robust IAM, private endpoints, and deep integration with GCP’s zero-trust security model. |
| ML Lineage | MLflow records parameters, metrics, code version, and (with autologging or explicit logging) input datasets for each run; Unity Catalog extends lineage to the data layer. | Azure ML pipelines provide an audited record of all steps, assets, and compute used to create a model. | Vertex ML Metadata tracks every step, artifact, and lineage relationship across Pipelines and Experiments. |
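
Lineage tracking in all three platforms comes down to a graph linking input artifacts, executions (pipeline steps or training runs), and output artifacts. The stdlib-only sketch below models that graph under hypothetical names (`LineageGraph`, `record`, `upstream`, and the artifact names) and answers the typical audit question, “which data and steps produced this model?”. It is not the data model of Unity Catalog, Azure ML, or Vertex ML Metadata.

```python
from collections import defaultdict, deque
from typing import Dict, List, Set

class LineageGraph:
    """Hypothetical lineage store linking artifacts and executions."""

    def __init__(self) -> None:
        # artifact -> executions that produced it
        self.produced_by: Dict[str, List[str]] = defaultdict(list)
        # execution -> artifacts it consumed
        self.consumed: Dict[str, List[str]] = {}

    def record(self, execution: str, inputs: List[str], outputs: List[str]) -> None:
        self.consumed[execution] = list(inputs)
        for artifact in outputs:
            self.produced_by[artifact].append(execution)

    def upstream(self, artifact: str) -> Set[str]:
        """Breadth-first walk returning every execution and artifact upstream."""
        seen: Set[str] = set()
        queue = deque([artifact])
        while queue:
            node = queue.popleft()
            for execution in self.produced_by.get(node, []):
                if execution in seen:
                    continue
                seen.add(execution)
                for parent in self.consumed[execution]:
                    if parent not in seen:
                        seen.add(parent)
                        queue.append(parent)
        return seen


g = LineageGraph()
g.record("clean_step", inputs=["raw_orders"], outputs=["orders_clean"])
g.record("feature_step", inputs=["orders_clean"], outputs=["customer_features"])
g.record("train_run_42", inputs=["customer_features", "labels"], outputs=["churn_model_v3"])
print(sorted(g.upstream("churn_model_v3")))
# ['clean_step', 'customer_features', 'feature_step', 'labels', 'orders_clean', 'raw_orders', 'train_run_42']
```

Running the same walk in the opposite direction (downstream) answers the complementary governance question: which models must be retrained if `raw_orders` turns out to be faulty.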

Final Summary Comparison Table

| Feature | Databricks ML | Azure ML | Google Vertex AI |
|---|---|---|---|
| Strengths | 🥇 Best for Big Data/Spark. Unifies Data/ML. Open-source MLflow portability. | 🥇 Best for MLOps Automation & Enterprise Integration (Microsoft stack). Deep VNet security. | 🥇 Best for GenAI & Cutting-Edge Infrastructure (TPUs). Truly unified/serverless platform. |
| Weaknesses | Cluster management overhead (though Serverless is improving). DBU pricing can be opaque. | It can be overly prescriptive and complex for simple, one-off experiments. | Steeper learning curve for non-GCP users. Less native Big Data processing than Databricks. |
| Best Use Case | Organizations with petabytes of data, existing Spark workloads, or a mandate for an open-source, multi-cloud strategy. | Microsoft-centric enterprises, highly regulated industries, or those who need a comprehensive, fully managed MLOps solution. | Organizations focused on GenAI, large-scale deep learning (TPUs), or existing heavy users of BigQuery/GCP. |

Conclusion: Which Platform Fits Your Needs?

The best ML platform is the one that minimizes friction, maximizes governance, and scales with your specific data needs.

- Choose Databricks ML if: Your primary challenge is the volume and velocity of your data. If you are already running ETL on Databricks/Spark, unifying your data and ML on the Lakehouse using MLflow is the most natural and efficient path. It’s the platform of choice for Big Data ML.

- Choose Azure ML if: Your organization is deeply invested in the Microsoft ecosystem (Azure DevOps, Azure Data Factory, Microsoft Entra ID, formerly Azure Active Directory). Its MLOps pipelines and managed endpoints are mature, robust, and offer the strongest enterprise governance and security features for regulated industries.

- Choose Google Vertex AI if: You are pushing the boundaries of GenAI, leveraging large language models, or require specialized hardware like TPUs. Its unified architecture simplifies MLOps, making it excellent for code-first data science teams that value a serverless, simplified user experience and deep integration with Google’s data stack.

No matter your choice, all three platforms are fully capable of hosting a world-class MLOps workflow. Your final decision should be driven by your existing cloud commitment, data architecture, and organizational comfort with open-source versus fully managed services.