In enterprise data platforms, Spark is the backbone for large-scale data processing, whether in Azure Databricks, Synapse, or standalone clusters. A common requirement is to establish a connection between Spark and Azure SQL Database for reading data, persisting results, or enabling downstream reporting.

Traditionally, this connection relies on username and password authentication. while this method introduces security challenges. A more secure and modern approach is to use Azure Active Directory (AAD) authentication with a Service Principal. This method eliminates SQL logins, leverages centralized identity, and aligns seamlessly with enterprise security best practices.

This blog’s walkthrough with how to implement this approach in Azure Databricks using the Adal library for token generation.

Why Service Principal Authentication?

A Service Principal acts as an application identity in Azure Active Directory. By granting this identity access to an Azure SQL Database, we avoid creating SQL logins entirely. Instead, Spark authenticates against AAD, acquires an access token, and uses that token to connect.

Key Advantages of Service Principal – Based Authentication:

- No Password → eliminate the risks of leaked secrets.

- Centralized Access Control → Managed via AAD roles, not SQL logins.

- Scalability → Same SP can be reused via multiple environments and workspaces.

- Compliance → Align with enterprise identity governance and least privileged principal.

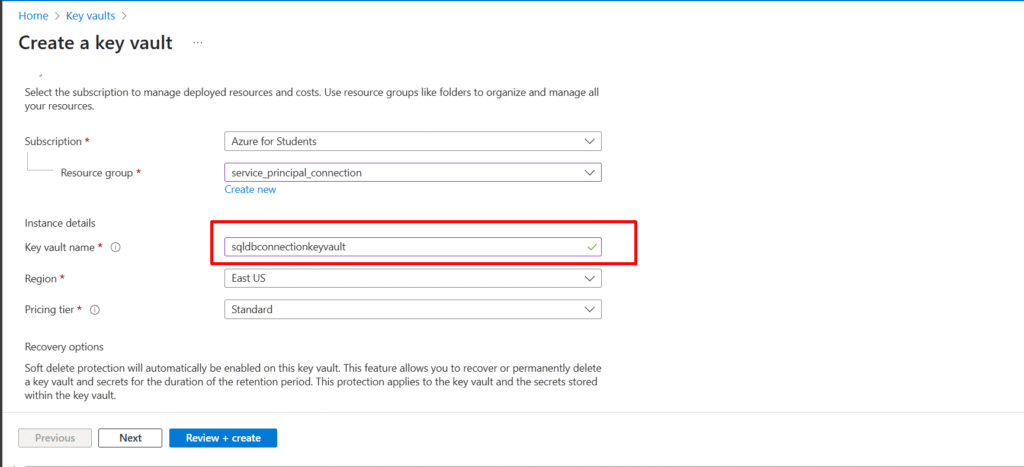

Step 1: Creating the Key Vault

The Key Vault will serve as the central store for your secrets. Start by provisioning a Key Vault in your subscription. In the Azure Portal, navigate to Key Vaults → Create, provide a unique name, select the region and resource group, and create the vault. Once the resource is created, refresh the screen to show the new “key vault” we created.

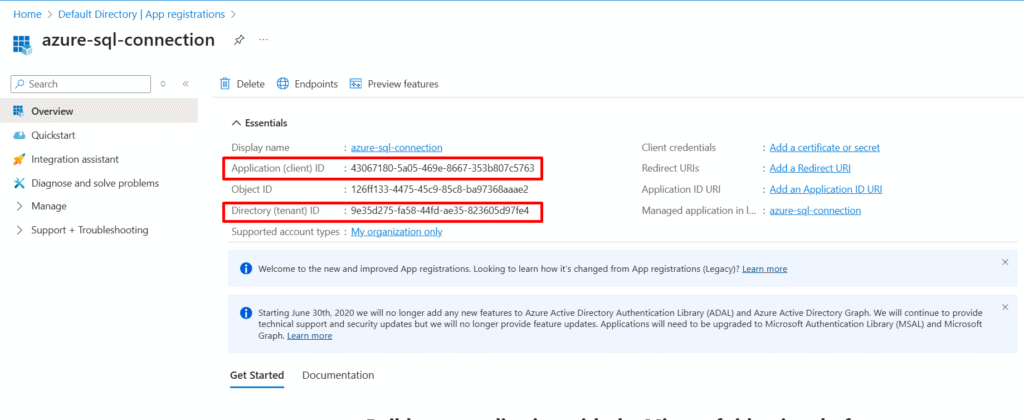

Step 2: Registering a Service Principal

To enable token-based authentication, we need a service principal. Navigate to Azure Active Directory → App Registrations → New registration. Assign a meaningful name (for example, databricks-sql-sp) and scope it to a single-tenant by selecting Accounts in the organization directory.

Once the registration is complete, Azure generates two key identifiers:

- Client (Application) ID — the unique identifier for your Service Principal, which essentially serves as its username.

- Tenant (Directory) ID — the identifier for your Azure AD tenant, which tells Azure where the authentication request should be validated.

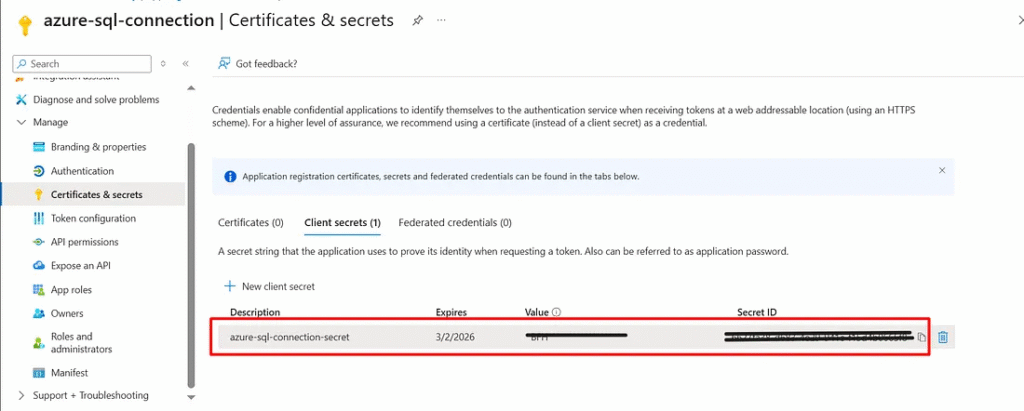

Step 3: Generating the Client Secret

A Service Principal needs a credential to authenticate. Go to App Registration → Certificates & Secrets → New client secret, give it a description, and set an expiry duration.

Azure will generate a Value, which is your Client Secret. This, combined with the Client ID and Tenant ID, allows token acquisition.

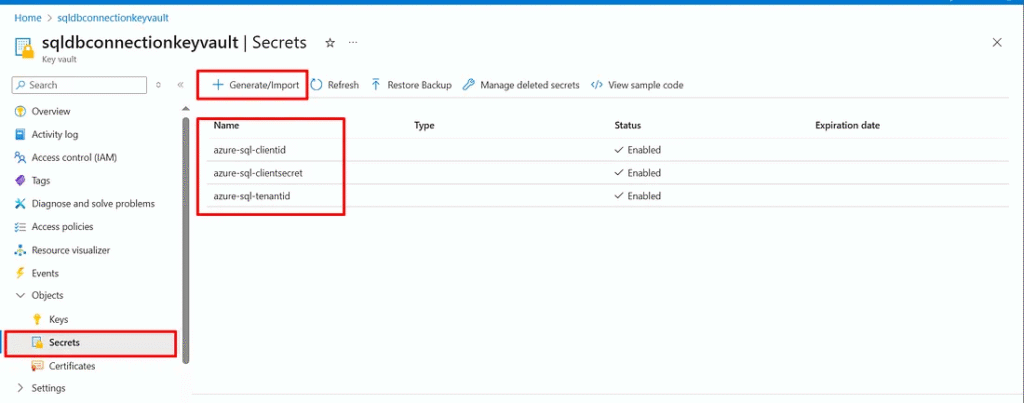

Step 4: Storing Secrets in Key Vault

With the Key Vault ready, add secrets for each of the credentials:

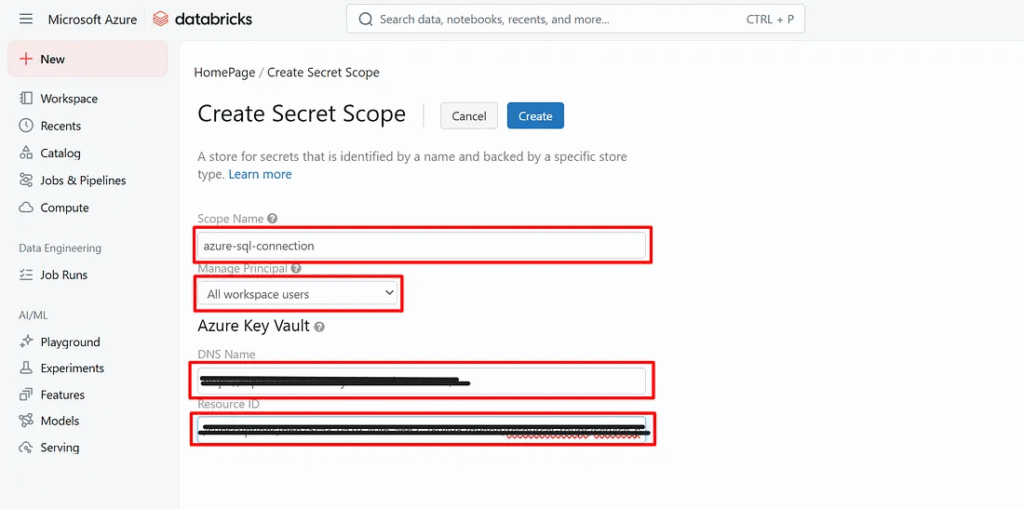

Step 5: Creating the Secret Scope in Databricks

To enable Databricks to securely access the service principal from the Azure Key Vault Secret Scope is important. A secret scope acts as a bridge between Azure Key Vault and Databricks. By configuring it with the key vault DNS name and resource ID. Databricks can fetch the credentials like client-id, client-secret, and tenant-id.

- Go to your Databricks workspace URL (e.g.,

https://<your-instance>. azuredatabricks.net). - Append

/secrets/createScopeto the URL (e.g.,https://<your-instance>.azuredatabricks.net#secrets/createScope). - Enter the Scope Name and DNS Name, and Resource from Azure Key Vault.

dbutils.secrets.get(scope="scope_name", key="client-id")

Step 6: Creating Azure AD Users and Granting Database Access

Once the Service Principal has been registered in Azure AD, it also needs to be recognized inside the target Azure SQL Database. This is achieved by creating an external user based on the Service Principal and assigning the appropriate permissions.

CREATE USER [AAD_Name] FROM EXTERNAL PROVIDER WITH DEFAULT_SCHEMA = [dbo];<br>GO<br>GRANT SELECT ON SCHEMA :: dbo TO [AAD_Name]; Step 7: Connecting from Databricks to Azure SQL DB

Once the Service Principal is registered, the key vault is configured, and the database user is created with the right permissions. The final step is to enable Databricks to connect to Azure SQL DB

pip install adal<span style="font-family: -apple-system, BlinkMacSystemFont, &quot;Segoe UI&quot;, Roboto, &quot;Helvetica Neue&quot;, Arial, &quot;Noto Sans&quot;, sans-serif, &quot;Apple Color Emoji&quot;, &quot;Segoe UI Emoji&quot;, &quot;Segoe UI Symbol&quot;, &quot;Noto Color Emoji&quot;; font-size: 1rem; text-wrap-mode: wrap;"> </span>import adal&amp;lt;br># Function to generate Access Token using Service Principal credentials&amp;lt;br>def getAccessToken(client_id, client_secret, tenant_id, database_host, database_port, database_name):&amp;lt;br> resource_app_id_url = "https://database.windows.net/"&amp;lt;br> context = adal.AuthenticationContext(f"https://login.windows.net/{tenant_id}")&amp;lt;br> token = context.acquire_token_with_client_credentials(resource_app_id_url, client_id, client_secret)&amp;lt;br> return token["accessToken"]&amp;lt;br># Function to generate Azure SQL URL&amp;lt;br>def getSqlUrl(database_host, database_port, database_name):&amp;lt;br> return f"{database_host}:{database_port};databaseName={database_name};"&amp;lt;br># Function to read Azure SQL DB using Service Principal credentials&amp;lt;br>def readSqlDbUsingSP(database_host, database_port, database_name, sql_query, client_id, client_secret, tenant_id, encrypt):&amp;lt;br> # Generating Azure SQL URL&amp;lt;br> sql_url = getSqlUrl(database_host, database_port, database_name)&amp;lt;br> # Generating Access Token&amp;lt;br> access_token = getAccessToken(client_id, client_secret, tenant_id, database_host, database_port, database_name)&amp;lt;br> host_name_in_certificate = "*.database.windows.net"&amp;lt;br> df = spark.read &amp;lt;br> .format("jdbc") &amp;lt;br> .option("url", f"jdbc:sqlserver://{sql_url};databaseName={database_name};encrypt={encrypt};trustServerCertificate=false;hostNameInCertificate={host_name_in_certificate};") &amp;lt;br> .option("query", sql_query) &amp;lt;br> .option("accessToken", access_token) &amp;lt;br> .load()&amp;lt;br> return df With this approach:

- The ADAL library handles token acquisition from Azure Active Directory.

- The access token is passed into the JDBC connector instead of a username/password

- SQL DB validates the token and grants access as per the external user we created

Conclusion:

Service Principal–based authentication offers a secure and modern alternative to traditional SQL logins when connecting Databricks to Azure SQL Database. By shifting identity management to Azure Active Directory and storing secrets in Key Vault, this approach eliminates hard-coded credentials, simplifies access governance, and aligns with enterprise security practices. While the initial setup requires careful configuration across Azure AD, SQL DB, and Databricks, the long-term benefits in terms of security, scalability, and compliance far outweigh the complexity. This design ensures your data pipelines remain both robust and enterprise-ready.