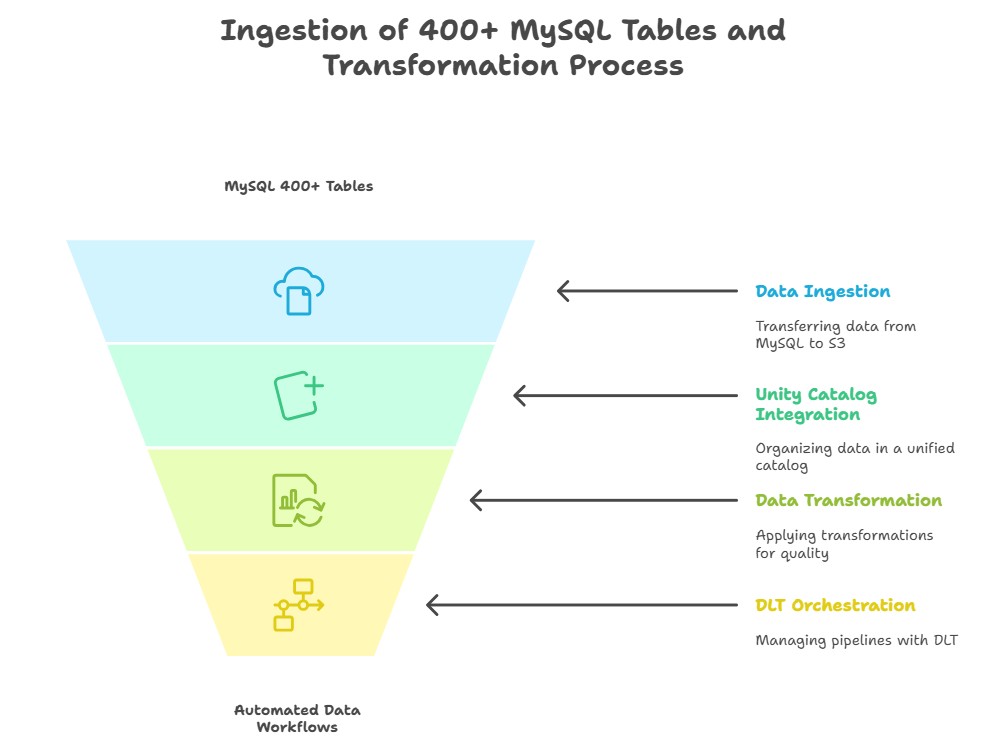

Ingesting and managing data from more than 400 MySQL tables on recurring schedules is a complex challenge. Traditional approaches often lead to pipelines that are difficult to scale, hard to maintain, and prone to failure when handling schema changes or scheduling dependencies. To address these challenges, we designed and implemented a configuration-driven ingestion framework using Delta Live Tables (DLT) in Databricks.

We designed and implemented a Delta Live Tables (DLT)–based ingestion framework in Databricks to streamline and automate this process. The solution ingests data from S3 into Unity Catalog, where transformations are applied to ensure data quality, consistency, and standardization. By applying DLT’s declarative approach, we focused on defining transformation logic while the platform handled orchestration, scaling, and monitoring. In this blog, we can see that the pipelines are scheduled and managed through Databricks Jobs, enabling seamless end-to-end automation of data workflows.

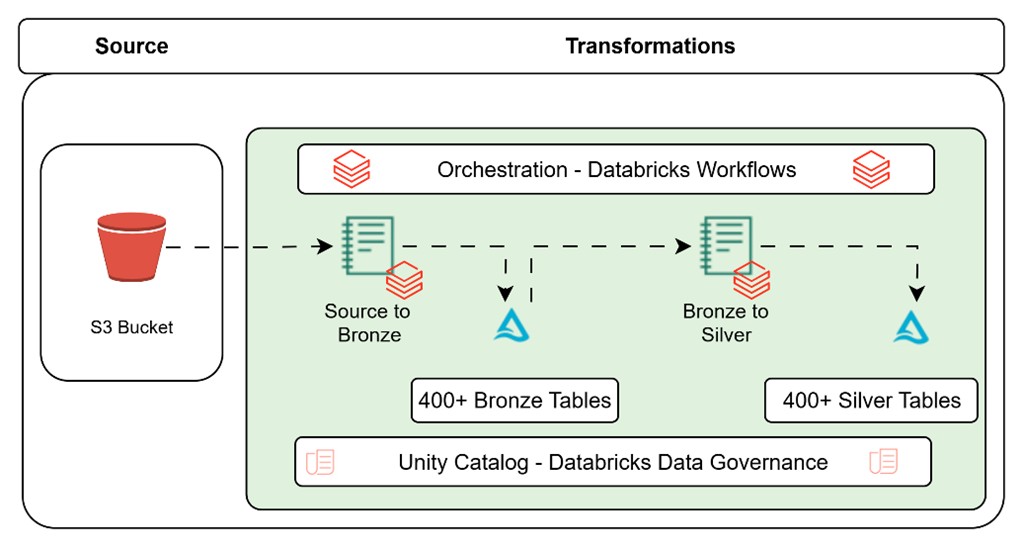

Architecture:

Source & Ingestion Flow:

Data from MySQL is first exported in Parquet format to an external S3 bucket, which is mounted in Databricks for direct access by our pipelines. From there, ingestion follows a multi-layered approach.

In the Bronze layer, raw source data is ingested into Delta tables registered in Unity Catalog. We leverage Auto Loader in streaming mode to ensure scalable ingestion with automatic schema evolution, while maintaining separate schema tracking paths for each source database to avoid conflicts. In the Silver layer, the bronze data is transformed into analytics-ready datasets through processes such as standardization, enrichment, cleansing, and application of business rules. These curated datasets are then stored in dedicated silver schemas in Unity Catalog, making them ready for downstream analytics and reporting.

Why Delta Live Tables?

With hundreds of tables to manage, manually creating and maintaining pipelines is not practical. Delta Live Tables (DLT) addresses this challenge by offering declarative transformations, where you define what you want rather than how to run it. It provides flexibility to handle both streaming and batch workloads—streaming is typically used for bronze ingestion, while silver transformations can run in either batch or streaming mode. DLT also simplifies schema management with Auto Loader, which automatically tracks and evolves schemas. In addition, it supports data governance by tagging tables with quality levels such as Bronze and Silver.

Configuration-Driven Design:

Instead of hardcoding table logic, we use a central configuration file that defines parameters for every table.

The configuration file stores parameters for each table required to create the bronze and silver layers. Each table’s configuration is represented as a dictionary containing details like catalog name, database name, source path, and schema location, bronze and silver path. (e.g. table – buyer_order_details)

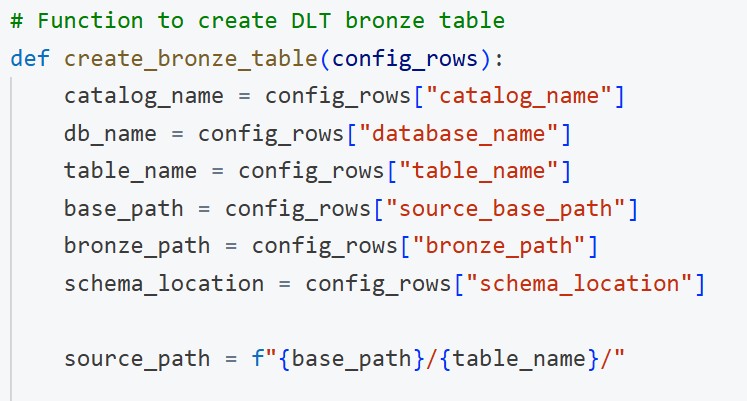

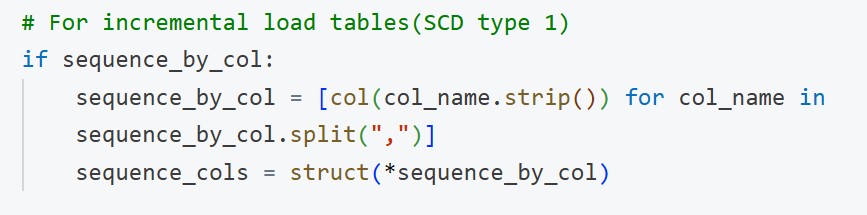

In the create_bronze_table(config_rows) function:

config_rows is a dictionary containing configuration values for a single table.

Values are extracted from config_rows using keys like:

catalog_name – defines the Unity Catalog catalog to use.

database_name – specifies the source database name.

table_name – indicates the specific table to process.

source_base_path – the base path to the source data (e.g., S3 ).

bronze_path – the destination path for storing the bronze table.

schema_location – the path where Auto Loader stores schema inference metadata.

source_path is dynamically constructed by combining base_path and table_name.

This dynamic extraction from the configuration file ensures flexibility and reusability across multiple tables without hardcoding any values.

For Silver layer:

In the create_silver_table(config_rows, table) function:

config_rows contains all the configuration values for the given table.

Key values are extracted as follows:

catalog_name: The Unity Catalog catalog to register the Silver table.

database_name: The source database from which the data originates.

table_name: Name of the specific table being processed.

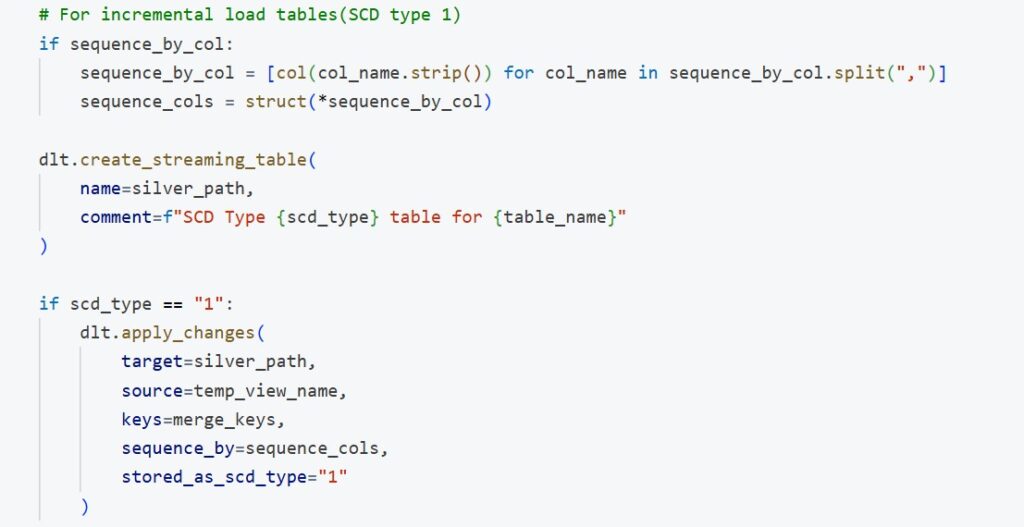

scd_type: Indicates which Slowly Changing Dimension (SCD) strategy (e.g., Type 1 or Type 2) to apply.

primary_key: A comma-separated list of primary key columns used for deduplication or merge logic.

merge_keys: A cleaned list derived from primary_key to use in merge operations.

sequence_by_col: The column used to order records for SCD logic (e.g., updated_at, dmsTimestamp).

bronze_path: The input path where the bronze table data is stored.

silver_path: The output path where the transformed silver table will be written.

This structured configuration ensures that the silver layer transformation logic remains consistent, reusable, and easy to manage across hundreds of tables.

Scheduling & Orchestration:

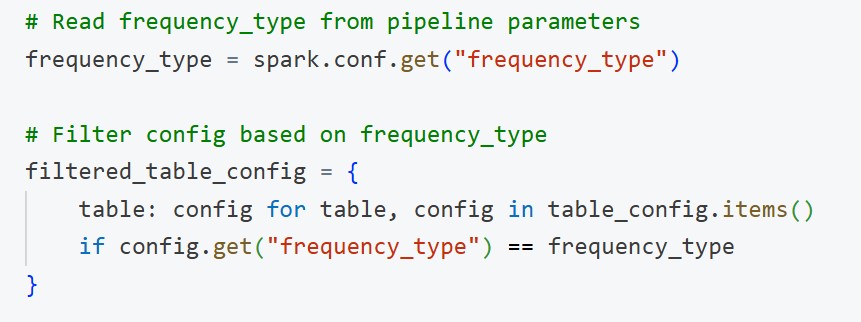

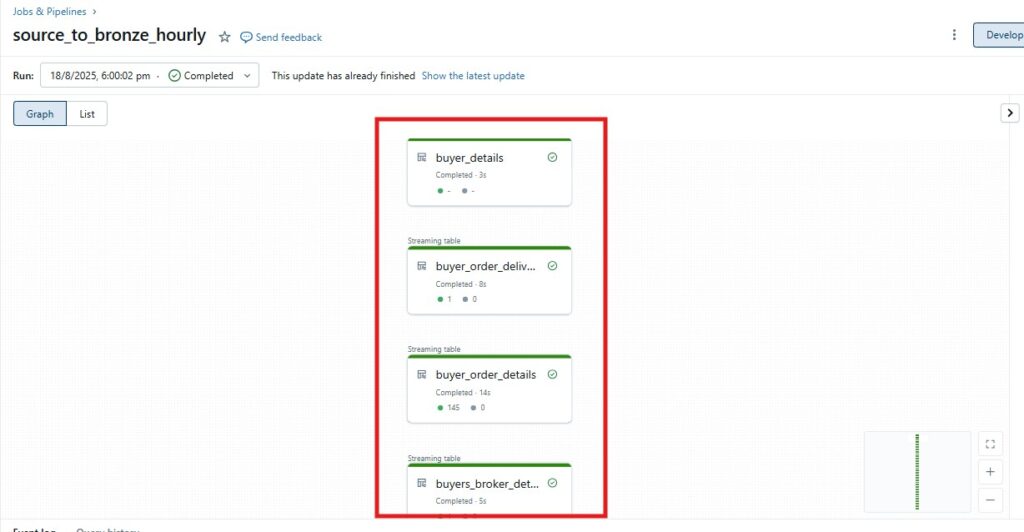

We split workloads into two pipelines per frequency type:

1. Source-to-Bronze – Reads from S3 and lands data in bronze tables.

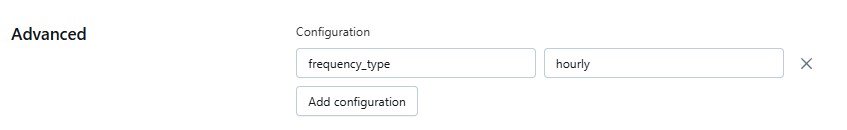

Dynamic Table Selection – Pipeline reads table_config and filters tables at runtime using frequency_type (hourly, daily, weekly, etc.) for targeted ingestion.

This allows the pipeline to only ingest the subset of tables matching the desired schedule (hourly, twice a day, daily, weekly), enabling targeted and scalable ingestion.

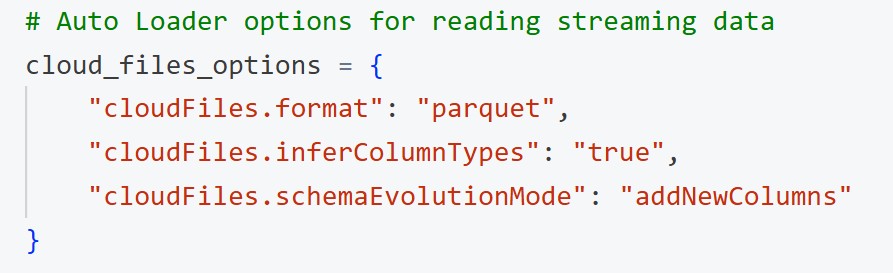

2. Streaming Ingestion with Auto Loader – Reads data from S3 in Parquet format with automatic schema inference and evolution (addNewColumns).

3. Schema Tracking per Table – Uses dedicated schema_location paths to avoid schema conflicts.

4. Custom Auto Loader Options – Creates a copy of default options per table to store schema history consistently.

5. DLT Table Creation with Properties – Sets Delta table properties like columnMapping.mode, min reader/writer versions, and quality tags.

- “delta.columnMapping.mode”: “name” allows Delta Lake to track columns by their names instead of their positions, which helps handle schema changes more reliably.

- “minReaderVersion“: “2” enforces that readers must use Delta Lake version 2 or higher to access the table, ensuring support for advanced features.

- “minWriterVersion“: “5” ensures that only writers with Delta Lake version 5 or higher can write to the table, which supports features like column mapping and schema evolution.

- Here “minReaderVersion“: “2” and “delta.minWriterVersion“: “5” also ensures to store the column names as it is from the source S3 to bronze layer.

6. Modular Multi-Table Processing – Iterates over each table in table_config and uses create_bronze_table(config) for scalable ingestion.

Bronze serves as the raw, structured data layer ready for silver transformations.

Bronze-to-Silver – Cleans and transforms bronze data into silver tables.

- Dynamic Silver Table Selection – Processes only the required bronze tables based on frequency_type for targeted transformations, the same as the bronze layer.

- Read from Bronze Layer – Uses curated raw data from bronze tables as the transformation source.

- Column Naming Standardization – Converts column names to a consistent format for downstream usability.

- Data Quality Enhancements – Removes unnecessary operational columns to keep datasets clean.

Temporary View Creation:

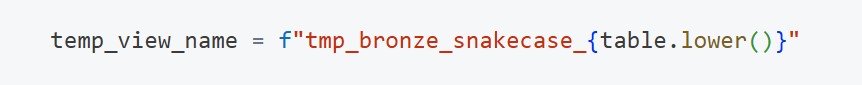

- The temporary view is used to apply column-level transformations, specifically converting column names to snake_case. This view holds the transformed data and serves as the input to the next processing step.

- A dynamic name is created for a temporary view by combining a fixed prefix with the lowercase table name

This ensures each table has a unique temp view during transformation.

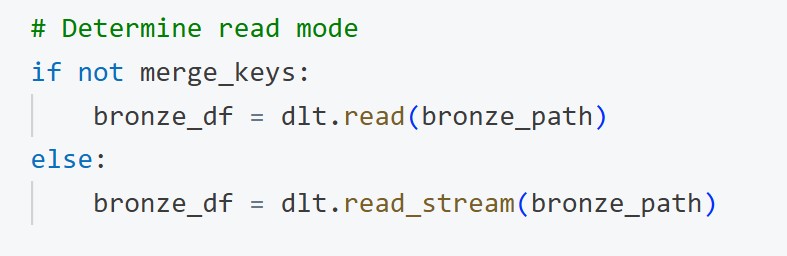

- This logic determines how the bronze table is read:

If there are no merge keys, it’s a full load, so it uses dlt.read() (static batch read).

- If merge keys are present, it’s an incremental load, so it uses dlt.read_stream() (streaming read).

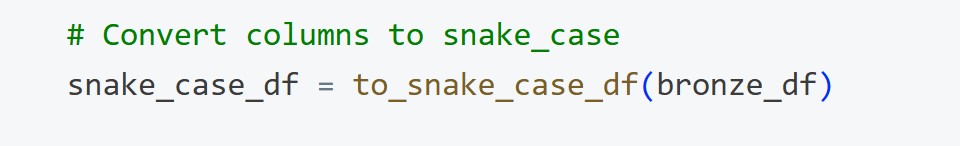

- The DataFrame from the Bronze layer is passed through a function (to_snake_case_df) that renames all column names to snake_case format.

- This standardizes column naming before moving to the silver layer.

- The transformed DataFrame (snake_case_df) is registered as a temporary view using the name created earlier.

- This is necessary because:

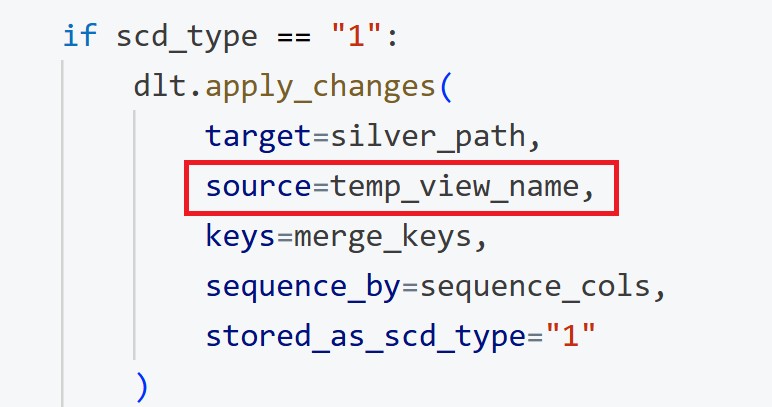

- The dlt.apply_changes() function (used for SCD handling) requires a table or view name for the sequence_by parameter.

- You cannot pass a raw DataFrame directly into sequence_by.

- By creating a temp view, you can reference it by name in SCD logic like this:

- Since the sequence_by parameter in apply_changes() requires a table or view name (not a DataFrame), the transformed DataFrame is registered as a temporary view and referenced by name during the SCD operation.

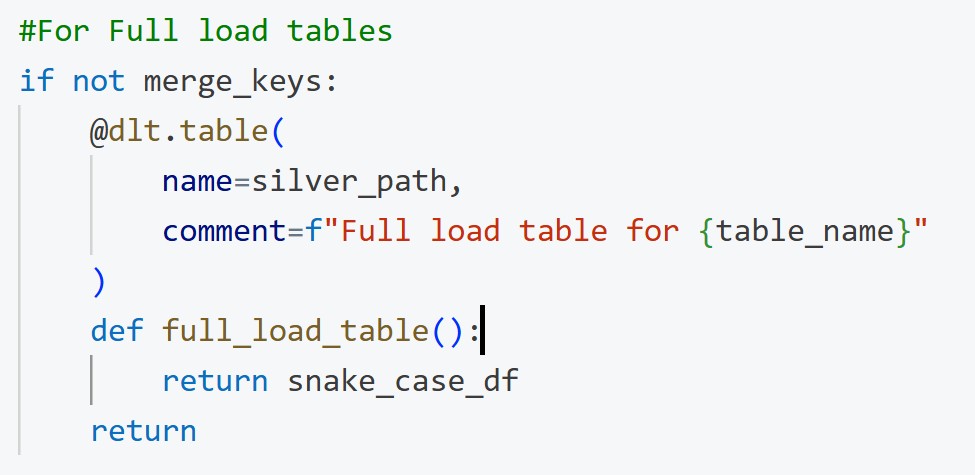

Full Load vs. Incremental Load Detection

Tables without a defined primary key are treated as full load tables and are written directly to the silver layer without change tracking.

- Tables with primary keys are processed incrementally using change data capture (CDC) logic.

SCD1 Implementation – Keeps only the latest record per primary key for an up-to-date dataset.

Modular Multi-Table Processing – Iterates over each table in table_config and uses create_silver_table(config) for scalable ingestion.

Dedicated Silver Schemas in Unity Catalog – Stores transformed datasets in Unity Catalog for secure, governed access.

Conclusion:

By combining Delta Live Tables (DLT) with a configuration-driven architecture, we successfully addressed the challenge of running pipelines for 400+ MySQL tables without operational chaos. This approach provides scalability, as adding a new table requires only a configuration entry, and maintainability, since centralized logic reduces the need for widespread changes when requirements evolve. It also improves resilience, with Auto Loader handling schema drift gracefully, and enhances governance by keeping all datasets in Unity Catalog for secure and audited access. The key to achieving this lies in keeping transformation logic generic, driving table-specific details from configuration, and orchestrating workloads cleanly with dependencies. Together, these practices ensure reliability today while leaving room for future expansion.