Introduction:

In the world of big data, real-time data processing is becoming a necessity rather than a luxury. Businesses today need insights as soon as the data is generated. In this blog, we will walk through building a real-time streaming pipeline using Kafka (as a producer), Azure Event Hub (as the broker), and Delta Live Tables (DLT) in Databricks (as the consumer). We’ll also demonstrate how to handle data durability and record updates efficiently.

What is Streaming Data?

Streaming data refers to continuous data generated by various sources like IoT devices, apps, transactions, or logs. Unlike batch data, streaming data is processed in real time or near real time, which enables quicker decision-making.

Examples of streaming data:

- User activity logs

- Financial transactions

- Sensor readings

- E-commerce order flows

Core Components Explained

Kafka (Producer):

Kafka is a distributed streaming platform that lets you publish and subscribe to streams of records. It acts as a highly scalable and fault-tolerant messaging system. In this blog, we used the confluent_kafka Python library to simulate a Kafka producer that sends data to Azure Event Hub.

Internal Working of Kafka Producer:

When you call producer.produce(), Kafka’s client library buffers the message in memory and batches multiple messages to improve throughput before sending them to the broker. The flush() method blocks until every buffered message has either been delivered or has failed. Durability depends on the acknowledgement setting: with acks=all, the broker acknowledges a message only after it has been written to all in-sync replicas, which protects against data loss if a single broker fails.
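The buffering behaviour described above can be pictured with a small in-memory model. This is a simplified sketch, not the confluent_kafka implementation: the class name `BufferedProducer`, the `send_batch` callable, and the size-only batching rule are assumptions made for illustration (the real client also sends on a timer and from a background thread).

```python
class BufferedProducer:
    """Toy model of a producer that batches messages before sending them."""

    def __init__(self, send_batch, batch_size=3):
        self._send_batch = send_batch    # hypothetical stand-in for the network send
        self._batch_size = batch_size
        self._buffer = []

    def produce(self, message):
        # Messages are only buffered here; nothing is sent until a batch is full.
        self._buffer.append(message)
        if len(self._buffer) >= self._batch_size:
            self._drain()

    def flush(self):
        # Force out whatever is still waiting in the buffer.
        if self._buffer:
            self._drain()

    def _drain(self):
        batch, self._buffer = self._buffer, []
        self._send_batch(batch)


sent_batches = []
producer = BufferedProducer(sent_batches.append, batch_size=3)
for reading in range(7):
    producer.produce(reading)
producer.flush()  # without this call, the last partial batch would stay in memory
```

The point of the model is the final line: a script that exits without calling flush() can lose the messages still sitting in the buffer.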

Azure Event Hub (Streaming Broker):

Event Hub is a fully managed, real-time data ingestion service from Azure that can receive and process millions of events per second. It also exposes a Kafka-compatible endpoint, and an individual event hub plays roughly the role of a Kafka topic. It’s ideal for streaming scenarios and integrates well with Azure and Databricks.

- Retention period: Default is 1 day; it can be extended up to 7 days on the Standard tier and up to 90 days on the Premium and Dedicated tiers.

- Use case: Acts as an intermediate buffer that temporarily stores the messages sent by the Kafka producer.

System Internals of Azure Event Hub:

Event Hub stores incoming messages in partitions for parallel processing. Internally, it uses a distributed commit log like Kafka. Each partition has its own sequence of offsets, which consumers checkpoint so they can resume from the right position after a failure. Event Hub itself delivers messages at least once, so exactly-once results depend on the consumer being idempotent or transactional. Event Hub replicates data within the Azure region for high durability. The Capture feature writes raw streaming data automatically to Azure Blob Storage or ADLS, which provides long-term retention beyond the Event Hub retention window.

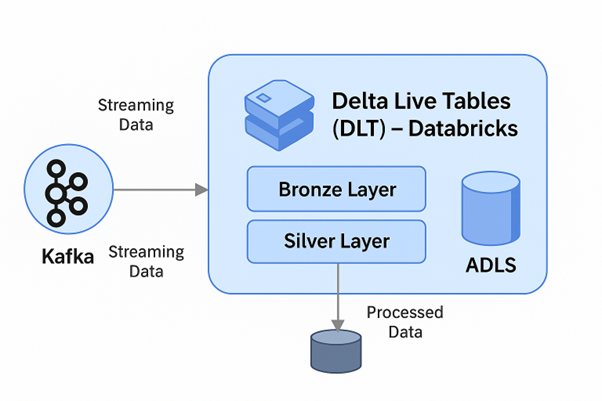

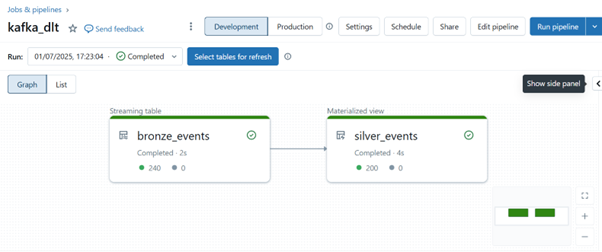
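To make the partition and offset model concrete, here is a minimal in-memory sketch. It is not how Event Hub is implemented: `PartitionedLog`, `consume`, and the use of CRC32 to map a key to a partition are all assumptions for illustration. The consumer saves its checkpoint only after handling a message, which is what gives at-least-once behaviour.

```python
import zlib


class PartitionedLog:
    """Toy commit log: each partition is an append-only list with its own offsets."""

    def __init__(self, partition_count):
        self.partitions = [[] for _ in range(partition_count)]

    def append(self, key, value):
        # Same key -> same partition, so per-key ordering is preserved.
        # CRC32 is an illustrative choice, not the service's actual hash.
        pid = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        self.partitions[pid].append(value)
        return pid, len(self.partitions[pid]) - 1

    def read(self, pid, from_offset):
        return list(enumerate(self.partitions[pid][from_offset:], start=from_offset))


def consume(log, checkpoints, handler):
    """Process new messages per partition, checkpointing after each one."""
    for pid in range(len(log.partitions)):
        for offset, value in log.read(pid, checkpoints.get(pid, 0)):
            handler(pid, offset, value)
            checkpoints[pid] = offset + 1  # next offset to read after a restart


log = PartitionedLog(partition_count=2)
first = log.append("sensor-a", "reading-1")
second = log.append("sensor-a", "reading-2")

seen = []
checkpoints = {}
consume(log, checkpoints, lambda pid, offset, value: seen.append(value))
consume(log, checkpoints, lambda pid, offset, value: seen.append(value))  # nothing new
```

If the handler crashed before the checkpoint update, the same message would be read again on restart, so the handler should be safe to run twice.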

Delta Live Tables (DLT) – Databricks (Consumer):

DLT is a framework for building reliable, automated, and production-ready streaming pipelines. It simplifies ingestion, transformation, and orchestration of data workflows.

- Bronze Layer: Ingests raw streaming data from Event Hub.

- Silver Layer: Transforms and deduplicates/updates the data.

Internal Working of Delta Live Tables (DLT):

DLT runs on top of Apache Spark Structured Streaming. It continuously reads data in micro-batches and tracks stream progress using checkpoints, while every write is committed through the Delta transaction log. For deduplication and updates, DLT manages metadata about previously seen records. Transactions are atomic, meaning partially written batches won’t corrupt downstream tables. If the pipeline fails, DLT can resume from where it left off using the checkpoint and the transaction log.

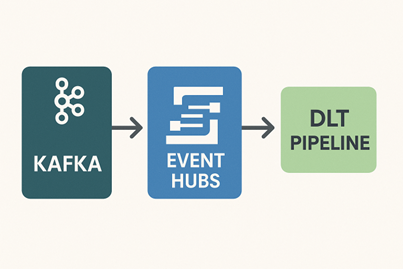
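The recovery behaviour described above can be sketched with a toy model. This is a deliberately simplified illustration, not DLT or Spark code: `IdempotentSink`, `run_micro_batches`, and the dictionary used as a checkpoint are hypothetical. The idea it shows is that a batch ID recorded with each write lets a replayed batch be skipped after a crash.

```python
class IdempotentSink:
    """Toy table that remembers which batch IDs it has already committed."""

    def __init__(self):
        self.rows = []
        self.committed_batches = set()

    def write(self, batch_id, rows):
        if batch_id in self.committed_batches:
            return  # replay after a crash: already written, so skip
        self.rows.extend(rows)
        self.committed_batches.add(batch_id)


def run_micro_batches(source, sink, checkpoint, batch_size, crash_after_batch=None):
    """Process the source in fixed-size batches, resuming from the checkpoint."""
    while True:
        batch_id = checkpoint["last_committed"] + 1
        start = batch_id * batch_size
        rows = source[start:start + batch_size]
        if not rows:
            return
        sink.write(batch_id, rows)
        if crash_after_batch == batch_id:
            raise RuntimeError("simulated crash before the checkpoint was updated")
        checkpoint["last_committed"] = batch_id


source = list(range(7))
sink = IdempotentSink()
checkpoint = {"last_committed": -1}

try:
    run_micro_batches(source, sink, checkpoint, batch_size=3, crash_after_batch=1)
except RuntimeError:
    pass  # the pipeline "restarts" below with the same sink and checkpoint

run_micro_batches(source, sink, checkpoint, batch_size=3)
```

After the restart, batch 1 is replayed but not written twice, so the sink ends up with each source row exactly once.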

Architecture:

Steps to Ingest Streaming Data:

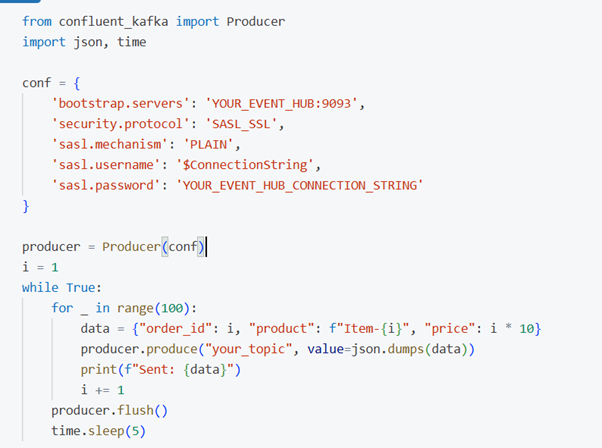

Step 1: Create Kafka Producer (local Python script):

The producer generates sample data and sends it to Event Hub.

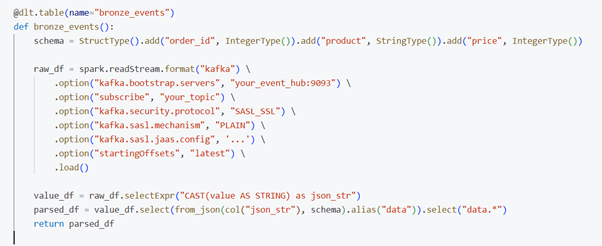
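For readers who cannot see the screenshot, a producer along these lines might look like the sketch below. It assumes the confluent_kafka package is installed and that Event Hub is reached through its Kafka endpoint on port 9093 with the connection string as the SASL password. The helper names, the order event fields, and the namespace and event hub names are hypothetical and are not taken from the original code.

```python
import json
import time


def eventhub_producer_config(namespace, connection_string):
    """Kafka client settings for the Event Hub Kafka endpoint."""
    return {
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        "sasl.username": "$ConnectionString",  # literal value, not a placeholder
        "sasl.password": connection_string,
        "acks": "all",
    }


def make_order_event(order_id, status):
    """Hypothetical sample record; the real payload may differ."""
    return {"order_id": order_id, "status": status, "event_time": time.time()}


def on_delivery(err, msg):
    # Called once per message from poll() or flush().
    if err is not None:
        print(f"Delivery failed: {err}")
    else:
        print(f"Delivered to {msg.topic()} [{msg.partition()}] at offset {msg.offset()}")


def send_events(config, event_hub, events):
    # Imported here so the helpers above work without the package installed.
    from confluent_kafka import Producer

    producer = Producer(config)
    for event in events:
        producer.produce(
            event_hub,
            key=str(event["order_id"]),
            value=json.dumps(event).encode("utf-8"),
            on_delivery=on_delivery,
        )
        producer.poll(0)  # serve delivery callbacks without blocking
    producer.flush()      # wait until every buffered message is delivered
```

A call such as `send_events(eventhub_producer_config("my-namespace", conn_str), "orders", [make_order_event(1, "CREATED")])` would send one event, where `conn_str` is the namespace connection string from the Azure Portal.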

Step 2: Bronze Table (DLT Code in Databricks):

This step reads the data from Event Hub into the Bronze streaming table.

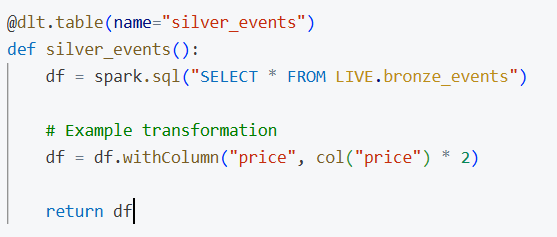
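As a text alternative to the screenshot, a bronze table could be defined roughly as follows. This is a sketch under assumptions: it reads Event Hub through Spark's Kafka source, and the table name `orders_bronze`, the namespace and event hub names, and the `eventhub.connection_string` pipeline setting are hypothetical. The `dlt` module and the `spark` session are only available inside a Databricks DLT pipeline, so the table definition is skipped elsewhere.

```python
def eventhub_kafka_options(namespace, event_hub, connection_string):
    """Options for Spark's Kafka source pointed at the Event Hub Kafka endpoint."""
    jaas = (
        "kafkashaded.org.apache.kafka.common.security.plain.PlainLoginModule "
        f'required username="$ConnectionString" password="{connection_string}";'
    )
    return {
        "kafka.bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        "subscribe": event_hub,
        "kafka.security.protocol": "SASL_SSL",
        "kafka.sasl.mechanism": "PLAIN",
        "kafka.sasl.jaas.config": jaas,
        "startingOffsets": "earliest",
    }


try:
    import dlt  # Databricks DLT module (unrelated to the PyPI package of the same name)
except ImportError:
    dlt = None

if dlt is not None and hasattr(dlt, "table"):

    @dlt.table(name="orders_bronze", comment="Raw events read from Event Hub")
    def orders_bronze():
        # `spark` is provided by the pipeline runtime; the config key is an assumption.
        options = eventhub_kafka_options(
            "my-namespace",
            "orders",
            spark.conf.get("eventhub.connection_string"),
        )
        return spark.readStream.format("kafka").options(**options).load()
```

The bronze table keeps the Kafka columns (key, value, partition, offset, timestamp) untouched; parsing the payload is left to the next layer.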

Step 3: Silver Table (+Transformation):
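One possible shape for this step is sketched below. It assumes a bronze streaming table named `orders_bronze` whose `value` column holds JSON with `order_id`, `status`, and `event_time` fields; all of these names, and the choice of `dlt.apply_changes` with SCD type 1 to keep only the latest version of each record, are assumptions rather than the original pipeline. The small `latest_by_key` function states the intended result in plain Python and can be used in unit tests.

```python
def latest_by_key(events, key="order_id", sequence="event_time"):
    """Reference behaviour: keep the newest event for each key."""
    latest = {}
    for event in events:
        current = latest.get(event[key])
        if current is None or event[sequence] >= current[sequence]:
            latest[event[key]] = event
    return latest


try:
    import dlt  # only available inside a Databricks DLT pipeline
except ImportError:
    dlt = None

if dlt is not None and hasattr(dlt, "apply_changes"):
    from pyspark.sql.functions import col, from_json
    from pyspark.sql.types import (
        DoubleType, LongType, StringType, StructField, StructType,
    )

    # Hypothetical payload schema; adjust to the real message format.
    ORDER_SCHEMA = StructType([
        StructField("order_id", LongType()),
        StructField("status", StringType()),
        StructField("event_time", DoubleType()),
    ])

    @dlt.view(name="orders_parsed")
    def orders_parsed():
        # Decode the Kafka value bytes and expand the JSON into columns.
        return (
            dlt.read_stream("orders_bronze")
            .select(from_json(col("value").cast("string"), ORDER_SCHEMA).alias("event"))
            .select("event.*")
        )

    dlt.create_streaming_table("orders_silver")

    # Upsert by order_id, using event_time to decide which version wins.
    dlt.apply_changes(
        target="orders_silver",
        source="orders_parsed",
        keys=["order_id"],
        sequence_by=col("event_time"),
        stored_as_scd_type=1,
    )
```

With SCD type 1, a late-arriving older event does not overwrite a newer one, which is the behaviour `latest_by_key` mirrors.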

Connect Event Hub to ADLS:

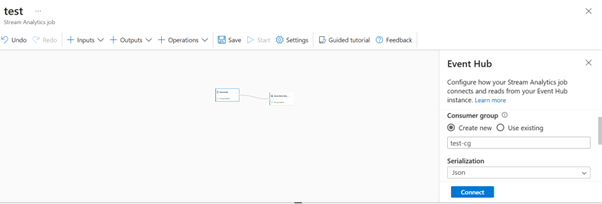

To connect Event Hub to ADLS (Azure Data Lake Storage) and store the streaming data, follow these steps using the Event Hubs Capture feature:

- Go to your Event Hub in Azure Portal.

- Open the Capture tab.

- Turn Capture on and choose the output format.

- Choose your ADLS Gen2 storage account and container.

- Click Save.
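Once Capture is enabled, files land in the container under a time-based folder layout. The sketch below assumes the default naming convention (`{Namespace}/{EventHub}/{PartitionId}/{Year}/{Month}/{Day}/{Hour}/{Minute}/{Second}`) and Avro output; the function names and the storage account, container, namespace, and event hub values are hypothetical. The Spark read is kept in a separate function because it needs a Spark session with Avro support, such as a Databricks cluster.

```python
from datetime import datetime, timezone


def capture_blob_path(namespace, event_hub, partition_id, window_start):
    """Relative path of one Capture file under the default naming convention."""
    return (
        f"{namespace}/{event_hub}/{partition_id}/"
        f"{window_start:%Y/%m/%d/%H/%M/%S}.avro"
    )


def capture_glob(account, container, namespace, event_hub):
    """ADLS Gen2 glob matching every captured file for one event hub."""
    return (
        f"abfss://{container}@{account}.dfs.core.windows.net/"
        f"{namespace}/{event_hub}/*/*/*/*/*/*/*.avro"
    )


def read_captured_events(spark, glob_path):
    """Load captured files and expose the message body as text."""
    from pyspark.sql.functions import col  # imported lazily; needs pyspark

    return (
        spark.read.format("avro")
        .load(glob_path)
        .select(col("EnqueuedTimeUtc"), col("Body").cast("string").alias("body"))
    )


example_path = capture_blob_path(
    "my-namespace", "orders", 0,
    datetime(2024, 1, 15, 9, 30, 0, tzinfo=timezone.utc),
)
```

If a custom file name format was chosen in the Capture tab, the path helpers need to be adjusted to match it.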

Conclusion:

By integrating a Kafka producer, Event Hub, and DLT, we created a pipeline that ingests and processes streaming data in near real time. Event Hub provides easy connectivity and scalability, while capturing the data to ADLS keeps it available for downstream analytics after the Event Hub retention window has passed. This architecture suits modern data engineering needs with flexibility and performance.