Remember when asking Siri to set a timer felt like magic? Today, AI systems are autonomously managing entire business workflows, making strategic decisions, and executing complex multi-step processes without human intervention. The global AI agent market is projected to explode from $5.1 billion in 2024 to $47.1 billion by 2030—but what’s driving this seismic shift from simple chatbots to intelligent agents?

What are LLM-Based Chatbots?

Large Language Model (LLM) chatbots are conversational AI systems that generate human-like text responses based on user inputs. They excel at understanding context, answering questions, and maintaining dialogue, but remain fundamentally reactive—they respond to queries without taking independent action.

What are AI Agents?

AI agents are autonomous systems that can perceive their environment, make decisions, and take actions to achieve specific goals. Unlike chatbots, they possess reasoning capabilities, memory, planning abilities, and can interact with external tools and systems to execute complex workflows.

Key Architectural Differences:

| Feature | LLM Chatbots | AI Agents |
| --- | --- | --- |
| Interaction Mode | Reactive (Response-based) | Proactive (Action-based) |
| Capabilities | Text generation, Q&A | Task execution, workflow management |
| Tool Integration | Limited/None | Extensive API and system integration |
| Memory | Session-based only | Persistent, contextual memory |
| Decision Making | Query-response patterns | Multi-step reasoning and planning |
| Autonomy Level | Human-guided | Semi to fully autonomous |

Core Components of AI Agents:

- Perception: Ability to understand and interpret the environment/inputs

- Reasoning Engine: Advanced problem-solving and decision-making capabilities

- Memory Systems: Both short-term (working memory) and long-term (knowledge retention)

- Action Execution: Integration with external tools, APIs, and systems

- Planning Module: Multi-step task breakdown and execution sequencing

Why This Topic Matters (Context Setting)

Who should read this? This transformation is crucial for CTOs, Data Engineers, Product Managers, Business Leaders, and AI/ML practitioners who are planning enterprise AI strategies or considering upgrading from basic chatbot implementations.

Industry Relevance:

- BFSI: From customer service bots to autonomous fraud detection and compliance agents

- Manufacturing: Predictive maintenance agents managing entire production workflows

- Retail: Inventory management agents optimizing supply chains in real-time

- Healthcare: Diagnostic support agents coordinating patient care workflows

Current Challenges Without AI Agents: Traditional chatbots create operational bottlenecks because they require constant human intervention for task completion. Organizations report that 67% of chatbot interactions still require human handoff, leading to inefficient workflows and frustrated users. Additionally, chatbots cannot adapt to changing business processes or learn from execution patterns, creating scalability limitations as enterprises grow.

Practical Implementation (Code + Hands-on Guide)

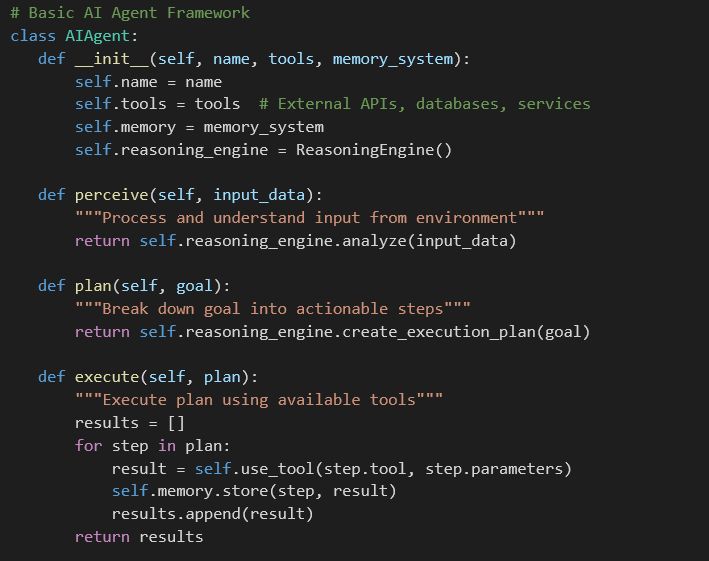

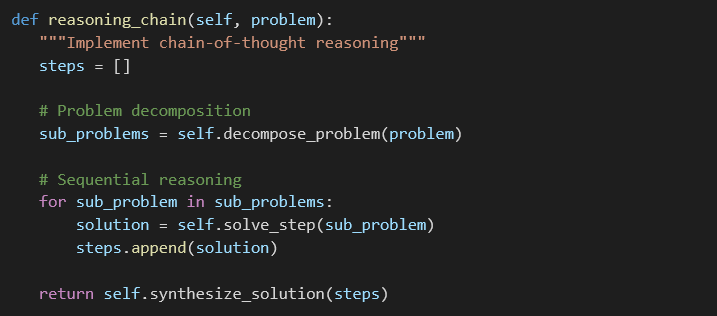

Step 1: Agent Architecture Design
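
One way to sketch this step, under deliberately simplified assumptions: the class below wires the core components (perception, reasoning and planning, memory, action execution) into a single loop. The `Agent` class, the `lookup` and `notify` tools, and the keyword-based `plan` method are hypothetical stand-ins for illustration; in a real system the planning step would typically call an LLM rather than match keywords.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """Toy agent: perceive -> plan -> act, with a running memory log."""
    tools: dict[str, Callable[[str], str]]            # action execution
    memory: list[str] = field(default_factory=list)   # short-term memory

    def perceive(self, raw_input: str) -> str:
        # Perception: normalize the incoming request.
        return raw_input.strip().lower()

    def plan(self, goal: str) -> list[tuple[str, str]]:
        # Planning/reasoning stand-in (assumption): split the goal on
        # " then " and pick the first tool whose name appears in each part.
        steps = []
        for part in goal.split(" then "):
            for name in self.tools:
                if name in part:
                    steps.append((name, part))
                    break
        return steps

    def act(self, tool_name: str, argument: str) -> str:
        # Action execution: call the tool and record the outcome in memory.
        result = self.tools[tool_name](argument)
        self.memory.append(f"{tool_name}: {result}")
        return result

    def run(self, raw_input: str) -> list[str]:
        goal = self.perceive(raw_input)
        return [self.act(name, arg) for name, arg in self.plan(goal)]


# Hypothetical tools standing in for real API integrations.
def lookup(argument: str) -> str:
    return f"looked up '{argument}'"


def notify(argument: str) -> str:
    return f"sent notification for '{argument}'"


agent = Agent(tools={"lookup": lookup, "notify": notify})
results = agent.run("  Lookup order 42 then notify the customer ")
```

Keeping each component behind its own method means the keyword planner can later be swapped for a model call without touching perception, memory, or tool execution.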

Step 2: Tool Integration
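
A minimal sketch of tool integration, assuming a simple registry pattern rather than any particular framework's API: functions are registered under a name and description, and the agent invokes them through a JSON tool call. The `tool` decorator, the `{"tool": ..., "args": {...}}` call format, and the `get_inventory` and `create_order` functions are all hypothetical.

```python
import json
from typing import Any, Callable

TOOL_REGISTRY: dict[str, dict[str, Any]] = {}


def tool(name: str, description: str) -> Callable:
    """Register a function so the agent can discover and call it by name."""
    def decorator(func: Callable) -> Callable:
        TOOL_REGISTRY[name] = {"description": description, "func": func}
        return func
    return decorator


@tool("get_inventory", "Return units in stock for a SKU.")
def get_inventory(sku: str) -> int:
    # Hypothetical in-memory data standing in for a real inventory API.
    stock = {"SKU-1": 12, "SKU-2": 0}
    return stock.get(sku, 0)


@tool("create_order", "Create a purchase order for a SKU and quantity.")
def create_order(sku: str, quantity: int) -> dict:
    # Hypothetical: a real implementation would call a procurement system.
    return {"sku": sku, "quantity": quantity, "status": "created"}


def describe_tools() -> str:
    """Render the registry as text that could be placed in a model prompt."""
    return "\n".join(
        f"- {name}: {entry['description']}"
        for name, entry in sorted(TOOL_REGISTRY.items())
    )


def dispatch(tool_call_json: str) -> Any:
    """Execute a tool call of the assumed form {"tool": ..., "args": {...}}."""
    call = json.loads(tool_call_json)
    entry = TOOL_REGISTRY.get(call["tool"])
    if entry is None:
        raise KeyError(f"Unknown tool: {call['tool']}")
    return entry["func"](**call.get("args", {}))
```

For example, `dispatch('{"tool": "get_inventory", "args": {"sku": "SKU-1"}}')` looks up the registered function and calls it with the parsed arguments. Rejecting unknown tool names at dispatch time keeps the model from invoking anything that was not explicitly registered.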

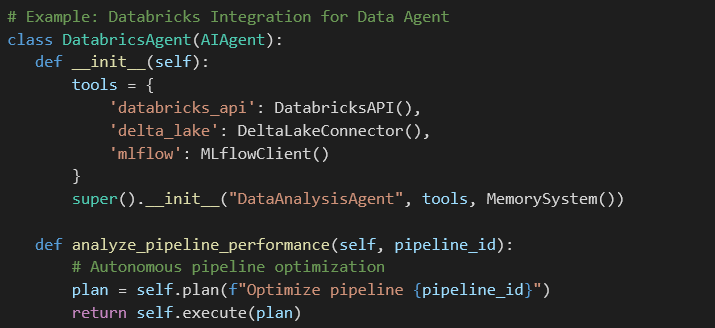

Step 3: Chain-of-Thought Implementation
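
Chain-of-thought prompting can be sketched as a prompt that asks the model to reason in numbered steps before committing to an answer, plus a parser that separates the reasoning from the final line. The template wording, the `FINAL ANSWER:` marker, and the `fake_llm` function (a canned response standing in for a real model call) are assumptions made for illustration.

```python
COT_TEMPLATE = (
    "You are an operations agent.\n"
    "Task: {task}\n"
    "Think through the problem step by step, numbering each step.\n"
    "Finish with a single line starting with 'FINAL ANSWER:'."
)


def build_cot_prompt(task: str) -> str:
    """Fill the chain-of-thought template with a concrete task."""
    return COT_TEMPLATE.format(task=task)


def parse_cot_response(text: str) -> tuple[list[str], str]:
    """Split a model response into reasoning steps and the final answer."""
    steps: list[str] = []
    answer = ""
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if line.upper().startswith("FINAL ANSWER:"):
            answer = line.split(":", 1)[1].strip()
        else:
            steps.append(line)
    return steps, answer


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real model call; returns a canned response.
    return (
        "1. Current stock is 12 units.\n"
        "2. Forecast demand is 30 units.\n"
        "3. Shortfall is 30 - 12 = 18 units.\n"
        "FINAL ANSWER: Order 18 units"
    )


prompt = build_cot_prompt("Decide the reorder quantity for SKU-1.")
steps, answer = parse_cot_response(fake_llm(prompt))
```

Storing `steps` alongside `answer` gives reviewers a reasoning trace to audit, which supports the explainability and logging practices discussed later in the article.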

Performance & Best Practices

Optimization Strategies:

Do’s & Don’ts for AI Agent Implementation:

| Do’s | Don’ts |
| --- | --- |
| Implement robust error handling and fallback mechanisms | Deploy agents without human oversight initially |
| Use a modular tool architecture for flexibility | Hard-code business logic into agent reasoning |
| Implement comprehensive logging and monitoring | Ignore agent decision explainability |
| Start with narrow, well-defined use cases | Attempt to solve all problems with one agent |
| Establish clear success metrics and KPIs | Deploy without proper security controls |
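
The first and third Do’s (error handling with fallbacks, plus logging) could look roughly like the wrapper below. This is a sketch under stated assumptions: `call_with_fallback` is a hypothetical helper, and the retry count and linear backoff are illustrative defaults, not recommended values.

```python
import logging
import time
from typing import Callable, TypeVar

T = TypeVar("T")
logger = logging.getLogger("agent")


def call_with_fallback(
    primary: Callable[[], T],
    fallback: Callable[[], T],
    retries: int = 2,
    delay_seconds: float = 0.0,
) -> T:
    """Try `primary` up to `retries` times, then return `fallback()`."""
    for attempt in range(1, retries + 1):
        try:
            return primary()
        except Exception as exc:
            # Log every failure so agent behavior can be audited later.
            logger.warning("attempt %d failed: %s", attempt, exc)
            if attempt < retries:
                # Linear backoff between attempts (illustrative choice).
                time.sleep(delay_seconds * attempt)
    logger.error("primary action exhausted retries; using fallback")
    return fallback()
```

A typical fallback is a safe default such as handing the task to a human queue, so a failing tool degrades the workflow instead of halting it.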

Cost Optimization Tips:

- Token Efficiency: Use prompt optimization techniques to reduce LLM API costs by 40-60%

- Caching Strategies: Implement intelligent caching for repeated reasoning patterns

- Model Selection: Use smaller models for routine tasks, larger models for complex reasoning

- Batch Processing: Group similar tasks to optimize computational resources
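
Two of these tips, caching and model selection, can be sketched together. The model names, the keyword heuristic in `choose_model`, and the `cached_completion` function (which fabricates a response string instead of calling a real API) are all hypothetical; a production router would more likely use a classifier or observed task difficulty.

```python
from functools import lru_cache

SMALL_MODEL = "small-model"   # hypothetical model identifiers
LARGE_MODEL = "large-model"

# Counts simulated LLM calls so the effect of caching is observable.
CALL_COUNT = {"llm": 0}


def choose_model(prompt: str, word_threshold: int = 50) -> str:
    """Route long or reasoning-heavy prompts to the larger model."""
    complex_markers = ("plan", "analyze", "compare", "why")
    lowered = prompt.lower()
    is_long = len(lowered.split()) > word_threshold
    is_complex = any(marker in lowered for marker in complex_markers)
    return LARGE_MODEL if (is_long or is_complex) else SMALL_MODEL


@lru_cache(maxsize=1024)
def cached_completion(model: str, prompt: str) -> str:
    # Stand-in for a paid API call; runs only on a cache miss.
    CALL_COUNT["llm"] += 1
    return f"[{model}] response to: {prompt}"


def answer(prompt: str) -> str:
    # Collapse whitespace so trivially different prompts share a cache entry.
    normalized = " ".join(prompt.split())
    return cached_completion(choose_model(normalized), normalized)
```

Calling `answer` twice with prompts that differ only in spacing triggers a single simulated model call; the second result is served from the cache.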

Common Pitfalls to Avoid:

- Over-autonomy: Agents making critical business decisions without proper guardrails

- Tool Sprawl: Integrating too many tools without proper orchestration

- Memory Leakage: Inadequate memory management leading to context confusion

- Security Gaps: Insufficient access controls for agent-system interactions
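
As an illustration of guarding against over-autonomy and security gaps, the sketch below checks each proposed action against a per-role allowlist and routes high-value actions to a human. The role names, action names, and the 10,000 threshold are hypothetical values chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ActionRequest:
    agent_role: str
    action: str
    amount: float = 0.0  # monetary impact of the action, if any


# Hypothetical access-control policy: which role may perform which action.
ALLOWED_ACTIONS = {
    "maintenance_agent": {"schedule_maintenance", "order_parts"},
    "fraud_agent": {"block_card", "notify_customer"},
}

# Hypothetical limit above which a human must approve the action.
APPROVAL_THRESHOLD = 10_000.0


def review(request: ActionRequest) -> str:
    """Return 'allow', 'needs_human_approval', or 'deny' for a request."""
    allowed = ALLOWED_ACTIONS.get(request.agent_role, set())
    if request.action not in allowed:
        return "deny"                   # access control: not on the allowlist
    if request.amount > APPROVAL_THRESHOLD:
        return "needs_human_approval"   # guardrail: keep a human in the loop
    return "allow"
```

Placing this check between the planner and tool execution means every action passes through the same policy, regardless of which tool it targets.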

Industry Use Case / Real-World Application

Manufacturing: Predictive Maintenance Revolution

Before: Aditya Birla Chemicals manually monitored plant sensor data across isolated systems. Maintenance teams responded to equipment failures only after they occurred, resulting in 15-20% unplanned downtime and $2M in annual losses.

After: Deployed autonomous maintenance agents that:

- Continuously analyze IoT sensor streams from 500+ equipment points

- Predict failures 72 hours in advance with 89% accuracy

- Automatically schedule maintenance, order parts, and allocate technician resources

- Result: Reduced unplanned downtime by 30% and saved $600K annually

BFSI: Autonomous Fraud Detection

Implementation: HDFC Bank deployed fraud detection agents that:

- Monitor transaction patterns across 40M+ customers in real-time

- Automatically investigate suspicious activities using multiple data sources

- Execute immediate response actions (card blocking, customer notifications)

- Learn from false positives to improve detection accuracy

Retail: Dynamic Supply Chain Optimization

Case Study: Flipkart’s inventory management agents:

- Predict demand fluctuations using 200+ variables (weather, events, trends)

- Automatically adjust procurement orders and distribution strategies

- Coordinate with logistics partners for optimal delivery routing

- Impact: Reduced inventory holding costs by 25% while improving delivery times

Future Trends & Roadmap

The Evolution Trajectory:

2025 Developments:

- Multi-Agent Systems: Teams of specialized agents collaborating on complex enterprise workflows

- Self-Improving Agents: Systems that autonomously update their capabilities based on performance feedback

- Industry-Specific Agents: Pre-trained agents for vertical markets (FinTech, HealthTech, etc.)

Emerging Capabilities:

- Emotional Intelligence: Agents that understand and respond to human emotional contexts

- Cross-Platform Integration: Seamless operation across cloud, on-premise, and edge environments

- Regulatory Compliance: Built-in compliance monitoring and reporting capabilities

Databricks Roadmap Integration:

Databricks is developing “AI Agent Workspaces” that will enable:

- Native agent deployment on Databricks clusters

- Direct integration with Unity Catalog for governance

- MLflow-based agent performance tracking and optimization

- Delta Live Tables for real-time agent decision logging

Technology Convergence:

The future points toward “Agentic Data Platforms” where AI agents become the primary interface for data operations, replacing traditional dashboard-driven analytics with autonomous insights and actions.

Key Takeaway:

The transition from chatbots to AI agents isn’t just a technological upgrade—it’s a fundamental shift toward autonomous enterprise operations. Organizations that embrace this transformation now will gain significant competitive advantages in operational efficiency, decision-making speed, and scalability. The question isn’t whether to make this transition, but how quickly you can implement it strategically.

References:

- Market Research: AI Agent Market Projections 2024-2030

- Databricks Summit 2024: Agent Architecture Announcements

- Enterprise Case Studies: Manufacturing and BFSI Implementations